An unsupervised image fusion method based on deep learning

A technology combining image fusion and deep learning, applied in the field of image processing. It addresses problems such as the lack of an evaluation index for image fusion results, difficulty of learning, and difficulty of deployment on mobile terminals, and achieves high-quality fusion results.



Embodiment Construction

[0024] The present invention is now described in further detail with reference to the accompanying drawings.

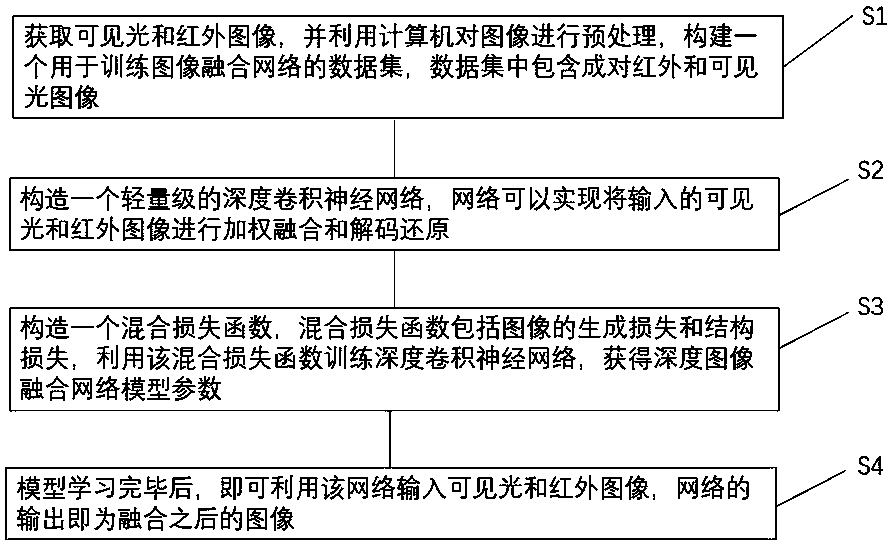

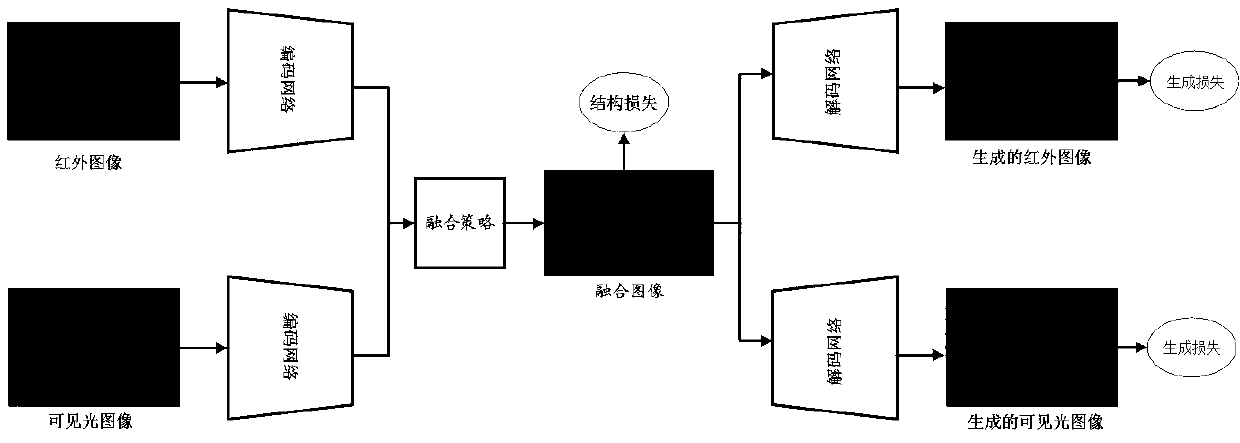

[0025] As shown in Figure 1, a lightweight unsupervised image fusion method based on deep learning includes the following steps:

[0026] Step S1: Acquire visible-light and infrared images and preprocess them on a computer to construct a data set, consisting of paired infrared and visible-light images, for training the image fusion network.

[0027] In this embodiment, the acquired infrared and visible-light images must be paired, that is, each pair is captured at the same position and at the same time; images acquired from different data sources do not need to be rescaled to a common scale. When constructing the training data set, data collection stops once the data set contains a preset number of images.
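The pairing and stopping rule in [0027] can be illustrated with a minimal sketch. It assumes, hypothetically, that infrared and visible-light frames captured at the same position and time are stored in two directories under the same file stem; the function name `collect_pairs`, the directory layout, and the list of file extensions are illustrative assumptions, not part of the source. Note that the sketch stops once it has collected `max_pairs` image pairs, which is one possible reading of the unspecified "preset number of images".

```python
from pathlib import Path

# Hypothetical set of accepted extensions; the source does not specify formats.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg", ".bmp", ".tif", ".tiff"}


def _index_images(directory):
    """Map file stem -> path for every image file in a directory."""
    return {
        p.stem: p
        for p in Path(directory).iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    }


def collect_pairs(ir_dir, vis_dir, max_pairs):
    """Pair infrared and visible images by file stem, stopping at max_pairs.

    Assumption (hypothetical naming scheme): a frame captured at the same
    position and time has the same file stem in both directories.
    Unmatched images are skipped.
    """
    ir_files = _index_images(ir_dir)
    vis_files = _index_images(vis_dir)
    pairs = []
    # Sorting the shared stems makes the collection order deterministic.
    for stem in sorted(ir_files.keys() & vis_files.keys()):
        if len(pairs) >= max_pairs:
            break  # preset data-set size reached: stop collecting
        pairs.append((ir_files[stem], vis_files[stem]))
    return pairs
```

Matching on file stems keeps the sketch simple; a real acquisition pipeline might instead match on capture timestamps or sensor metadata.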

[0028] Specifically, step S1 includes the following:

[0029] 1.1. The infrared and visible light images to be col...

