Cache Data Processing Method and Cache

A data processing technology in the field of caches and cache data processing. It addresses problems such as degraded CPU operating efficiency, avoids side-channel attacks, and has a simple hardware implementation.


Embodiment Construction

[0030] The specific embodiments of the present invention will be described in detail below in conjunction with the accompanying drawings, but it should be understood that the protection scope of the present invention is not limited by the specific embodiments.

[0031] Unless expressly stated otherwise, throughout the specification and claims, the term "comprise" and its variations such as "comprises" or "comprising" will be understood to include the stated elements or components without excluding other elements or components.

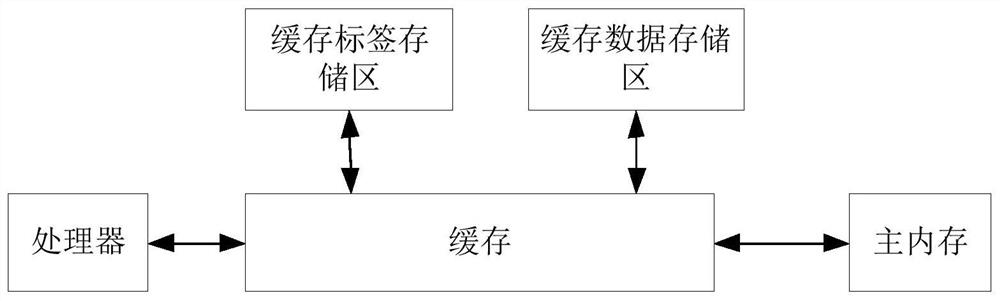

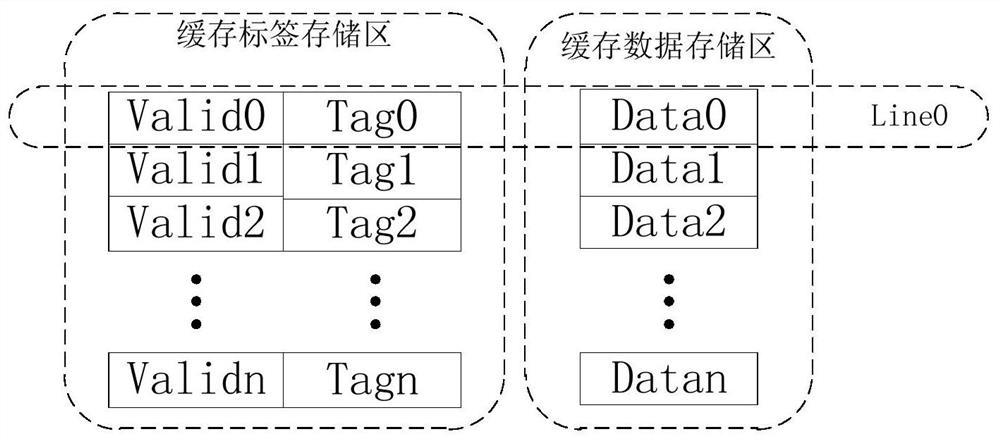

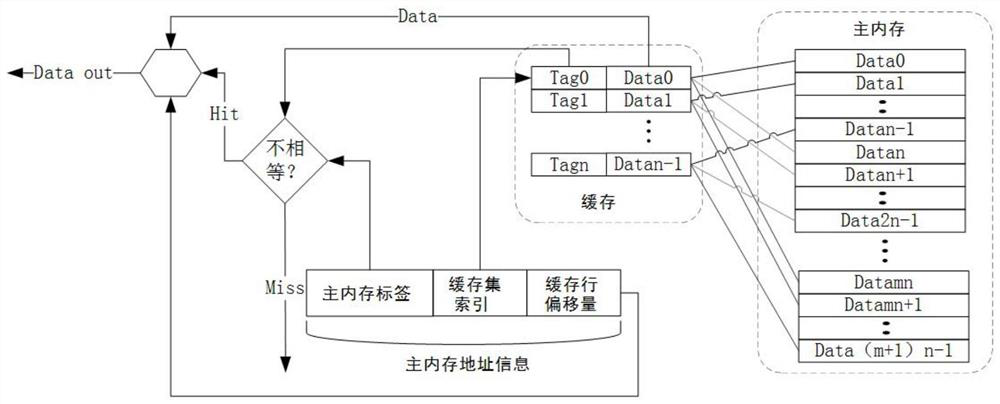

[0032] To overcome the shortcomings of the prior art, the present invention provides a Cache data processing method and a Cache that supports random mapping from Main Memory to Cache Memory. Unlike existing Caches, in the present invention the correspondence between Main Memory and Cache lines is not fixed. This can effectively avoid side-channel attacks based on Cache access time, and the...
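The paragraph above states only that the Main Memory to Cache line correspondence is not fixed; the concrete mechanism is cut off in this excerpt. As a minimal sketch, assuming a fully associative organization in which each missed block is placed in a uniformly random line, the idea can be modelled as below. The class `RandomMappedCache`, the helper `direct_mapped_line`, the 64-byte block size and the random-victim policy are all hypothetical choices for illustration, not the patented design.

```python
import random
from typing import Dict, List, Optional


def direct_mapped_line(address: int, num_lines: int, block_size: int = 64) -> int:
    """Conventional fixed mapping, for contrast: block number modulo line count."""
    return (address // block_size) % num_lines


class RandomMappedCache:
    """Toy model (hypothetical): any Main Memory block may occupy any Cache line.

    Assumption, not from the source: on a miss the block is written into a
    line chosen uniformly at random, evicting whatever that line held.
    """

    def __init__(self, num_lines: int, block_size: int = 64, seed: Optional[int] = None):
        self.num_lines = num_lines
        self.block_size = block_size
        self.rng = random.Random(seed)  # stands in for a hardware random source
        # line index -> block number currently stored there (None = empty)
        self.lines: List[Optional[int]] = [None] * num_lines
        # block number -> line index; models the tag lookup
        self.where: Dict[int, int] = {}
        self.hits = 0
        self.misses = 0

    def access(self, address: int) -> bool:
        """Return True on a hit; on a miss, fill a random line and return False."""
        block = address // self.block_size
        if block in self.where:
            self.hits += 1
            return True
        self.misses += 1
        victim = self.rng.randrange(self.num_lines)  # not derived from the address
        old = self.lines[victim]
        if old is not None:
            del self.where[old]  # evicted block is no longer cached
        self.lines[victim] = block
        self.where[block] = victim
        return False

    def line_of(self, address: int) -> Optional[int]:
        """Line currently holding the block of `address`, or None if not cached."""
        return self.where.get(address // self.block_size)


if __name__ == "__main__":
    cache = RandomMappedCache(num_lines=8, seed=1)
    cache.access(0x1000)
    print("fixed mapping line:", direct_mapped_line(0x1000, 8))
    print("random mapping line:", cache.line_of(0x1000))
```

With `direct_mapped_line` a given address always lands in the same line, so an attacker can build an eviction set for it in advance. In the random model the line depends on the random draw rather than on the address, which is presumably the property that frustrates access-time side channels. A hardware version would use a tag array and a hardware random number source instead of a Python dict and `random.Random`; the source does not specify those details here.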
