Data preprocessing method, map construction method, loop detection method and system

A data preprocessing and semantic map technology, applied in the field of navigation, which can solve problems such as positioning difficulties.

Examples

Embodiment 1

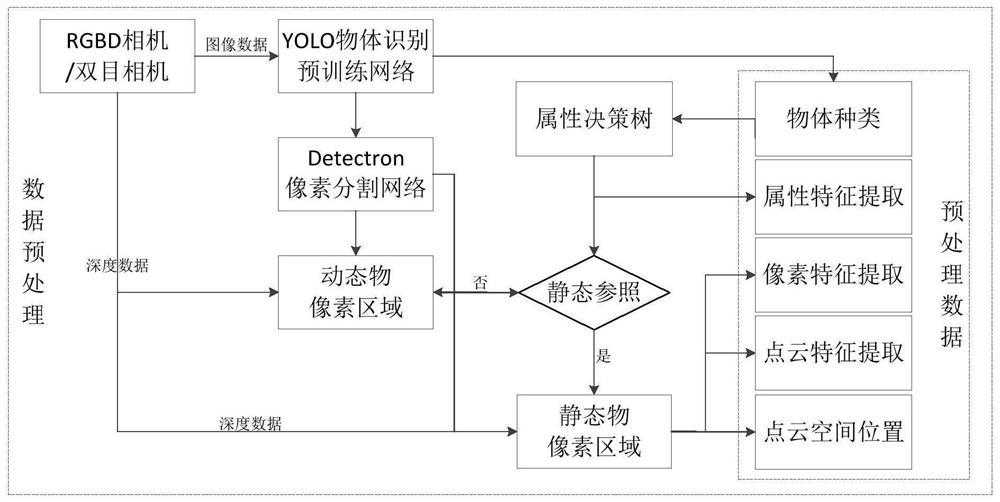

[0082] As shown in Figure 2, the data preprocessing method of this embodiment includes:

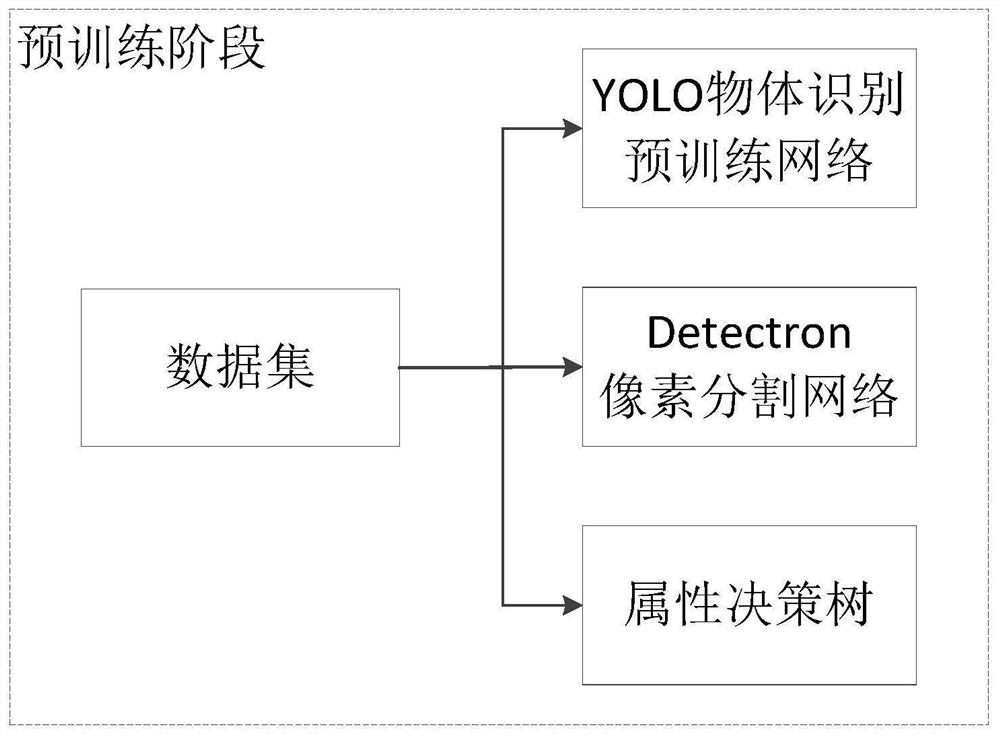

[0083] (1) Pre-training stage:

[0084] As shown in Figure 1, an object recognition pre-training network, a pixel segmentation network, and an attribute decision tree are constructed and trained.

[0085] In one implementation, the object recognition pre-training network is a deep neural network that determines the granularity and content of object recognition, determines the type and position of each object in the image according to that granularity and content, and frames the object (encloses it in a bounding box).
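The text leaves the output format of the recognition network open. As a minimal sketch, assuming a detector that, like YOLO, reports each box as a normalized center, width, and height, the record below holds an object's type, confidence, and pixel-space frame. `Detection`, `center_to_corners`, and `filter_by_score` are hypothetical names, and the 0.5 threshold is an arbitrary example value.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One recognized object: its type, confidence, and pixel-space frame (bounding box)."""
    label: str
    score: float
    x1: float
    y1: float
    x2: float
    y2: float


def center_to_corners(label, score, cx, cy, w, h, img_w, img_h):
    """Convert a normalized center-format box (cx, cy, w, h in [0, 1]) to clipped pixel corners."""
    x1 = max(0.0, (cx - w / 2) * img_w)
    y1 = max(0.0, (cy - h / 2) * img_h)
    x2 = min(float(img_w), (cx + w / 2) * img_w)
    y2 = min(float(img_h), (cy + h / 2) * img_h)
    return Detection(label, score, x1, y1, x2, y2)


def filter_by_score(detections, threshold=0.5):
    """Drop low-confidence detections (hypothetical threshold)."""
    return [d for d in detections if d.score >= threshold]
```

For a 640x480 image, `center_to_corners("chair", 0.9, 0.5, 0.5, 0.25, 0.5, 640, 480)` yields the frame (240, 120) to (400, 360). Clipping keeps boxes that extend past the image border inside it.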

[0086] For example, the object recognition pre-training network uses the YOLO object detection network.

[0087] YOLO (You Only Look Once: Unified, Real-Time Object Detection) is an object detection system based on a single neural network, proposed by Joseph Redmon, Ali Farhadi, et al. in 2015. YOLO is a convolutional neural network that can predict multiple box position...
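YOLO-style detectors emit many overlapping candidate boxes, and a standard post-processing step keeps only the highest-scoring box among heavily overlapping candidates of the same class (greedy non-maximum suppression, NMS). The sketch below is a generic per-class NMS, not a procedure stated in this patent; the 0.5 IoU threshold is an illustrative assumption.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, labels, iou_threshold=0.5):
    """Greedy per-class NMS; returns indices of kept boxes in descending score order."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        # Keep box i unless a higher-scoring kept box of the same class overlaps it too much.
        if all(labels[k] != labels[i] or iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep
```

Running it per class means that a chair box overlapping a cup box does not suppress it; only duplicate boxes of the same object type are merged.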

Embodiment 2

[0110] Corresponding to Embodiment 1, this embodiment provides a data preprocessing system, including:

[0111] (1) A pre-training module, configured to:

[0112] Construct and train the object recognition pre-training network, the pixel segmentation network, and the attribute decision tree.

[0113] In one implementation, the object recognition pre-training network is a deep neural network that determines the granularity and content of object recognition, determines the type and position of each object in the image according to that granularity and content, and frames the object (encloses it in a bounding box).

[0114] For example, the object recognition pre-training network uses the YOLO object detection network.

[0115] YOLO (You Only Look Once: Unified, Real-Time Object Detection) is an object detection system based on a single neural network, proposed by Joseph Redmon, Ali Farhadi, et al. in 2015. YOLO is a convolutional neural network that can predict multiple box positions and categorie...
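Embodiment 2 repackages the method as modules. The following is a minimal sketch of how the pre-training module might hold its three trained components and apply them to an image. The call order (detect, then segment inside each box, then classify attributes), the component signatures, the attribute names, and the toy stand-ins are all assumptions for illustration, not the design described in the patent.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels, end-exclusive


@dataclass
class PreTrainingModule:
    """Hypothetical container for the three trained components."""
    detector: Callable[[object], List[Tuple[str, Box]]]        # image -> [(label, box)]
    segmenter: Callable[[object, Box], List[Tuple[int, int]]]  # (image, box) -> object pixels
    attribute_tree: Callable[[str, int], Dict[str, str]]       # (label, pixel count) -> attributes

    def process(self, image):
        results = []
        for label, box in self.detector(image):
            pixels = self.segmenter(image, box)
            attrs = self.attribute_tree(label, len(pixels))
            results.append({"label": label, "box": box, "pixels": pixels, "attributes": attrs})
        return results


# Toy stand-ins (hypothetical) so the sketch runs without trained models.
def toy_detector(image):
    return [("door", (0, 0, 2, 2))]


def toy_segmenter(image, box):
    x1, y1, x2, y2 = box
    return [(x, y) for y in range(y1, y2) for x in range(x1, x2) if image[y][x]]


def toy_attribute_tree(label, n_pixels):
    # A two-level decision tree written as nested conditionals.
    size = "large" if n_pixels > 2 else "small"
    if label in ("door", "wall", "shelf"):
        return {"mobility": "static", "size": size}
    return {"mobility": "movable", "size": size}
```

Keeping each component behind a plain callable lets a real detector, segmentation network, or fitted tree be swapped in without changing `process`.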

Embodiment 3

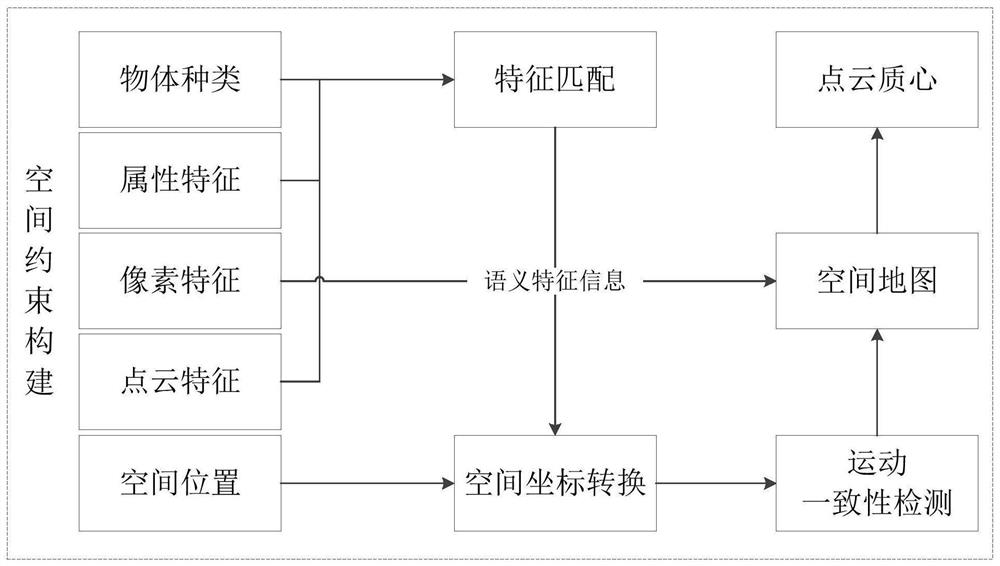

[0128] As shown in Figure 3, this embodiment provides a method for constructing a spatial constraint map, including:

[0129] Using the data preprocessing method shown in Figure 2, obtain the corresponding spatial reference points of the same object in the image data captured before and after the robot moves;
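The source does not say how the same object is identified in the two frames. The sketch below assumes each detected object already carries a stable identifier (hypothetical keys such as `"chair#1"`) and that each object's reference points are listed in a consistent order, so the j-th point of object i before the move pairs with the j-th point of the same object after it. `match_reference_points` is a hypothetical helper.

```python
from typing import Dict, List, Tuple

Point2D = Tuple[float, float]
# Hypothetical layout: object id -> ordered reference points of that object in one frame.
Frame = Dict[str, List[Point2D]]


def match_reference_points(before: Frame, after: Frame) -> List[Tuple[str, int, Point2D, Point2D]]:
    """Pair the j-th reference point of each object seen in both frames."""
    pairs = []
    # Only objects visible both before and after the move can constrain the motion.
    for obj_id in sorted(before.keys() & after.keys()):
        pts_a, pts_b = before[obj_id], after[obj_id]
        for j in range(min(len(pts_a), len(pts_b))):
            pairs.append((obj_id, j, pts_a[j], pts_b[j]))
    return pairs
```

Objects that appear in only one frame (here `door#1` or `lamp#1` in a test scene) are simply skipped.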

[0130] Convert the corresponding spatial reference points of the same object into spatial coordinates, calculate the robot's motion and perform consistency sampling to obtain the robot's motion estimate, and form the position-scale constraint;
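The step above can be sketched as follows, under several assumptions not stated in the source: depth is available for each reference point (for example from an RGB-D camera) so pixels can be back-projected with pinhole intrinsics `fx, fy, cx, cy`; the rigid motion is fitted with the Kabsch/SVD method; and the "consistency sampling" is read as RANSAC (random sample consensus). All function names and the threshold values are hypothetical.

```python
import numpy as np


def back_project(uv, depth, fx, fy, cx, cy):
    """Pinhole back-projection: pixels uv (N, 2) with depths (N,) -> camera-frame points (N, 3)."""
    x = (uv[:, 0] - cx) * depth / fx
    y = (uv[:, 1] - cy) * depth / fy
    return np.stack([x, y, depth], axis=1)


def fit_rigid(p, q):
    """Kabsch/SVD least-squares fit of R, t such that q[i] ~= R @ p[i] + t."""
    pc, qc = p.mean(axis=0), q.mean(axis=0)
    h = (p - pc).T @ (q - qc)  # 3x3 cross-covariance of the centered point sets
    u, _, vt = np.linalg.svd(h)
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0  # guard against reflections
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    return r, qc - r @ pc


def ransac_motion(p, q, iters=200, thresh=0.05, seed=0):
    """RANSAC over minimal 3-point samples, then refit on the largest consistent inlier set."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(p), dtype=bool)
    for _ in range(iters):
        idx = rng.choice(len(p), size=3, replace=False)
        r, t = fit_rigid(p[idx], q[idx])
        inliers = np.linalg.norm(p @ r.T + t - q, axis=1) < thresh
        if inliers.sum() > best.sum():
            best = inliers
    if best.sum() < 3:
        raise ValueError("too few consistent correspondences")
    r, t = fit_rigid(p[best], q[best])
    return r, t, best
```

With `p` taken from the earlier frame and `q` from the later one, `(R, t)` maps static points from the earlier camera frame into the later one; the camera's (and hence the robot's) own motion is the inverse of this transform. Mismatched reference points fall outside `thresh` and are excluded before the final fit.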

[0131] Record each object category in the scene together with its spatial point-cloud position and semantic feature description, and store them in the map to construct the spatial constraint map.
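One possible in-memory layout for the stored entries is sketched below, indexed by object category so that all instances of a type can be looked up together. The class names, the tuple-based point cloud, the float-vector semantic feature, and the `centroid` helper are hypothetical choices for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple


@dataclass
class MapObject:
    category: str
    points: List[Tuple[float, float, float]]  # spatial point-cloud position in the map frame
    feature: List[float]                      # semantic feature description (assumed vector form)

    def centroid(self) -> Tuple[float, float, float]:
        """Mean of the object's points, a compact position summary."""
        n = len(self.points)
        return tuple(sum(pt[k] for pt in self.points) / n for k in range(3))


@dataclass
class SpatialConstraintMap:
    objects: Dict[str, List[MapObject]] = field(default_factory=dict)

    def add(self, obj: MapObject) -> None:
        """Store an object under its category."""
        self.objects.setdefault(obj.category, []).append(obj)

    def query(self, category: str) -> List[MapObject]:
        """Return all stored objects of a category (empty list if none)."""
        return self.objects.get(category, [])
```

Grouping by category keeps lookups cheap when a newly observed object only needs to be compared with stored objects of the same type.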

[0132] For example, between data captured at two time instants, the motion of the robot is calculated from the spatial reference points. Let the j-th spatial reference point of the i-th obj...
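The definition in [0132] is truncated. One standard way to formalize a motion computation of this kind, under assumed notation ($\mathbf{p}^{(1)}_{ij}$ and $\mathbf{p}^{(2)}_{ij}$ for the 3D coordinates of the j-th reference point of the i-th object at the earlier and later instant, $N$ for the total number of matched points), is a rigid-motion least-squares fit with the closed-form SVD (Kabsch) solution. It is shown for orientation only and is not necessarily the patent's formula.

```latex
\mathbf{p}^{(2)}_{ij} = R\,\mathbf{p}^{(1)}_{ij} + \mathbf{t}, \qquad R \in SO(3),\ \mathbf{t} \in \mathbb{R}^{3}

(R^{*}, \mathbf{t}^{*}) = \arg\min_{R \in SO(3),\ \mathbf{t}} \sum_{i}\sum_{j}
  \bigl\lVert \mathbf{p}^{(2)}_{ij} - R\,\mathbf{p}^{(1)}_{ij} - \mathbf{t} \bigr\rVert^{2}

\bar{\mathbf{p}}^{(k)} = \frac{1}{N}\sum_{i,j} \mathbf{p}^{(k)}_{ij}, \qquad
H = \sum_{i,j} \bigl(\mathbf{p}^{(1)}_{ij} - \bar{\mathbf{p}}^{(1)}\bigr)
               \bigl(\mathbf{p}^{(2)}_{ij} - \bar{\mathbf{p}}^{(2)}\bigr)^{\top} = U \Sigma V^{\top}

R^{*} = V\,\mathrm{diag}\bigl(1,\ 1,\ \det(V U^{\top})\bigr)\,U^{\top}, \qquad
\mathbf{t}^{*} = \bar{\mathbf{p}}^{(2)} - R^{*}\,\bar{\mathbf{p}}^{(1)}
```

The $\det(VU^{\top})$ entry forces a proper rotation when the unconstrained SVD solution would be a reflection.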
