Visual angle synchronization method and device in virtual reality VR live broadcast

A virtual reality synchronization technology, applied in 2D image generation, data processing input/output, instruments, and related fields. It addresses the problem of synchronizing viewing angles and achieves smooth changes in the displayed viewing angle.


Examples

Embodiment 1

[0075] Embodiment 1 mainly introduces the viewing angle synchronization solution for live broadcast of purely display-oriented, movie-like VR content. In this solution, the display angle of view used by the VR sending device when displaying the VR content is related to the motion of the VR sending device, but the display angle of view used by the VR receiving device for the current image frame to be displayed is independent of the motion of the VR receiving device. Figure 1 is a schematic diagram of a scene of an exemplary embodiment of the present application in practical use; it shows viewing angle synchronization in a VR live video broadcast. The user of the VR sending device 101 is the live broadcast user, also known as the sender user. The sender user uses the VR sending device 101 such as a VR head-mounted display, or a mobile te...

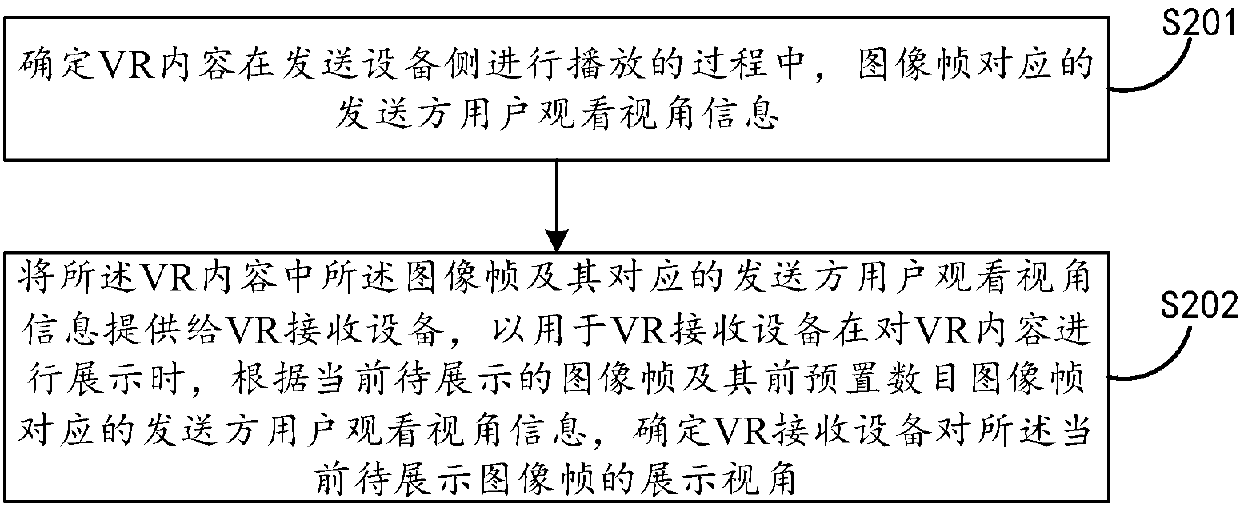

Embodiment 2

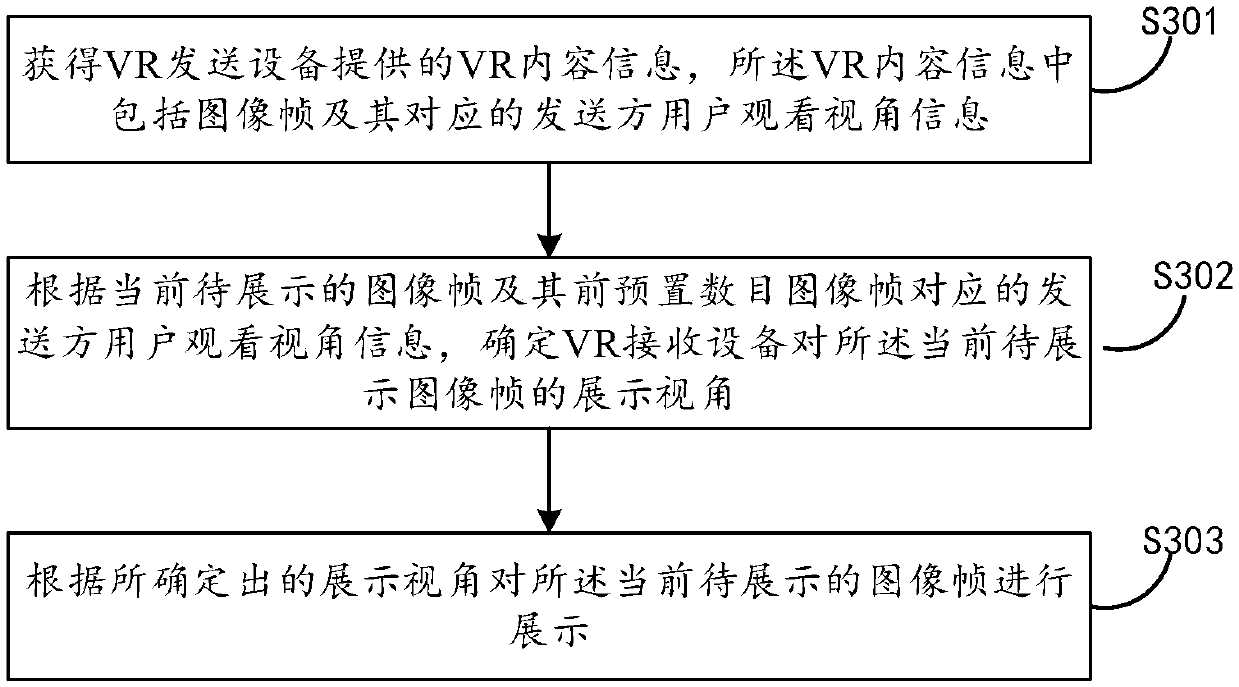

[0093] Embodiment 2 corresponds to Embodiment 1 and provides, from the perspective of the VR receiving device, a method for synchronizing the viewing angle in a virtual reality (VR) live broadcast. Referring to Figure 3, the method may specifically include:

[0094] S301: Obtain VR content information provided by the VR sending device, where the VR content information includes image frames and corresponding viewing angle information of the sending user;

[0095] S302: Based on the sender user's viewing angle information corresponding to the current image frame to be displayed and to a preset number of image frames preceding it, determine the display angle of view at which the VR receiving device displays the current image frame;

[0096] Specifically, when determining the display angle of view of the current image frame to be displayed by the VR receiving device, the average value of the viewing angle information of the sender user corresponding to t...
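Steps S301 and S302 can be illustrated with a minimal sketch. It assumes that the sender user's viewing angle is carried as a yaw/pitch pair in degrees and that the receiver takes a plain arithmetic mean over a sliding window; the names `FramePacket`, `ViewAngleSmoother`, `yaw_deg`, `pitch_deg` and `window` are hypothetical and do not come from the patent text. For simplicity it also assumes the angles do not wrap around (for example from 359° to 0°); a real implementation would need to handle that case.

```python
from collections import deque
from dataclasses import dataclass
from typing import Deque, Tuple


@dataclass
class FramePacket:
    """VR content information for one frame (S301): a frame plus the sender's viewing angle."""
    frame_id: int
    yaw_deg: float    # hypothetical field: sender user's horizontal viewing angle
    pitch_deg: float  # hypothetical field: sender user's vertical viewing angle


class ViewAngleSmoother:
    """Averages the sender's viewing angle over the current frame and the frames before it (S302)."""

    def __init__(self, window: int) -> None:
        # window = the current frame plus the preset number of preceding frames
        self._history: Deque[Tuple[float, float]] = deque(maxlen=window)

    def display_angle(self, packet: FramePacket) -> Tuple[float, float]:
        # Record the newest angle; the deque silently drops the oldest one when full.
        self._history.append((packet.yaw_deg, packet.pitch_deg))
        n = len(self._history)
        yaw = sum(angle[0] for angle in self._history) / n
        pitch = sum(angle[1] for angle in self._history) / n
        return yaw, pitch


if __name__ == "__main__":
    smoother = ViewAngleSmoother(window=3)
    # The sender turns quickly from 0 to 90 degrees of yaw over four frames.
    for frame_id, yaw in enumerate([0.0, 30.0, 60.0, 90.0]):
        print(frame_id, smoother.display_angle(FramePacket(frame_id, yaw, 0.0)))
```

With a window of three frames, the displayed yaw trails the sender's yaw (0, 15, 30, 60 degrees instead of 0, 30, 60, 90), which is how averaging smooths out abrupt head movements at the cost of a small lag.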

Embodiment 3

[0102] Embodiment 3 mainly addresses viewing angle synchronization during live broadcast of game-oriented, exploratory VR content. In this case, the display angle of view of the VR content on the VR sending device is related to the motion of the VR sending device, and the display angle of view of the VR content on the VR receiving device is related to the motion of the VR receiving device. Embodiment 3 first provides a method for synchronizing viewing angles in a virtual reality (VR) live broadcast from the perspective of the VR sending device. Referring to Figure 4, the method may include:

[0103] S401: Determine viewing angle information of the sender user corresponding to the image frame during the playback of the VR content on the sending device side;

[0104] This step may be the same as step S201 in Embodiment 1, that is, even if the VR receiving device displays VR content, the specific viewing angle of view ch...
