Omission recovery method for short text understanding

An omission recovery method for short text understanding, applicable to electrical digital data processing, special-purpose data processing applications, instruments, and similar fields. It addresses two problems: the model's loss of structural information about the source sequence, and low accuracy.


Embodiment Construction

[0100] The present invention is further described below with reference to the accompanying drawings and specific embodiments, so that those skilled in the art can better understand and implement it. The examples given are not intended to limit the present invention.

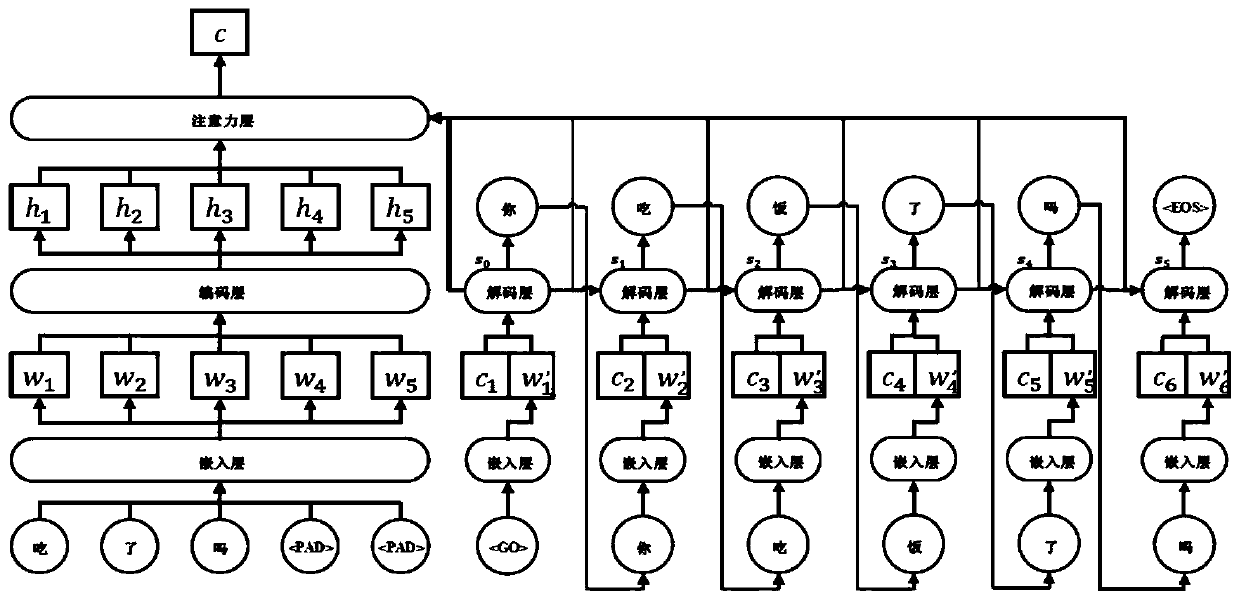

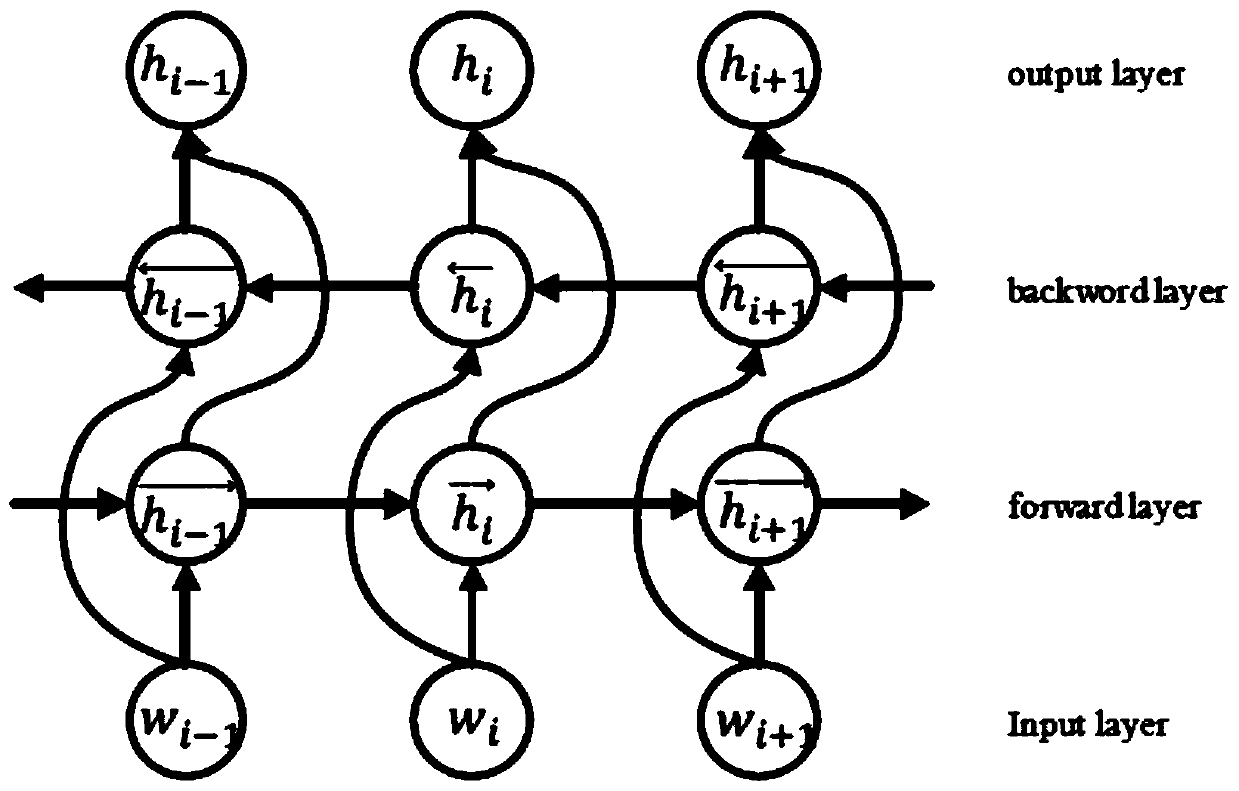

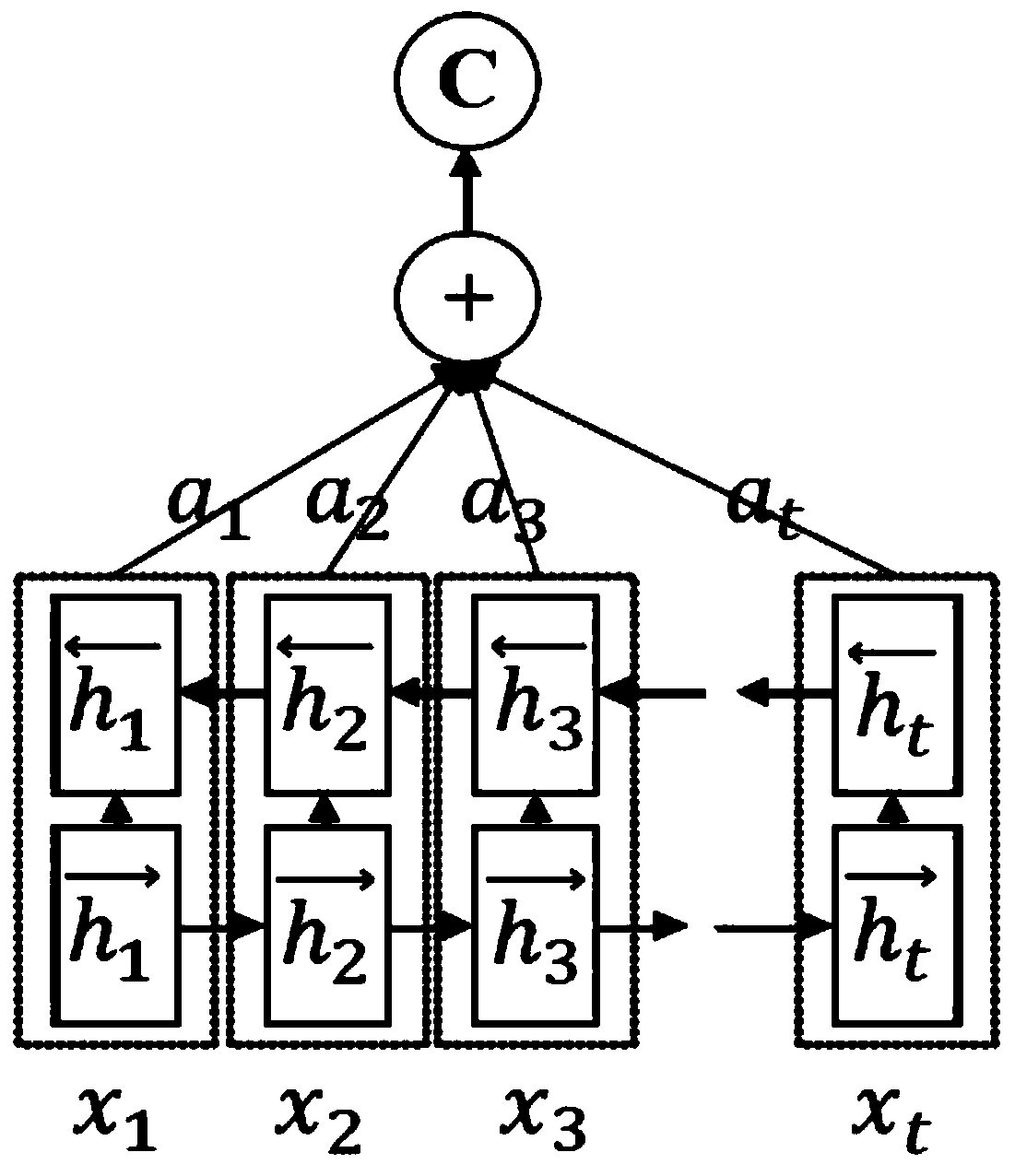

[0101] The omission recovery model used in the present invention is a neural network framework based on an encoder and a decoder. The model consists of three main parts: an embedding layer, an encoding layer, and a decoding layer. The embedding layer obtains distributed representations of discrete words; the encoding layer extracts features from the text; the decoding layer uses the features extracted by the encoding layer to generate the text with the omitted content restored. Each part is introduced separately below.
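The data flow through the three layers described above can be sketched as follows. This is only a toy illustration under loud assumptions: the embeddings are random rather than learned, the "encoder" is a running mean, and the "decoder" is a greedy nearest-word lookup. None of these stand-ins is the model claimed in the patent, and all function names (`build_embeddings`, `embed`, `encode`, `decode`, `recover`) are hypothetical.

```python
import random

def build_embeddings(vocab, dim, seed=0):
    """Assign each word a random dense vector (stand-in for learned embeddings)."""
    rng = random.Random(seed)
    return {word: [rng.uniform(-1.0, 1.0) for _ in range(dim)] for word in vocab}

def embed(tokens, table):
    """Embedding layer: discrete words -> dense vectors."""
    return [table[tok] for tok in tokens]

def encode(vectors):
    """Encoding layer (toy): feature i is the mean of vectors 0..i."""
    features, running = [], [0.0] * len(vectors[0])
    for i, vec in enumerate(vectors, start=1):
        running = [r + v for r, v in zip(running, vec)]
        features.append([r / i for r in running])
    return features

def decode(features, table):
    """Decoding layer (toy): emit, per feature, the word with the highest dot product."""
    def dot(a, b):
        return sum(x * y for x, y in zip(a, b))
    return [max(table, key=lambda w: dot(table[w], feat)) for feat in features]

def recover(tokens, table):
    """Chain the three layers: embed, then encode, then decode."""
    return decode(encode(embed(tokens, table)), table)

# Hypothetical vocabulary and input, chosen only for illustration.
vocab = ["what", "about", "tomorrow", "weather", "<unk>"]
table = build_embeddings(vocab, dim=8)
output = recover(["what", "about", "tomorrow"], table)
print(output)
```

Because nothing here is trained, the output words are arbitrary. The point is only the shape of the pipeline: a token sequence goes in, passes through dense vectors and encoder features, and a token sequence comes out.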

[0102] Embedding layer

[0103] The main function of the embedding layer (Embedding) is to map discrete word units to a low-dimensional semantic space, a...
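As a hedged illustration of mapping discrete word units to low-dimensional vectors, the sketch below implements a plain lookup table. The class name `EmbeddingLayer`, the `<unk>` fallback token, the dimension, and the Gaussian initialisation are all assumptions made for the example; the source text is truncated before it specifies any of these details.

```python
import random

class EmbeddingLayer:
    """Lookup table from words to dense vectors (illustrative, untrained)."""

    def __init__(self, vocab, dim, seed=0):
        rng = random.Random(seed)
        # Each word gets an integer id, which indexes one row of the matrix.
        self.word_to_id = {word: i for i, word in enumerate(vocab)}
        self.unk_id = self.word_to_id["<unk>"]  # assumed out-of-vocabulary token
        self.matrix = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in vocab]

    def __call__(self, tokens):
        # Words missing from the vocabulary fall back to the <unk> row.
        ids = [self.word_to_id.get(tok, self.unk_id) for tok in tokens]
        return [self.matrix[i] for i in ids]

layer = EmbeddingLayer(["<unk>", "how", "is", "the", "weather"], dim=4)
vectors = layer(["how", "is", "the", "traffic"])  # "traffic" is out of vocabulary
print(len(vectors), len(vectors[0]))
```

In a trained model the rows of the matrix would be learned parameters, so that words used in similar contexts end up close together in the vector space.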
