Human skeleton action recognition method

A human skeleton action recognition method, applied in the field of graphics recognition, that addresses problems such as the failure to adequately capture spatio-temporal feature information and the recognition errors that result.


Embodiment

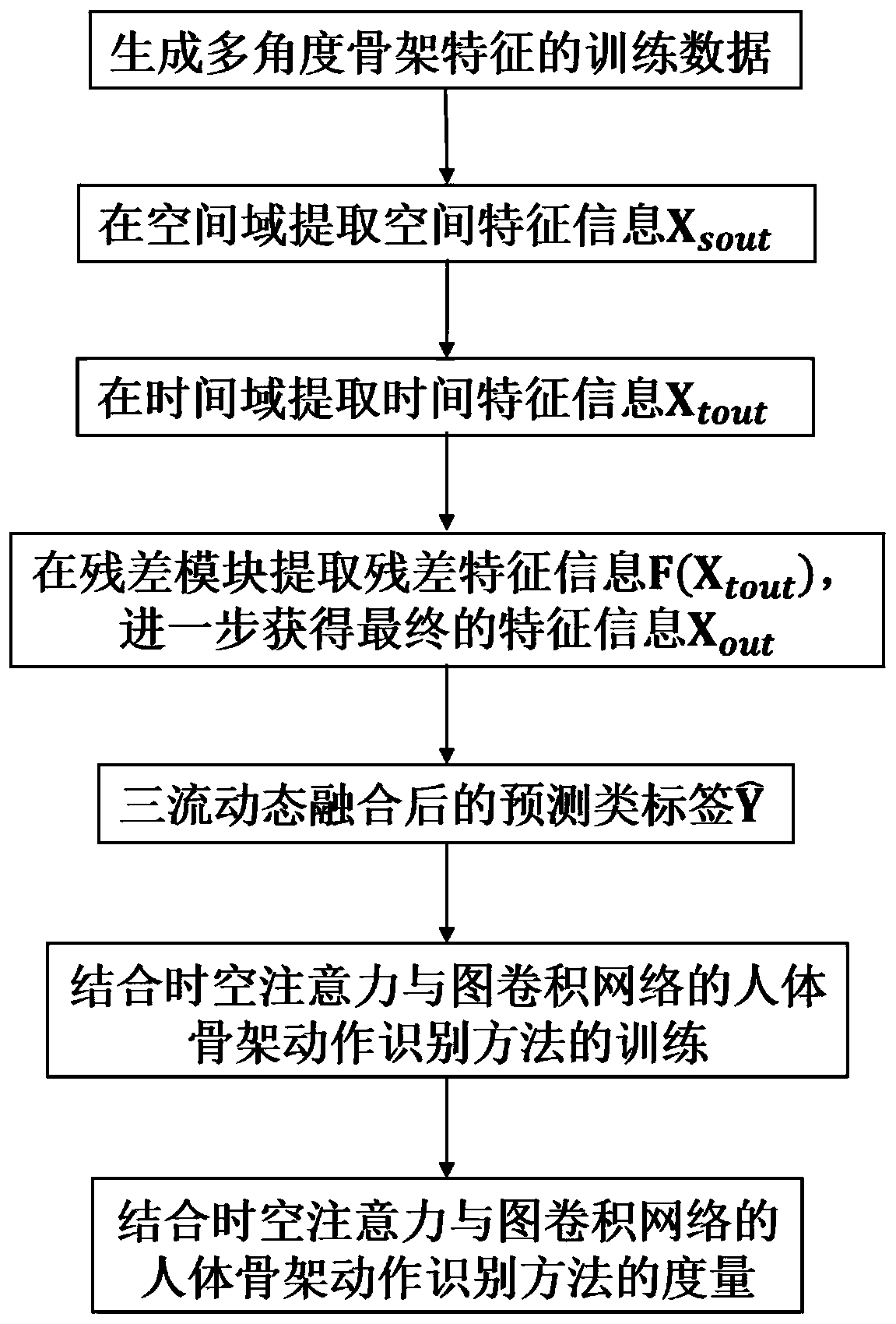

[0100] The specific steps of the action recognition method of this embodiment, which combines spatiotemporal attention with a graph convolutional network, are as follows:

[0101] The first step is to generate training data for multi-angle skeleton features:

[0102] The training data of the multi-angle skeleton feature includes joint information flow data, bone information flow data and motion information flow data,

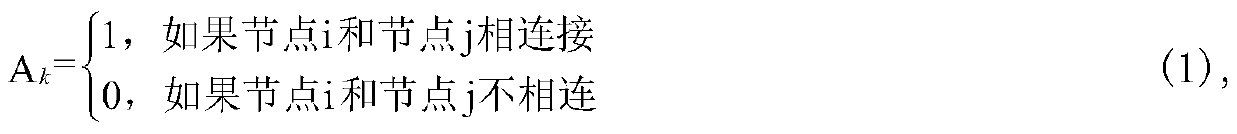

[0103] First, for a set of input video sequences of human skeleton actions, construct an undirected connected graph of the human skeleton, in which the joint points are the vertices and the natural connections between joint points are the edges. This defines the skeleton graph $G = \{V, E\}$, where $V$ is the set of $n$ joint points and $E$ is the set of $m$ skeleton edges. The adjacency matrix of the skeleton graph, $A_k \in \{0,1\}^{n \times n}$, is obtained by the following formula (1):

[0104]
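The graph construction in [0103] can be illustrated with a minimal sketch. Formula (1) is not reproduced in this text, so the code assumes the usual definition for an undirected graph: the entry for joints i and j is 1 when a skeleton edge connects them and 0 otherwise. The function name `build_adjacency`, the 5-joint toy skeleton, and its joint labels are hypothetical and serve only as illustration; they are not taken from the patent.

```python
from typing import List, Tuple


def build_adjacency(n: int, edges: List[Tuple[int, int]]) -> List[List[int]]:
    """Return the symmetric n x n 0/1 adjacency matrix of an undirected graph.

    Assumption: entry [i][j] is 1 if joints i and j share a skeleton edge,
    otherwise 0. This is the standard definition, not necessarily formula (1).
    """
    A = [[0] * n for _ in range(n)]
    for i, j in edges:
        if not (0 <= i < n and 0 <= j < n):
            raise ValueError(f"edge ({i}, {j}) refers to a joint outside 0..{n - 1}")
        # The graph is undirected, so set both directions.
        A[i][j] = 1
        A[j][i] = 1
    return A


# Hypothetical toy skeleton with n = 5 joints and m = 4 edges:
# 0 = head, 1 = neck, 2 = left hand, 3 = right hand, 4 = hip
TOY_EDGES = [(0, 1), (1, 2), (1, 3), (1, 4)]
A = build_adjacency(5, TOY_EDGES)

for row in A:
    print(row)
```

Each undirected edge contributes two symmetric entries, so the matrix for a skeleton with m edges contains exactly 2m ones.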

[0105] Then use the coordinate data of its joint points to obtain the joint s...

