Action recognition method of self-adaptive mode

An action recognition technology with a self-adaptive mode, applied in the field of action recognition, that addresses the problem of low recognition accuracy and achieves the effect of improving accuracy and avoiding the impact.


AI Technical Summary

Problems solved by technology

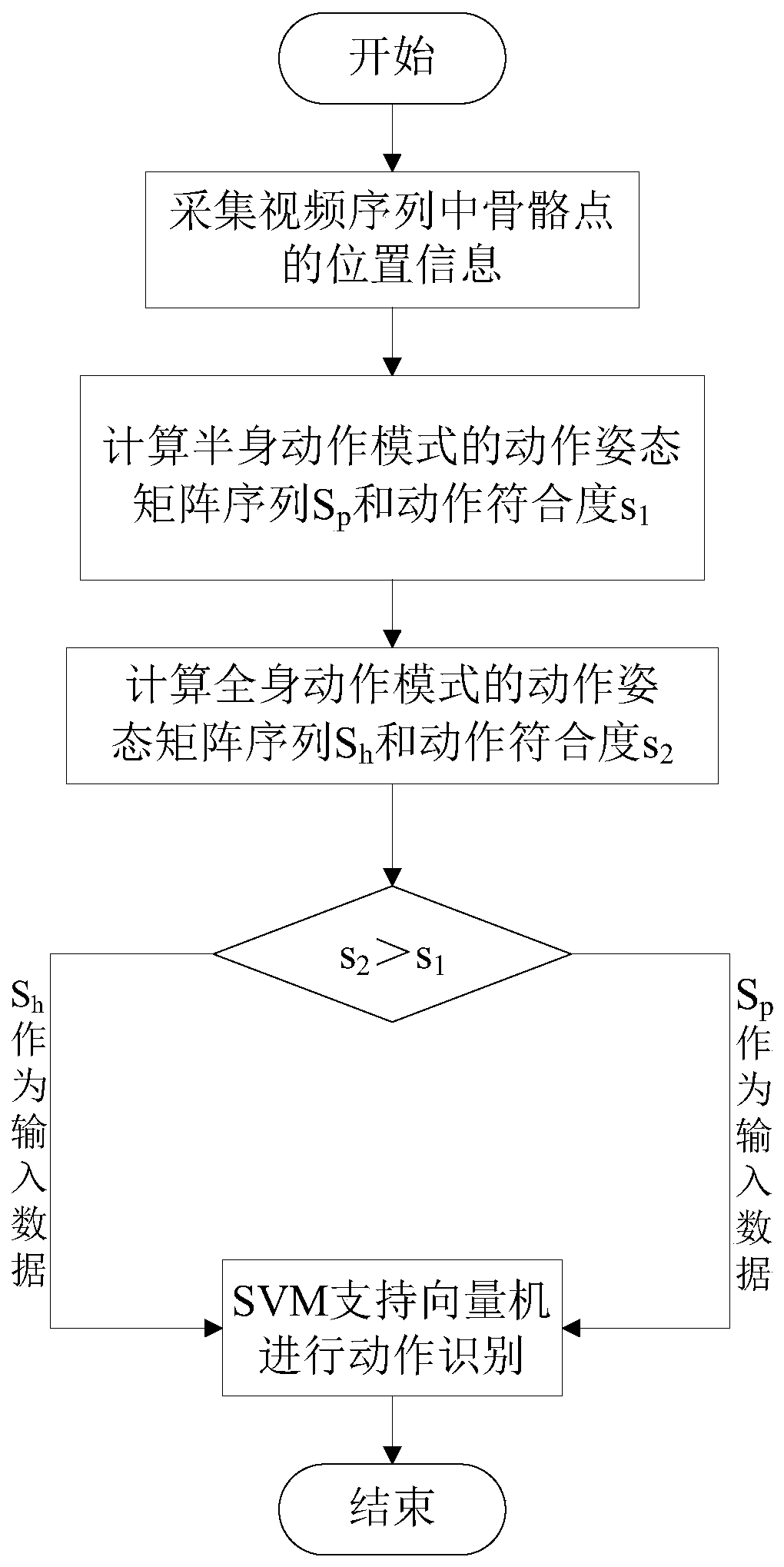

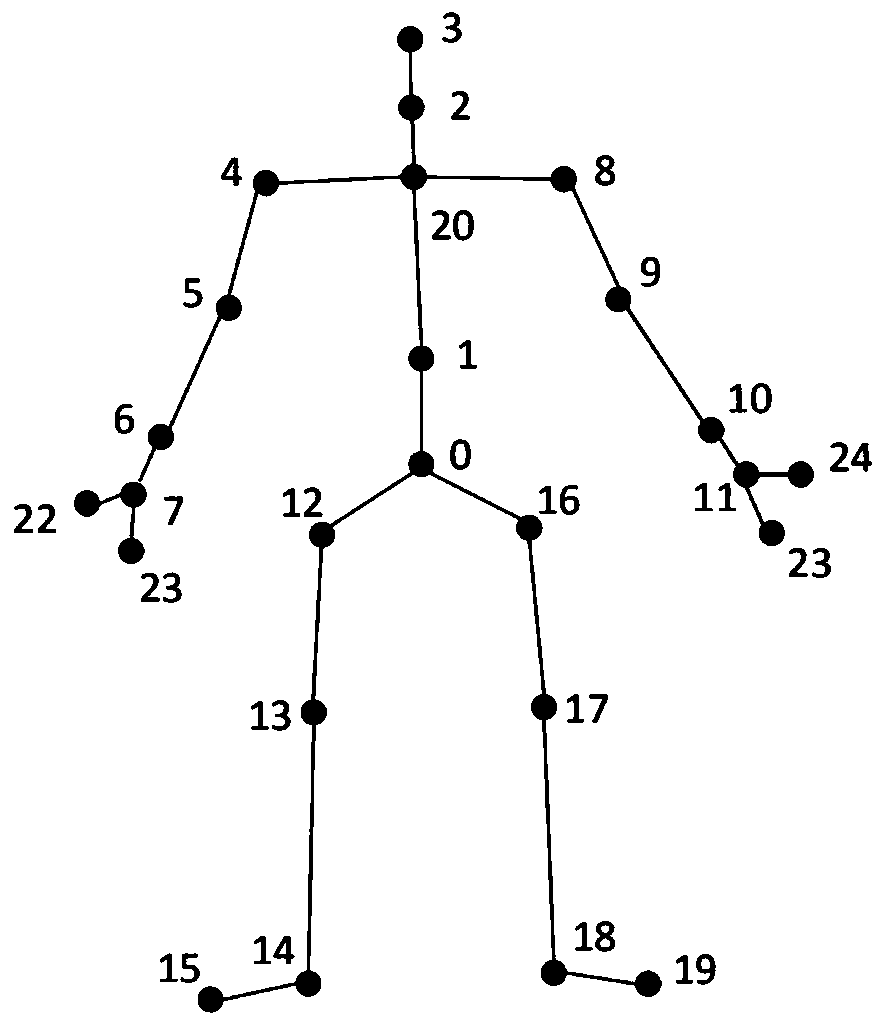

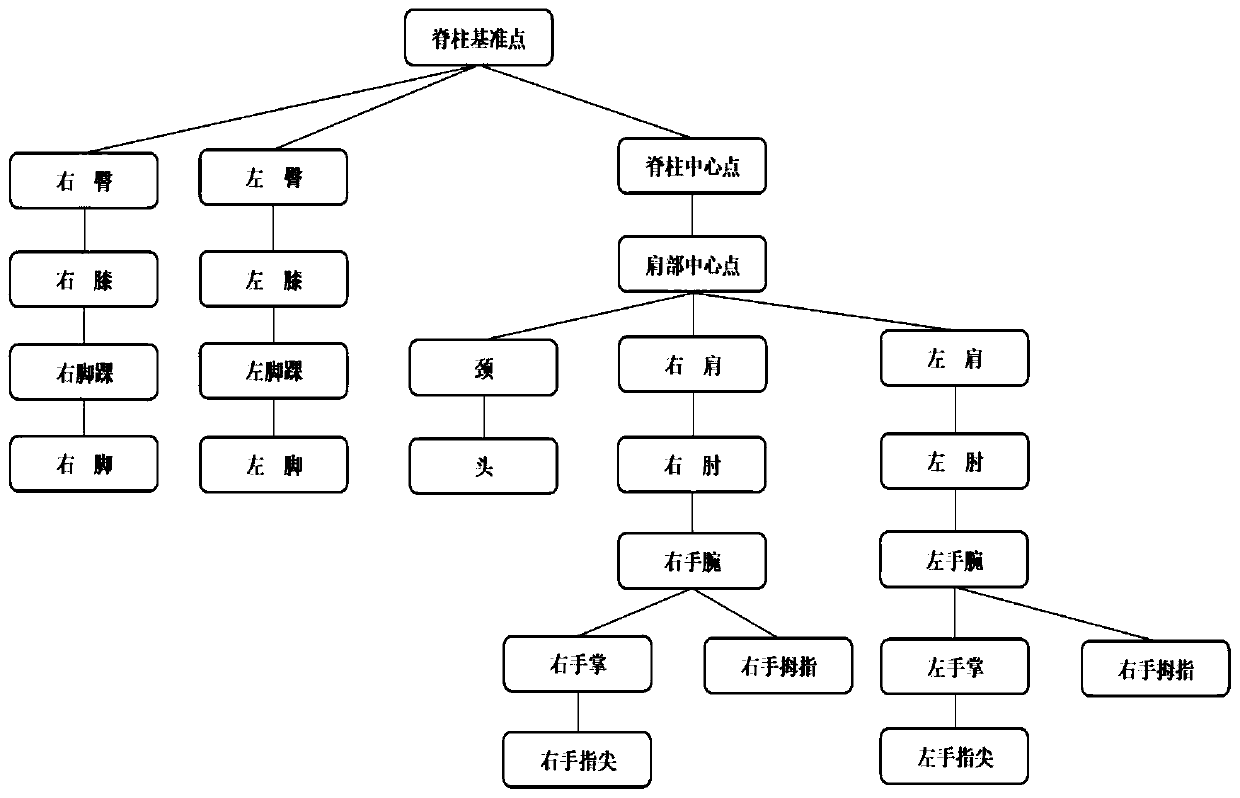


Examples

Embodiment 1

[0065] This embodiment is evaluated on the public UTKinect-Action dataset, and the results are compared with those of the HO3DJ method and the CRF method.

[0066] The UTKinect-Action dataset contains data for 10 actions captured by a Kinect sensor. Each action is performed by 10 people, and each person repeats each action twice, giving 199 valid action sequences in total. Actions in the UTKinect-Action dataset are characterized by high clustering and viewpoint variation.

[0067] The comparison between this method and the other methods is shown in Table 1. The recognition accuracy of this method is 4.89% higher than that of the HO3DJ method and 4.09% higher than that of the CRF method.
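A per-action comparison of the kind summarized in Table 1 can be sketched as follows. This is only an illustration under stated assumptions: the function names, the toy labels, and the choice to compare methods by the mean of per-action accuracies are hypothetical and are not taken from the patent, which does not state how its 4.89% and 4.09% figures were aggregated.

```python
from collections import defaultdict


def per_action_accuracy(true_labels, predicted_labels):
    """Return {action: fraction of its sequences classified correctly}."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(true_labels, predicted_labels):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    return {action: correct[action] / total[action] for action in total}


def mean_accuracy(per_action):
    """Average the per-action accuracies (an assumed aggregation rule)."""
    return sum(per_action.values()) / len(per_action)


# Toy labels invented for illustration only; these are NOT patent results.
truth = ["walk", "walk", "sit", "sit", "wave", "wave"]
method_a = ["walk", "walk", "sit", "walk", "wave", "wave"]
method_b = ["walk", "sit", "sit", "walk", "wave", "wave"]

acc_a = per_action_accuracy(truth, method_a)
acc_b = per_action_accuracy(truth, method_b)

# Difference between the two methods, expressed in percentage points.
gap_points = 100 * (mean_accuracy(acc_a) - mean_accuracy(acc_b))

for action in acc_a:
    print(f"{action}: A={acc_a[action]:.2%}  B={acc_b[action]:.2%}")
print(f"Mean accuracy gap: {gap_points:.2f} percentage points")
```

Reporting the gap in percentage points keeps it distinct from a relative improvement, which would divide the difference by the baseline accuracy.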

[0068] Table 1 Comparison of recognition accuracy of each action in UTKinect-Action dataset

[0069]

Embodiment 2

[0071] Table 2 Action modes and recognition rates of each action in the MSR Action3D dataset

[0072]

[0073] This embodiment is evaluated on the MSR Action3D dataset, and the results are compared with those of the HO3DJ, Profile HMM and Eigenjoints methods.

[0074] The MSR Action3D dataset contains 20 actions performed by 10 people, with each action repeated 3 times, for a total of 557 action sequences. The 20 actions are divided into three subsets, AS1, AS2 and AS3, as shown in Table 2. Each subset has 8 actions, so some actions belong to more than one subset. Among them, AS1 and AS2 are similar, and AS3 is relatively complex. Table 3 shows the comparison between the proposed method and other methods on the MSR Action3D dataset. The experimental results show that, on the MSR Action3D dataset, the action recognition rate is improved compared with the traditional Eigenjoints action recognition method. 9%, the act...
