Image-text mutual retrieval method based on bidirectional attention

A technique involving attention and text, applied in the field of image processing, that achieves accurate construction.

Embodiment Construction

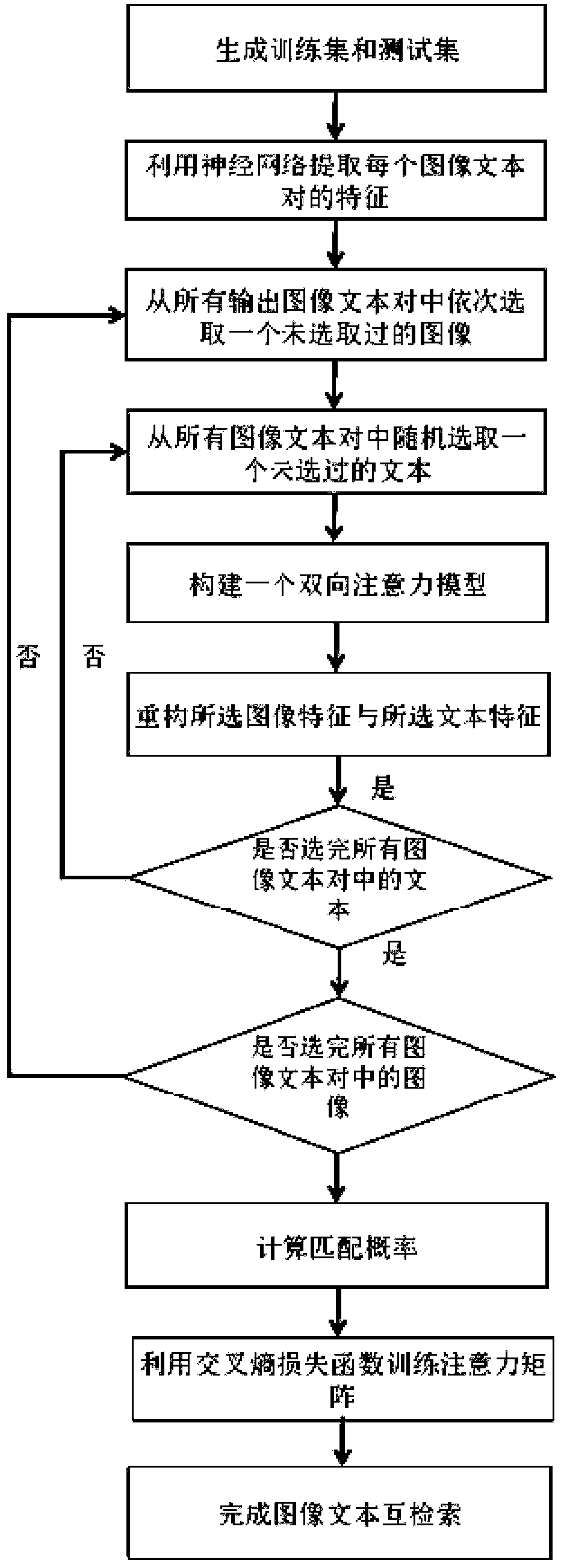

[0045] The present invention will be described in further detail below in conjunction with the accompanying drawings.

[0046] Referring to the attached Figure 1, the steps of the present invention are described in further detail.

[0047] Step 1: generate the training set and the test set.

[0048] A total of 25,000 image-text pairs were randomly selected from the Flickr30k dataset: 15,000 pairs form the training set and the remaining 10,000 pairs form the test set.
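The random split in Step 1 can be sketched as follows. This is a minimal illustration under stated assumptions, not the patent's own procedure: the function name `split_flickr30k_pairs`, the fixed seed, and the representation of each pair as an `(image_file, caption)` tuple are hypothetical; the source only fixes the 25,000 / 15,000 / 10,000 counts.

```python
import random


def split_flickr30k_pairs(pairs, n_train=15_000, n_test=10_000, seed=0):
    """Randomly draw n_train + n_test image-text pairs and split them.

    Hypothetical helper: `pairs` is assumed to be a list of
    (image_file, caption) tuples loaded from Flickr30k annotations.
    """
    if n_train + n_test > len(pairs):
        raise ValueError("not enough image-text pairs to sample from")
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    # Sampling without replacement keeps the two sets disjoint.
    selected = rng.sample(pairs, n_train + n_test)
    return selected[:n_train], selected[n_train:]


if __name__ == "__main__":
    # Placeholder pairs standing in for real Flickr30k annotations (hypothetical).
    pairs = [(f"img_{i}.jpg", f"caption {i}") for i in range(30_000)]
    train_set, test_set = split_flickr30k_pairs(pairs)
    print(len(train_set), len(test_set))  # 15000 10000
```

Drawing all 25,000 pairs in a single `sample` call and then slicing guarantees that no pair appears in both the training set and the test set.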

[0049] Step 2: use a neural network to extract the features of each image-text pair.

[0050] Build a 14-layer neural network, then set and train the parameters of each layer.

[0051] The structure of the neural network is as follows: first convolutional layer → first pooling layer → second convolutional layer → second pooling layer → third convolutional layer → third pooling layer → fourth convolutional layer → fourth pooling layer → fifth convolutional layer → fifth p...
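To make the visible part of this stack concrete, the following sketch traces feature-map shapes through five alternating convolution and pooling stages. Every hyperparameter is a hypothetical, VGG-style choice rather than a value from the patent: a 224 x 224 input, 3 x 3 convolutions with stride 1 and padding 1, 2 x 2 pooling with stride 2, and the channel counts in `channels`. The truncated fifth "p..." layer is assumed to be a pooling layer following the visible pattern; the remaining layers of the 14-layer network are cut off in the source and are not modeled.

```python
def conv_out(size, kernel=3, stride=1, padding=1):
    """Output side length of a 2-D convolution on a square input (assumed 3x3, pad 1)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window=2, stride=2):
    """Output side length of an unpadded pooling layer (assumed 2x2, stride 2)."""
    return (size - window) // stride + 1


def trace_stages(input_size=224, channels=(64, 128, 256, 512, 512)):
    """Trace (layer, channels, height, width) through alternating conv -> pool stages.

    Input size and channel counts are hypothetical; only the conv/pool
    alternation comes from the described structure.
    """
    shapes = []
    size = input_size
    for i, out_ch in enumerate(channels, start=1):
        size = conv_out(size)  # padding 1 with a 3x3 kernel keeps the size
        shapes.append((f"conv{i}", out_ch, size, size))
        size = pool_out(size)  # 2x2 pooling halves the spatial size
        shapes.append((f"pool{i}", out_ch, size, size))
    return shapes


if __name__ == "__main__":
    for layer in trace_stages():
        print(layer)
```

Under these assumptions each conv/pool pair halves the spatial resolution, so five stages reduce a 224 x 224 input to a 7 x 7 map.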
