Language model training method and device, and language model construction method and device

This language model training technology, applied in the field of input methods, addresses the exposure of users' private information and the difficulty of protecting user privacy. Its effects are that less data needs to be uploaded, the risk of exposing users' private information is reduced, and upload efficiency is improved.

Embodiment Construction

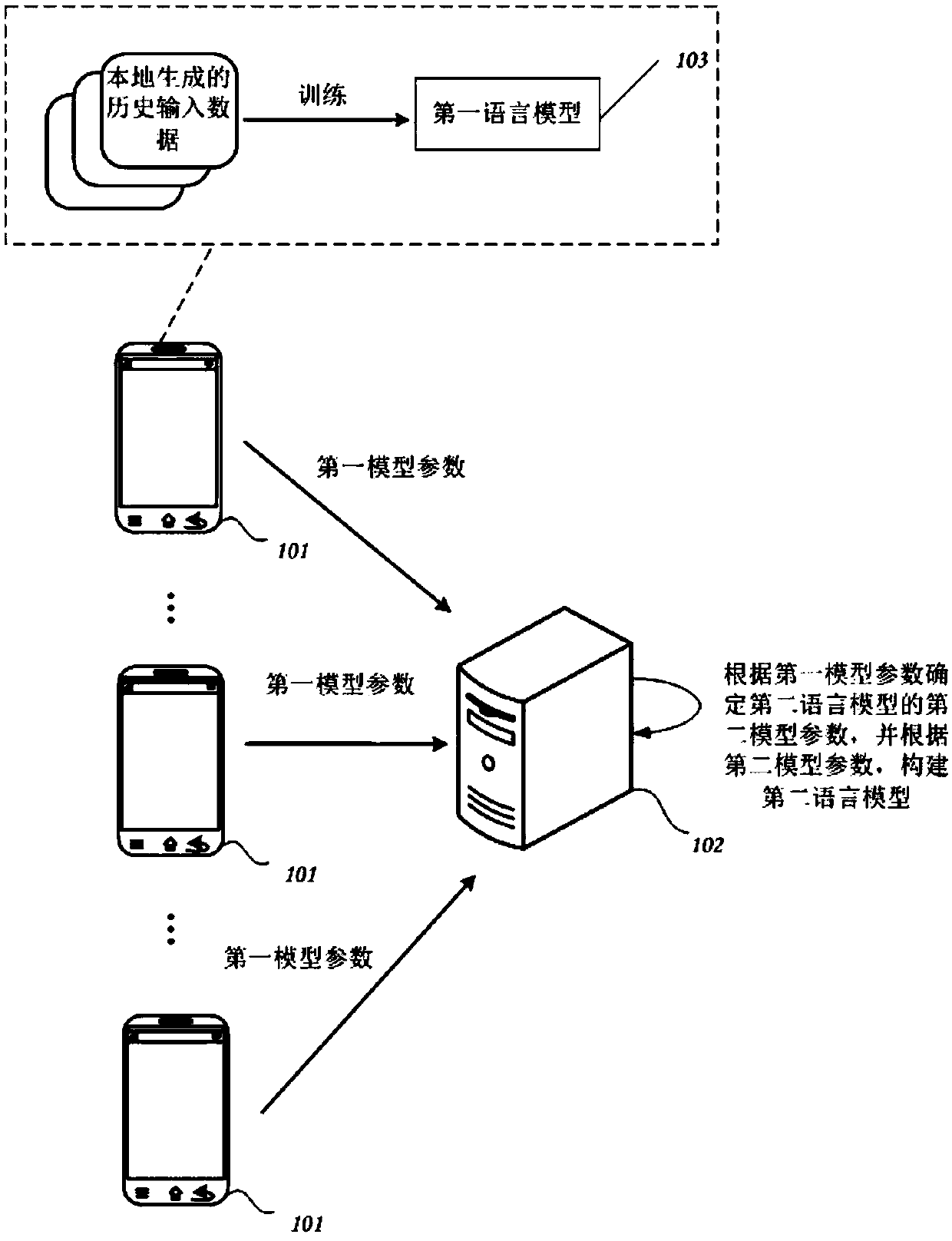

[0058] Embodiments of the present application are described below in conjunction with the accompanying drawings.

[0059] In traditional language model training methods, the input method trains the language model on the network side using a large amount of users' historical input data. This historical input data is generally acquired either by users uploading it or by collecting it while users use the input method normally. Either way creates a risk of exposing users' private information, such as the specific content they type, which makes it difficult to protect user privacy while the language model is being trained.

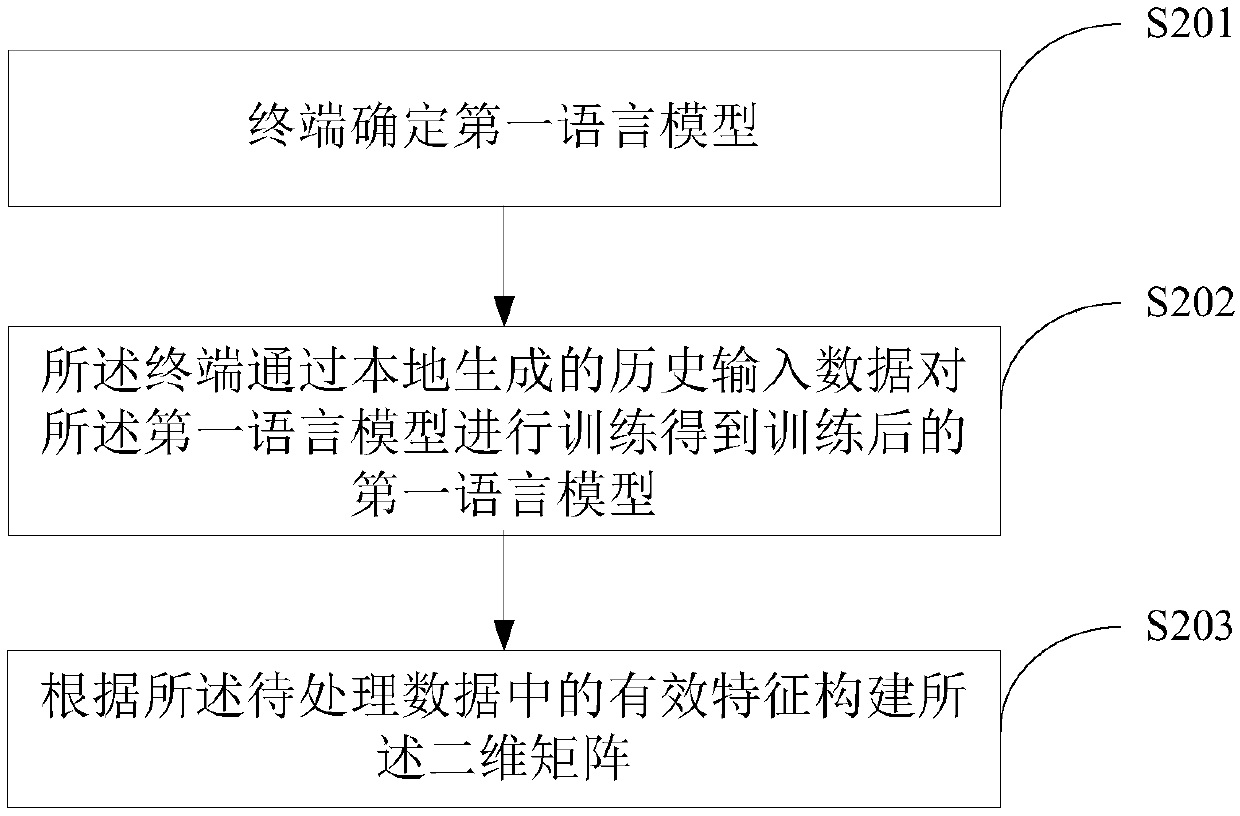

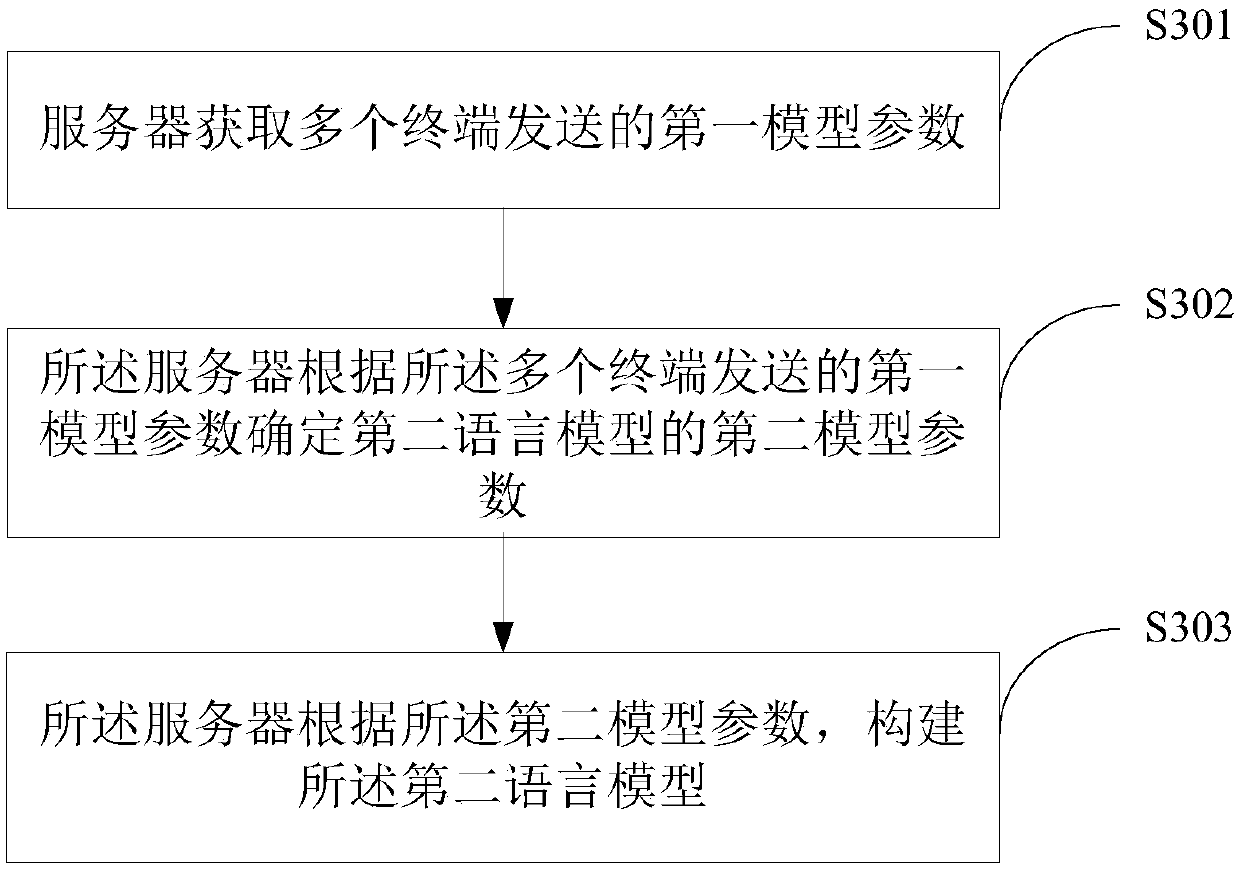

[0060] To this end, an embodiment of the present application provides a language model training method. In this method, after the terminal determines the first language model to be trained, it can train the first language model on locally generated historical input data, so as to improve the model parameters of the f...
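The text is truncated and does not say what kind of model the first language model is or what the terminal sends afterwards. The following is only a minimal sketch of on-device training under stated assumptions: it treats the first language model as a simple bigram count model (an assumption, not the patent's model) and has the terminal turn its own input history into aggregated count updates, so the raw sentences never leave the device. The names `local_update`, `apply_update` and `bigram_prob` are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical representation: the "first language model" as bigram counts.
Bigram = Tuple[str, str]


def local_update(history: Iterable[List[str]]) -> Dict[Bigram, int]:
    """Count bigrams in locally generated input history (runs on the terminal).

    Only these aggregated counts are returned; the raw sentences stay local.
    """
    update: Counter = Counter()
    for tokens in history:
        padded = ["<s>"] + tokens + ["</s>"]  # sentence boundary markers
        for prev, cur in zip(padded, padded[1:]):
            update[(prev, cur)] += 1
    return dict(update)


def apply_update(model: Dict[Bigram, int], update: Dict[Bigram, int]) -> Dict[Bigram, int]:
    """Return a new model with the update's counts added; inputs are not mutated."""
    merged = Counter(model)
    merged.update(update)  # Counter.update adds counts rather than replacing them
    return dict(merged)


def bigram_prob(model: Dict[Bigram, int], prev: str, cur: str) -> float:
    """Maximum-likelihood estimate of P(cur | prev); 0.0 if prev was never seen."""
    total = sum(c for (p, _), c in model.items() if p == prev)
    return model.get((prev, cur), 0) / total if total else 0.0
```

For example, starting from base counts `{("<s>", "hello"): 2, ("hello", "world"): 1}` and a local history of `[["hello", "there"], ["hello", "world"]]`, applying the local update gives `("hello", "world")` a count of 2 and `("hello", "there")` a count of 1, so `bigram_prob(new_model, "hello", "world")` is 2/3. Bigram counts were chosen here only because their updates are simply additive; note that such counts can still reveal typed phrases, so this sketch by itself is not a formal privacy guarantee.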
