Attention mechanism-based image description generation method

An attention-based image description technology, applicable to computer components, biological neural network models, instruments, and related fields, that addresses problems such as error accumulation, difficulty in focusing on target objects, and loss of information.

Embodiment Construction

[0077] The technical solutions of the present invention will be further described below in conjunction with the accompanying drawings and embodiments.

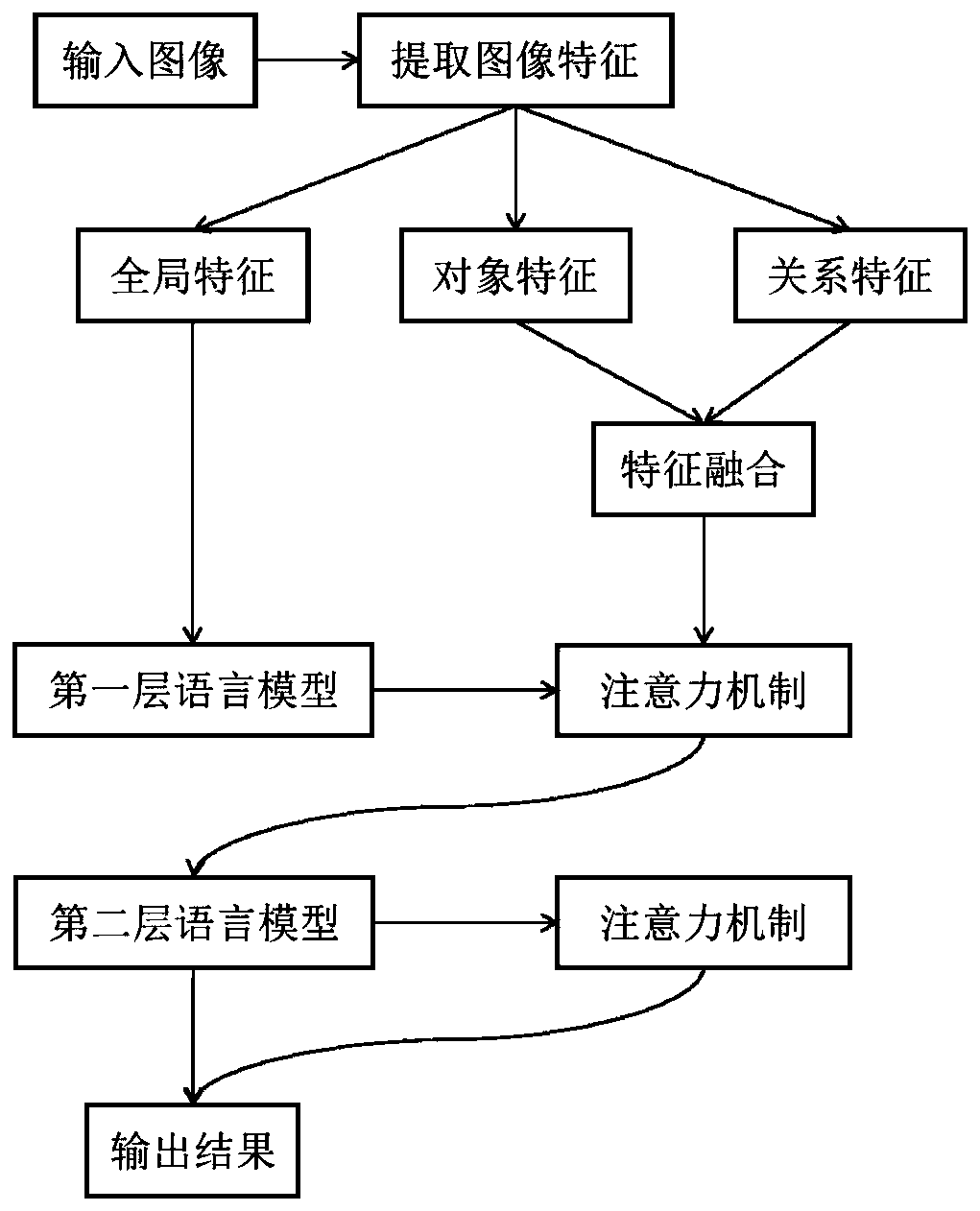

[0078] As shown in Figure 1, an image description generation method based on an attention mechanism includes the following steps:

[0079] Step 1: Extract words from the annotated sentences of the dataset to build a vocabulary.

[0080] The vocabulary in Step 1 is obtained by counting the number of occurrences of each word in the text descriptions of the MS COCO dataset and adding to the vocabulary only those words that appear more than five times. The resulting vocabulary for the MS COCO dataset contains 9,487 words.
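The frequency filter described in Step 1 can be illustrated with a minimal sketch. The function name `build_vocabulary`, the lowercasing, and the whitespace tokenization are assumptions made for illustration only; the source does not specify how the captions are tokenized, and the toy captions below are not MS COCO data.

```python
from collections import Counter


def build_vocabulary(captions, min_count=5):
    """Return the sorted list of words that occur more than `min_count` times.

    `captions` is an iterable of caption strings. Lowercasing and splitting
    on whitespace are illustrative assumptions, not the patent's tokenizer.
    """
    counts = Counter()
    for caption in captions:
        counts.update(caption.lower().split())
    # Keep only words appearing strictly more than `min_count` times,
    # matching the "more than five times" rule in the text.
    return sorted(word for word, count in counts.items() if count > min_count)


# Toy captions standing in for the dataset's annotated sentences.
captions = ["a dog runs on the grass"] * 6 + ["a cat sleeps"] * 2
vocab = build_vocabulary(captions)

# Map each retained word to an integer index for later use by a decoder.
word_to_index = {word: index for index, word in enumerate(vocab)}
```

In this toy example, "cat" and "sleeps" occur only twice and are therefore excluded, while the words of the repeated first caption are retained.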

[0081] Step 2: Use the ResNet101 model as the initial CNN model and pre-train its parameters on the ImageNet dataset. The pre-trained ResNet101 is used on its own to extract the global features of the image, and the pre-trained ResNet101 is then used to replace the CNN extraction in the Faster R-CNN a...
