Conference video splitting method and system

A conference video technology in the field of video processing. It addresses problems such as differences between conferences, the heavy workload of building a human voice fingerprint database, and the large amount of manpower required, and it improves accuracy, ensures integrity, and reduces the amount of calculation.

Examples

Embodiment 1

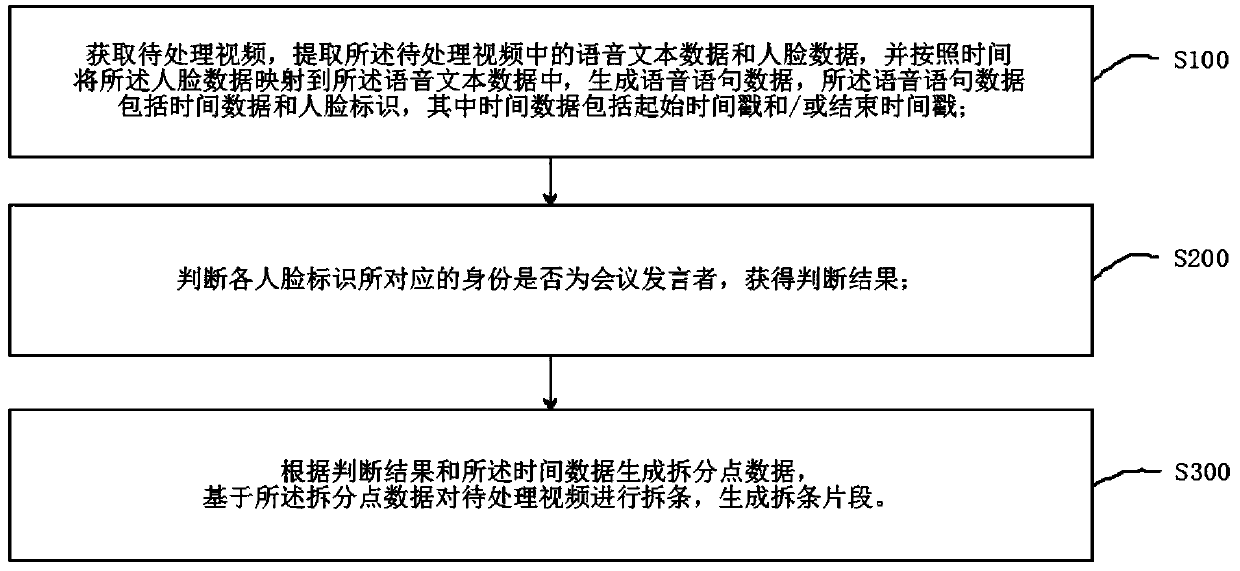

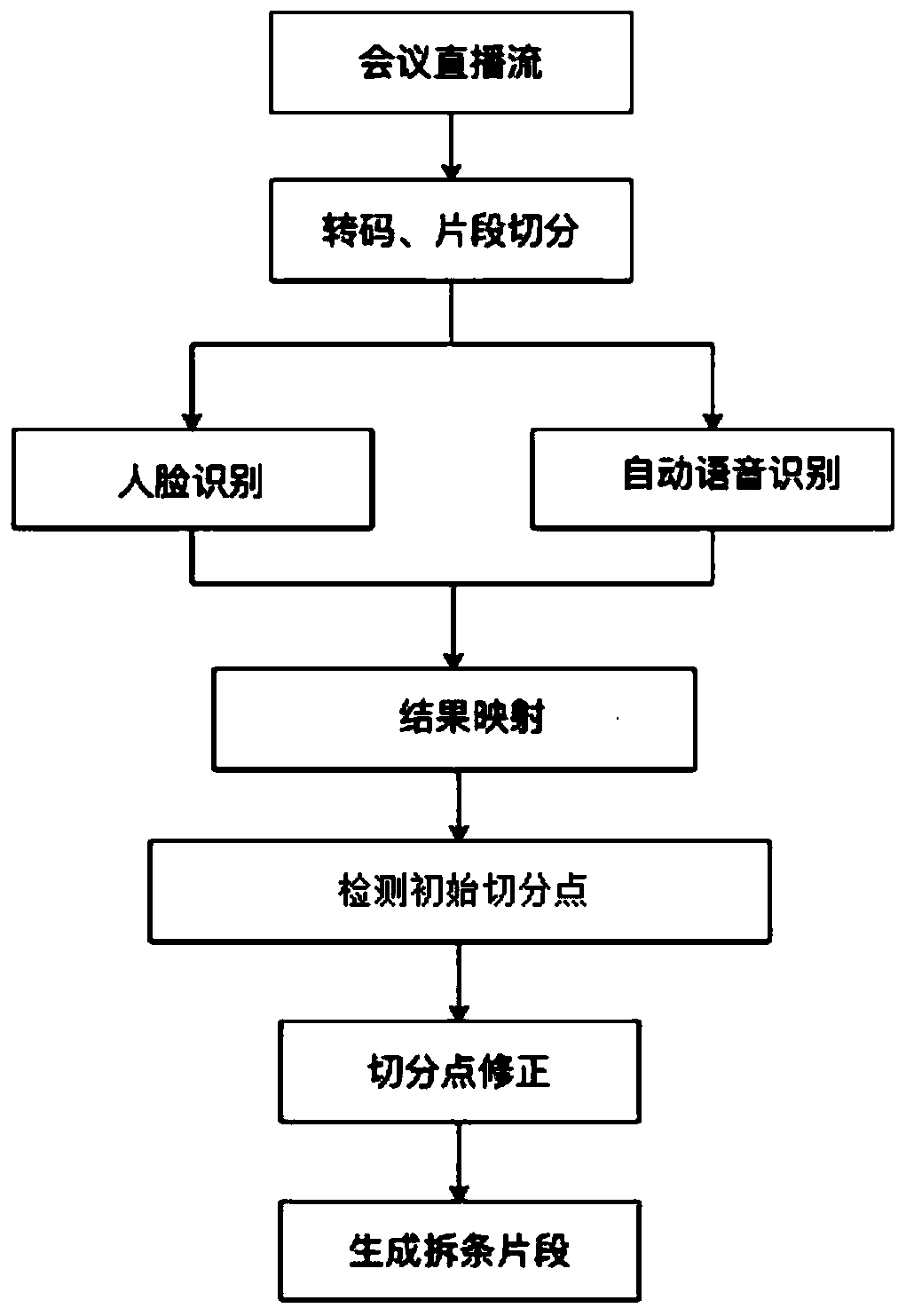

[0054] Embodiment 1. A method for splitting conference videos, as shown in Figure 1, includes the following steps:

[0055] S100. Acquire the video to be processed, extract the voice text data and face data from it, and map the face data to the voice text data by time to generate voice sentence data. The voice sentence data includes time data and a face identifier, where the time data is the start timestamp;

[0056] The start time stamp is the start time of the sentence corresponding to the voice sentence data.
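The time-based mapping in S100 can be illustrated with a minimal Python sketch. The source does not state how a face is matched to a sentence, so this sketch assumes that speech recognition yields (start, end, text) sentences, that face recognition yields (timestamp, face_id) detections, and that each sentence takes the face identifier detected most often within its time span. The names `VoiceSentence` and `map_faces_to_sentences` are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class VoiceSentence:
    start: float            # start timestamp of the sentence in seconds (the time data)
    text: str
    face_id: Optional[str]  # face identifier mapped to the sentence, None if no face seen


def map_faces_to_sentences(
    sentences: List[Tuple[float, float, str]],  # (start, end, text) from speech recognition
    detections: List[Tuple[float, str]],        # (timestamp, face_id) from face recognition
) -> List[VoiceSentence]:
    """Attach to each sentence the face identifier detected most often during it."""
    result = []
    for start, end, text in sentences:
        # Count only detections whose timestamp falls inside this sentence.
        counts = Counter(fid for t, fid in detections if start <= t <= end)
        face_id = counts.most_common(1)[0][0] if counts else None
        result.append(VoiceSentence(start, text, face_id))
    return result
```

For example, with detections of face A at 1.0 s and 2.0 s and face B at 3.0 s, a sentence spanning 0.0 s to 4.2 s is mapped to face A. A majority vote is used here only because it tolerates occasional detections of listeners during a sentence.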

[0057] S200. Judge whether the identity corresponding to each face identifier is a conference speaker, and obtain a judgment result;

[0058] S300. Generate split point data according to the judgment result and the time data, and split the video to be processed based on the split point data to generate split segments.
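Steps S200 and S300 can be sketched as follows. The judgment result is modelled as a hypothetical dictionary mapping each face identifier to whether it belongs to a conference speaker, and the sketch assumes that a split point is placed at the start timestamp of each sentence where a different conference speaker begins talking, while sentences from non-speakers do not open a new segment. This is one possible reading, not the rule the patent specifies.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class VoiceSentence:
    start: float            # start timestamp in seconds (time data of Embodiment 1)
    face_id: Optional[str]  # face identifier mapped to the sentence


def split_points(sentences: List[VoiceSentence], is_speaker: Dict[str, bool]) -> List[float]:
    """Return the start timestamps at which a different conference speaker begins talking."""
    points: List[float] = []
    current: Optional[str] = None  # speaker of the segment currently open
    for s in sorted(sentences, key=lambda x: x.start):
        if s.face_id is not None and is_speaker.get(s.face_id, False) and s.face_id != current:
            points.append(s.start)
            current = s.face_id
    return points


def segments(points: List[float], duration: float) -> List[Tuple[float, float]]:
    """Turn split points into consecutive (start, end) segments ending at the video's end."""
    bounds = points + [duration]
    return [(bounds[i], bounds[i + 1]) for i in range(len(points))]
```

In this sketch, footage before the first split point is not assigned to any segment; an implementation could instead keep it as an opening segment. Cutting the video file at the returned timestamps is left to a video tool.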

[0059] In this embodiment, the face data and the voice text data are mapped according to time, so...

Embodiment 2

[0091] Embodiment 2. Change the time data in Embodiment 1 from "start time stamp" to "end time stamp"; everything else is the same as in Embodiment 1.

[0092] In this embodiment, the end time stamp is the end time of the sentence corresponding to the voice sentence data. The end time stamp of the voice sentence data preceding the sentence is then used as the split point to generate the split point data.
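A minimal sketch of Embodiment 2, assuming chronologically ordered sentences and a hypothetical dictionary recording whether each face identifier belongs to a conference speaker. Whenever a different conference speaker begins, the end timestamp of the preceding sentence becomes the split point. Falling back to 0.0 when the very first sentence starts a turn (it has no predecessor) is an additional assumption of this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class VoiceSentence:
    end: float              # end timestamp in seconds (time data of Embodiment 2)
    face_id: Optional[str]  # face identifier mapped to the sentence


def split_points_by_end(sentences: List[VoiceSentence], is_speaker: Dict[str, bool]) -> List[float]:
    """Use the end timestamp of the sentence before each speaker change as a split point."""
    points: List[float] = []
    current: Optional[str] = None  # speaker of the segment currently open
    for i, s in enumerate(sentences):  # sentences assumed to be in chronological order
        if s.face_id is not None and is_speaker.get(s.face_id, False) and s.face_id != current:
            # The first sentence has no predecessor, so fall back to the start of the video.
            points.append(sentences[i - 1].end if i > 0 else 0.0)
            current = s.face_id
    return points
```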

Embodiment 3

[0093] Embodiment 3. Change the time data in Embodiment 1 from "start time stamp" to "start time stamp and end time stamp". The specific steps for generating the split point data are:

[0094] Take the start time stamp of the sentence as the first start split point, and the end time stamp of the voice sentence data preceding the sentence as the first end split point; then generate the split point data from the first start split point and the first end split point.

[0095] Because there is a pause between consecutive sentences, the first start split point and first end split point used in this embodiment keep the silent gap out of the beginning and end of the resulting split segments, improving the user's viewing experience.
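Embodiment 3 can be sketched as producing one (start, end) pair per speaker turn: each segment opens at the start timestamp of the sentence where a new conference speaker begins (the first start split point) and closes at the end timestamp of the sentence before the next speaker change (the first end split point), so the pause between those two sentences falls outside both segments. The data layout, the hypothetical speaker dictionary, and closing the last segment at the end of the final sentence are assumptions of this sketch.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass
class VoiceSentence:
    start: float            # start timestamp in seconds
    end: float              # end timestamp in seconds
    face_id: Optional[str]  # face identifier mapped to the sentence


def split_segments(sentences: List[VoiceSentence], is_speaker: Dict[str, bool]) -> List[Tuple[float, float]]:
    """Return (first start split point, first end split point) pairs, one per speaker turn."""
    segs: List[Tuple[float, float]] = []
    seg_start: Optional[float] = None  # start of the segment currently open
    current: Optional[str] = None      # speaker of the segment currently open
    for i, s in enumerate(sentences):  # sentences assumed to be in chronological order
        if s.face_id is not None and is_speaker.get(s.face_id, False) and s.face_id != current:
            if seg_start is not None:
                # Close the open segment at the end of the sentence just before this one.
                segs.append((seg_start, sentences[i - 1].end))
            seg_start = s.start  # open the new segment at this sentence's start
            current = s.face_id
    if seg_start is not None:
        # Assumption: the last segment ends with the final sentence.
        segs.append((seg_start, sentences[-1].end))
    return segs
```

For sentences from 0.5 s to 4.0 s and from 4.6 s to 9.0 s by speaker A, followed by one from 9.8 s to 13.0 s by speaker B, the sketch yields the segments (0.5, 9.0) and (9.8, 13.0), leaving the pause from 9.0 s to 9.8 s out of both.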
