Parameter communication optimization method for distributed machine learning

A machine learning and parameter communication technology, applied in machine learning, database distribution/replication, instruments, and related fields. It addresses problems such as unbalanced iterative computing load, fault-tolerance amplification, and lost local updates, with the aim of optimizing computing performance on heterogeneous clusters, optimizing training speed, and achieving high accuracy.

Embodiment Construction

[0023] The present invention is further described below with reference to the accompanying drawings and a specific implementation process:

[0024] Step 1 - Set up nodes in a master-slave manner:

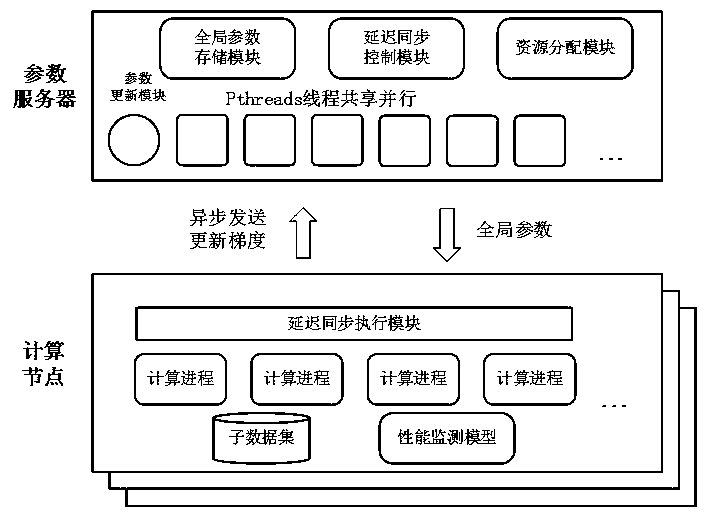

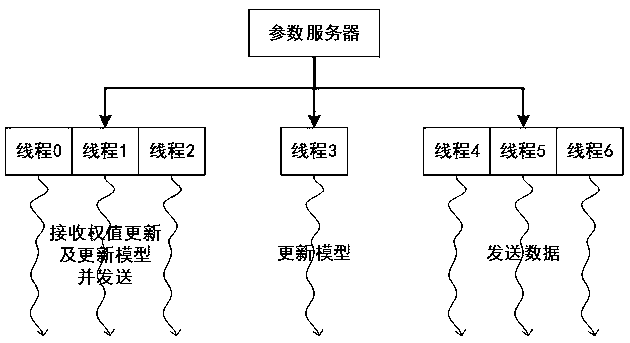

[0025] As shown in Figure 1, the present invention uses one node of the heterogeneous cluster as the parameter server and the remaining nodes as computing nodes, forming a parameter server system. As shown in Figure 2, the parameter server is implemented in a multi-threaded manner: each thread corresponds to one computing node and is used to receive and send the gradients computed by that node, while one additional dedicated thread sums the gradients gathered by those threads, updates the model parameters, and broadcasts them. As shown in Figure 3, a computing node is mainly used to compute and update the model gradients.
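The thread layout described in [0025] can be sketched in a single process as follows. This is only an illustrative sketch, not the patented implementation: the function name `train`, the queue names, the scalar model, the quadratic loss, and the fully synchronous round structure are all assumptions introduced here, and in-process queues stand in for the network links between nodes.

```python
import queue
import threading


def train(targets, rounds=30, lr=0.1):
    """Toy master-slave run: one server thread per node plus one updater thread."""
    n = len(targets)
    to_server = [queue.Queue() for _ in range(n)]  # node i -> its server thread
    to_node = [queue.Queue() for _ in range(n)]    # server thread i -> node i
    gathered = queue.Queue()                       # server threads -> updater
    updated = [queue.Queue() for _ in range(n)]    # updater -> server thread i
    result = {}

    def compute_node(i):
        # Hypothetical computing node: holds one target value as its "data".
        w = 0.0
        for _ in range(rounds):
            grad = 2.0 * (w - targets[i])  # gradient of (w - target)^2
            to_server[i].put(grad)         # send the gradient to the server
            w = to_node[i].get()           # wait for the refreshed parameter

    def server_thread(i):
        # Per-node thread on the parameter server: relays traffic for node i.
        for _ in range(rounds):
            gathered.put(to_server[i].get())  # receive node i's gradient
            to_node[i].put(updated[i].get())  # send back the new parameter

    def updater():
        # Dedicated thread: sums gradients, updates the model, broadcasts it.
        w = 0.0
        for _ in range(rounds):
            total = sum(gathered.get() for _ in range(n))
            w -= lr * total
            for q in updated:
                q.put(w)
        result["w"] = w

    threads = [threading.Thread(target=updater)]
    threads += [threading.Thread(target=server_thread, args=(i,)) for i in range(n)]
    threads += [threading.Thread(target=compute_node, args=(i,)) for i in range(n)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return result["w"]


if __name__ == "__main__":
    print(train([1.0, 2.0, 6.0]))
```

With three nodes and a step size of 0.1, the summed-gradient update contracts the error by a constant factor each round, so the shared parameter approaches the mean of the targets. The updater waits for every node in each round, which is a simplifying assumption made for this sketch rather than something stated in [0025].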

[0026] Step 2 - Adopt a data parallel strategy:

[0027] The present invention constructs multiple copies of the network model to be ...
