Method and device

A technology relating to moving direction and sensors, applied in the fields of self-driving vehicles and virtual driving, that can solve the problems of heavy consumption of computing resources and communication resources in self-driving vehicles.

Embodiment approach

[0059] To enable those skilled in the art to easily implement the present invention, exemplary embodiments of the present invention are described in detail below with reference to the accompanying drawings.

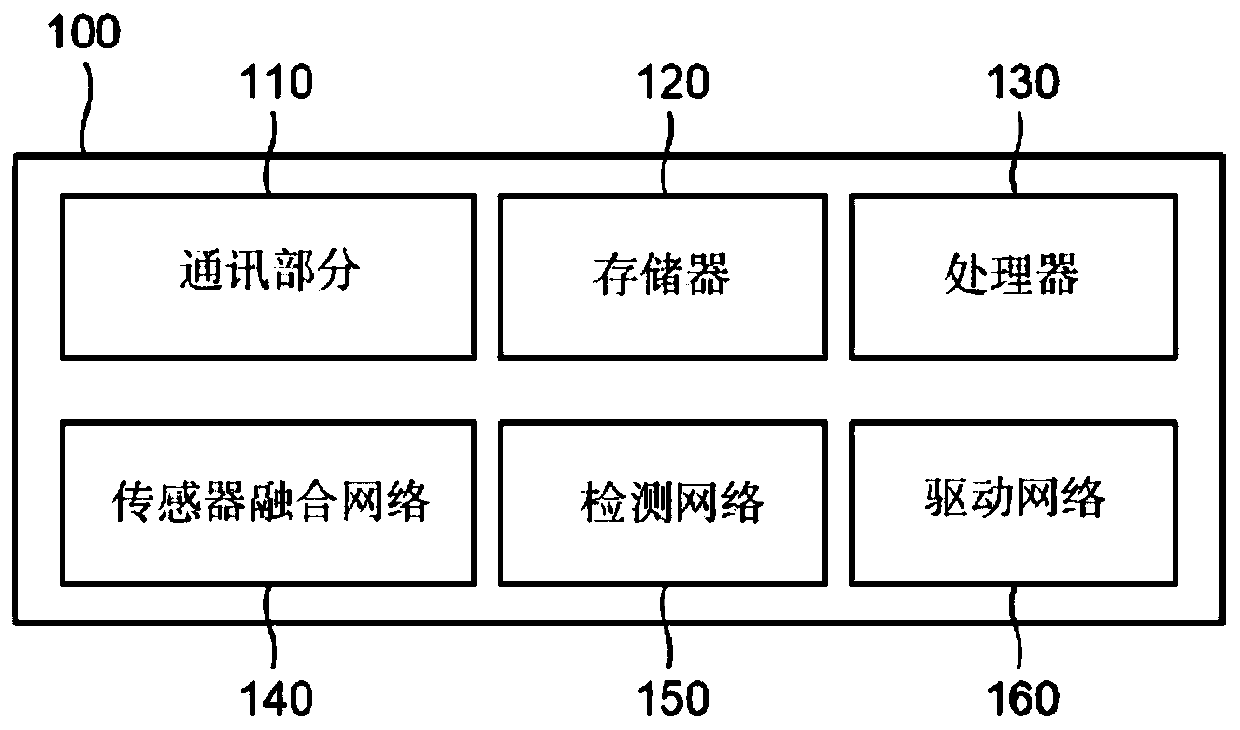

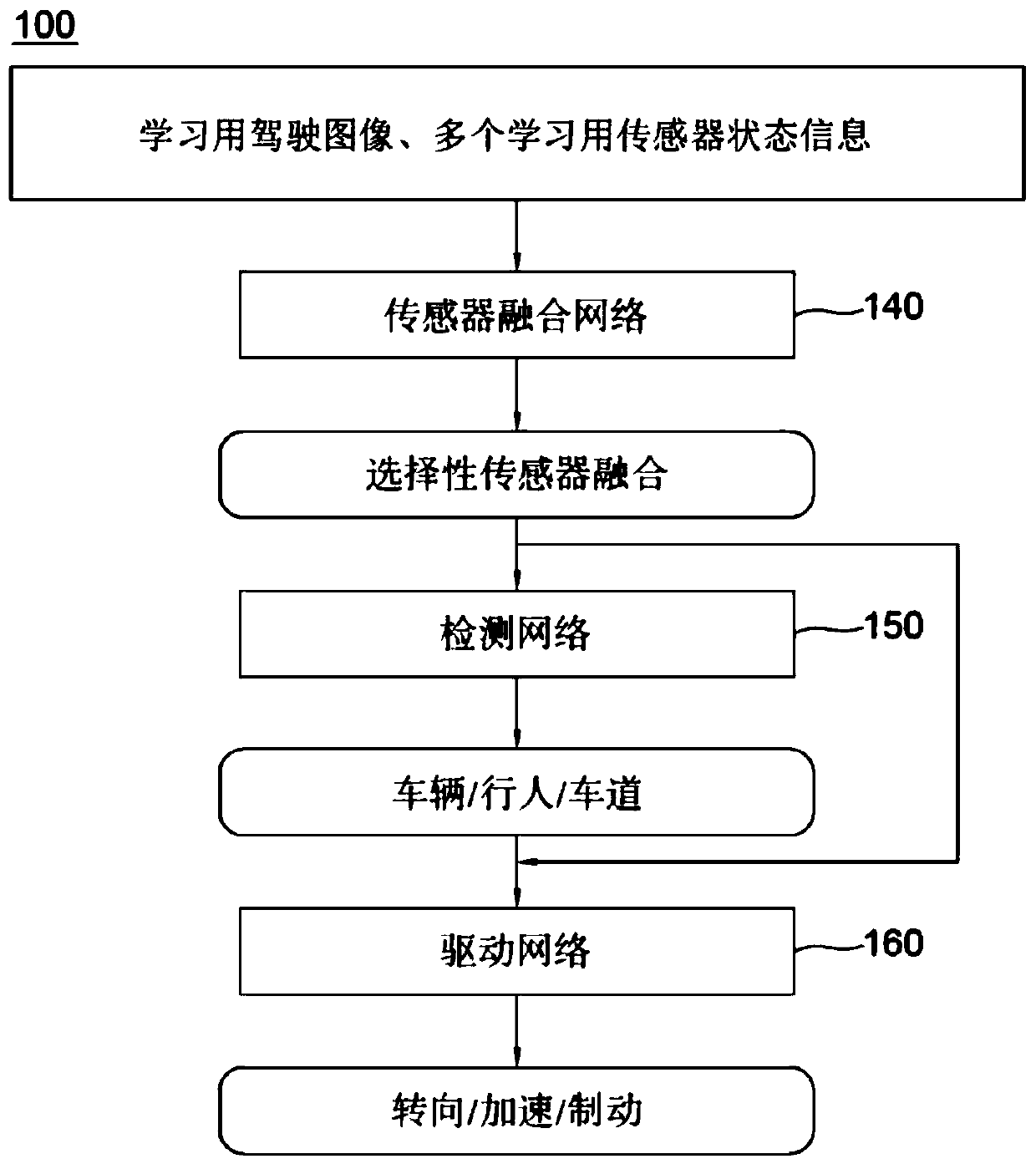

[0060] Figure 1 schematically shows a reinforcement learning-based learning device for learning a sensor fusion network for multi-object sensor fusion in cooperative driving according to an embodiment of the present invention. Referring to Figure 1, the learning device 100 may include: a memory 120 for storing instructions to learn, based on reinforcement learning, a sensor fusion network for multi-object sensor fusion in cooperative driving of an object self-driving vehicle; and a processor 130 for executing processing corresponding to the instructions in the memory 120.

[0061] Specifically, the learning device 100 can generally achieve the desired system performance by using a combination of at least one computing device and at least one computer software, such...
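The arrangement described in [0060], a learning device 100 consisting of a memory 120 that stores instructions and a processor 130 that executes them, can be illustrated with a minimal Python sketch. All class and function names here (`Memory`, `Processor`, `LearningDevice`, `build_learning_device`, `count_step`) are hypothetical and chosen only for illustration; the text does not specify the actual instructions, so a placeholder step that counts executions stands in for the learning process.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# An "instruction" is modeled here as a function from state to state.
# This is an assumption made for the sketch, not something the text specifies.
Instruction = Callable[[Dict], Dict]


@dataclass
class Memory:
    """Stands in for memory 120: holds the stored instructions."""
    instructions: List[Instruction] = field(default_factory=list)


@dataclass
class Processor:
    """Stands in for processor 130: runs the instructions held in memory."""
    memory: Memory

    def run(self, state: Dict) -> Dict:
        # Execute each stored instruction in order, passing the state along.
        for instruction in self.memory.instructions:
            state = instruction(state)
        return state


@dataclass
class LearningDevice:
    """Stands in for learning device 100: a memory plus a processor."""
    memory: Memory
    processor: Processor


def build_learning_device(instructions: List[Instruction]) -> LearningDevice:
    # The processor is wired to the same memory object the device owns.
    memory = Memory(list(instructions))
    return LearningDevice(memory=memory, processor=Processor(memory))


def count_step(state: Dict) -> Dict:
    # Hypothetical placeholder for one learning step; it only counts calls.
    return {**state, "steps": state.get("steps", 0) + 1}


device = build_learning_device([count_step, count_step])
result = device.processor.run({})
```

In this sketch the processor reads its instructions from the same `Memory` object the device holds, mirroring the statement that processor 130 executes processing corresponding to the instructions in memory 120.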
