Three-branch network behavior identification method based on multipath space-time feature enhanced fusion

A technique relating to spatio-temporal features and recognition methods, applied in neural learning methods, character and pattern recognition, biological neural network models, and similar fields. It addresses problems such as complex backgrounds and the clothing worn by subjects, in order to increase the utilization of effective information, improve the recognition effect, and maximize feature interaction.



Embodiment Construction

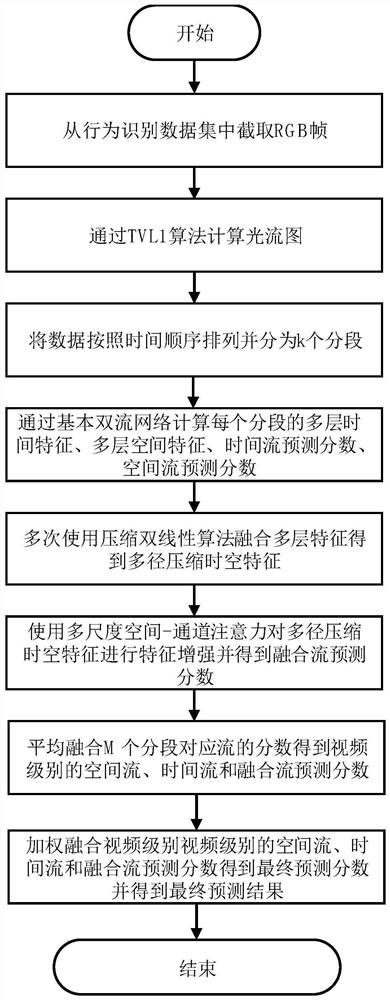

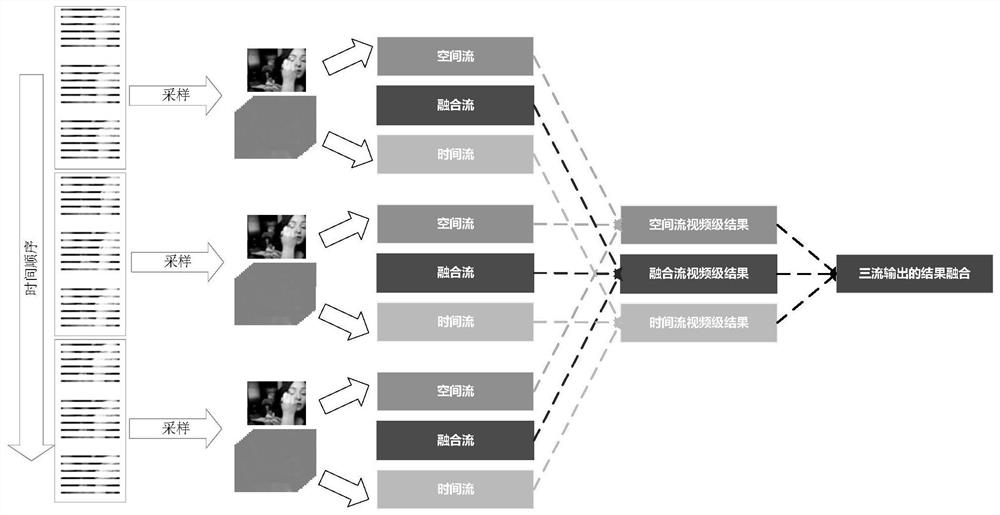

[0035] To better illustrate the present invention, the public behavior dataset UCF101 is taken as an example below. In this example, the entire video is divided into k=3 segments, that is, three time-segment networks are used, and M=3 layers of features are selected in each network. In a specific implementation, k and M can be adjusted according to the actual situation.
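The segmentation step with k=3 can be illustrated with a minimal sketch. It assumes the video is divided into k contiguous, roughly equal segments in chronological order; the function name `split_into_segments` and the equal-length split are illustrative assumptions, not details given in the specification.

```python
def split_into_segments(num_frames: int, k: int = 3) -> list[range]:
    """Divide frame indices 0..num_frames-1 into k contiguous segments.

    Assumption: segments are as equal in length as integer division allows
    and follow chronological order. The specification only states that the
    video is divided into k segments.
    """
    if k <= 0:
        raise ValueError("k must be positive")
    if num_frames < k:
        raise ValueError("need at least one frame per segment")
    # Boundary i is the first frame index of segment i.
    bounds = [i * num_frames // k for i in range(k + 1)]
    return [range(bounds[i], bounds[i + 1]) for i in range(k)]


if __name__ == "__main__":
    for idx, seg in enumerate(split_into_segments(10, k=3)):
        print(f"segment {idx}: frames {seg.start}..{seg.stop - 1}")
```

Changing `k` changes the number of segments, and therefore the number of time-segment networks, without touching the rest of the pipeline.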

[0036] Figure 2 is the overall model diagram after the entire video is divided into three segments in chronological order;

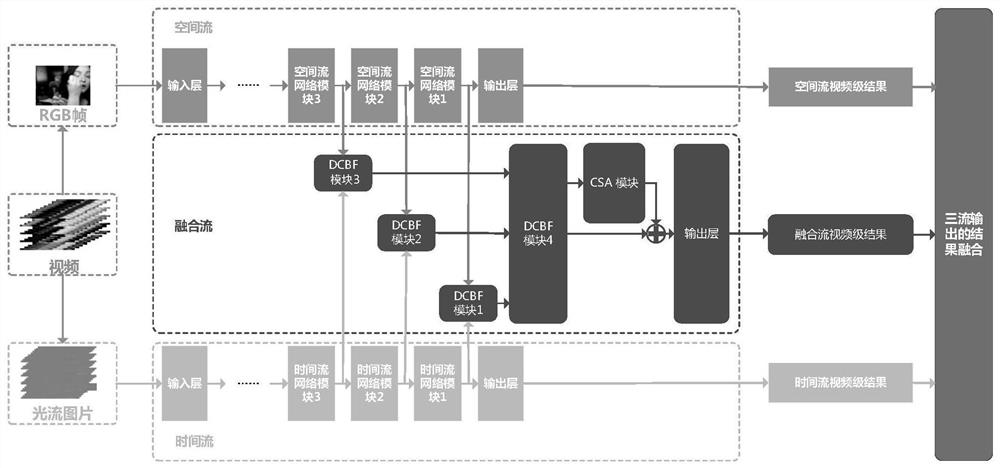

[0037] Figure 3 is the overall model diagram of the present invention (single time segment);

[0038] Figure 3 shows the algorithm model of the present invention for a single time segment; combined with Figure 2, it represents the complete algorithm flow of the present invention. The algorithm takes RGB images and the corresponding consecutive optical flow images as input, wherein the RGB frames obtained from the video are input into the spatial stream network, and...
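As a rough sketch of how the two kinds of input could be paired within one time segment, the following picks one RGB frame index and a window of consecutive optical flow indices. The choice of the central frame, the window length `flow_stack`, and the function name `build_two_stream_indices` are hypothetical; the specification does not state them in the text shown here.

```python
def build_two_stream_indices(segment: range, flow_stack: int = 5) -> tuple[int, list[int]]:
    """Return (rgb_index, flow_indices) for one time segment.

    Assumptions (not from the specification):
    - the RGB frame is the central frame of the segment;
    - flow field i describes motion between frames i and i+1, so the last
      flow field inside the segment has index segment.stop - 2;
    - flow_stack consecutive flow fields are taken, starting at the RGB
      frame where possible and shifted back near the segment end.
    """
    rgb_index = segment.start + len(segment) // 2
    last_flow = segment.stop - 2
    # Shift the window back if it would run past the segment, but never
    # before the first frame of the segment.
    start = max(segment.start, min(rgb_index, last_flow - flow_stack + 1))
    flow_indices = list(range(start, min(start + flow_stack, last_flow + 1)))
    return rgb_index, flow_indices


if __name__ == "__main__":
    rgb, flow = build_two_stream_indices(range(0, 30), flow_stack=5)
    print("RGB frame:", rgb, "flow fields:", flow)
```

For short segments the window is simply truncated, so the returned list may hold fewer than `flow_stack` indices.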
