Structural damage identification method based on echo state and multi-scale convolution joint model

The method combines an echo state network with a multi-scale convolutional model and applies to pattern recognition in signals, character and pattern recognition, and neural learning methods. It addresses problems such as insufficient model depth, vanishing network gradients, and low recognition accuracy, which helps prevent disasters and accidents and ensure safety.

Embodiment Construction

[0040] The present invention will be further described in detail below in conjunction with the accompanying drawings.

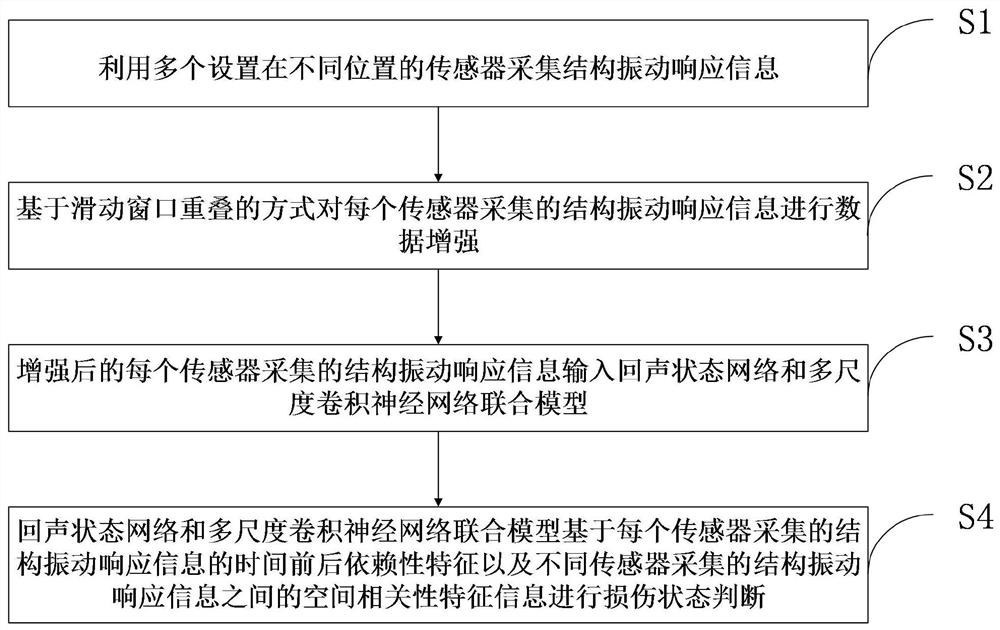

[0041] As shown in Figure 1, the structural damage identification method based on the joint model of echo state and multi-scale convolution disclosed by the present invention includes:

[0042] S1. Use multiple sensors arranged at different positions to collect structural vibration response information;

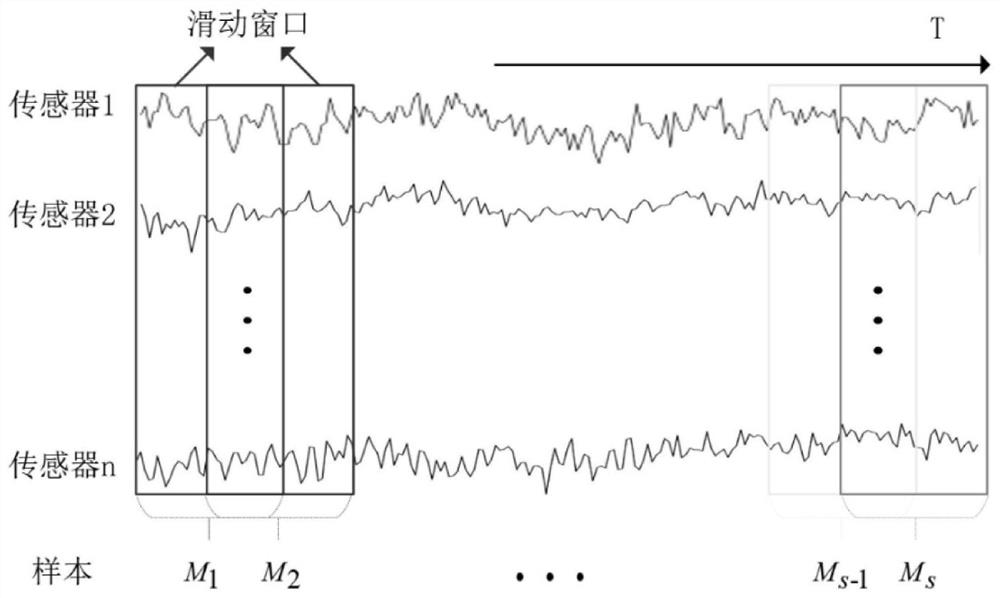

[0043] S2. Perform data augmentation (enhancement) on the structural vibration response information collected by each sensor, using overlapping sliding windows;

[0044] S3. The enhanced structural vibration response information collected by each sensor is input into the joint model of the echo state network and the multi-scale convolutional neural network;

[0045] S4. The joint model of the echo state network and the multi-scale convolutional neural network is based on the time and context dependence characteristics of the structural vibration response ...
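The overlapping sliding-window augmentation named in step S2 can be illustrated with a minimal sketch. The text does not give the window length, the stride, or the data layout, so the function name `sliding_window_augment`, the parameters `window` and `stride`, and the one-list-per-sensor layout are all assumptions made for illustration, not the method as disclosed.

```python
from typing import List, Sequence


def sliding_window_augment(
    signals: Sequence[Sequence[float]], window: int, stride: int
) -> List[List[List[float]]]:
    """Cut multi-sensor records into overlapping segments.

    signals: one sequence of samples per sensor, all of equal length
             (assumed layout; not specified in the source).
    window:  number of samples per segment (hypothetical parameter).
    stride:  step between segment starts; stride < window gives overlap.

    Returns a list of segments; each segment holds one window per sensor.
    """
    if window <= 0 or stride <= 0:
        raise ValueError("window and stride must be positive")
    if not signals:
        raise ValueError("at least one sensor record is required")
    n_samples = len(signals[0])
    if any(len(channel) != n_samples for channel in signals):
        raise ValueError("all sensor records must have the same length")
    if n_samples < window:
        raise ValueError("record is shorter than the window")

    segments = []
    # Slide the window along the time axis; trailing samples that do not
    # fill a complete window are dropped.
    for start in range(0, n_samples - window + 1, stride):
        segments.append([list(ch[start:start + window]) for ch in signals])
    return segments


if __name__ == "__main__":
    # Two hypothetical sensors with 10 samples each.
    records = [list(range(10)), list(range(10, 20))]
    segs = sliding_window_augment(records, window=4, stride=2)
    print(len(segs))   # 4 segments, starting at samples 0, 2, 4, 6
    print(segs[1][0])  # [2, 3, 4, 5]
```

With `stride` smaller than `window`, consecutive segments share `window - stride` samples, so a single record yields more training segments than non-overlapping slicing would. How the segments are then fed to the joint model is not covered by this sketch.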
