Multi-camera cooperative target tracking method based on deep learning

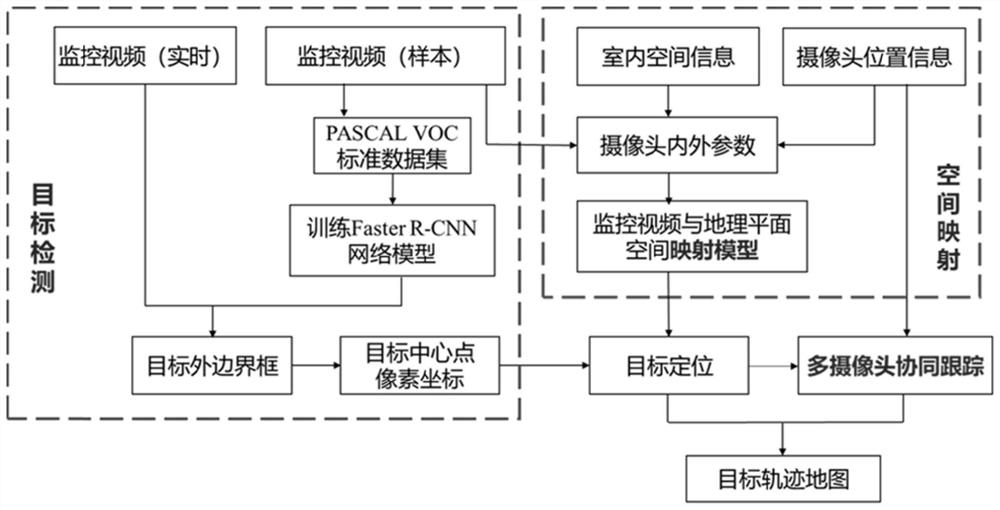

A target tracking and multi-camera technology in the field of deep-learning-based multi-camera cooperative target tracking. It addresses the problems that image information from different cameras is fragmented and difficult to integrate, and it improves the degree of intelligence.



Problems solved by technology

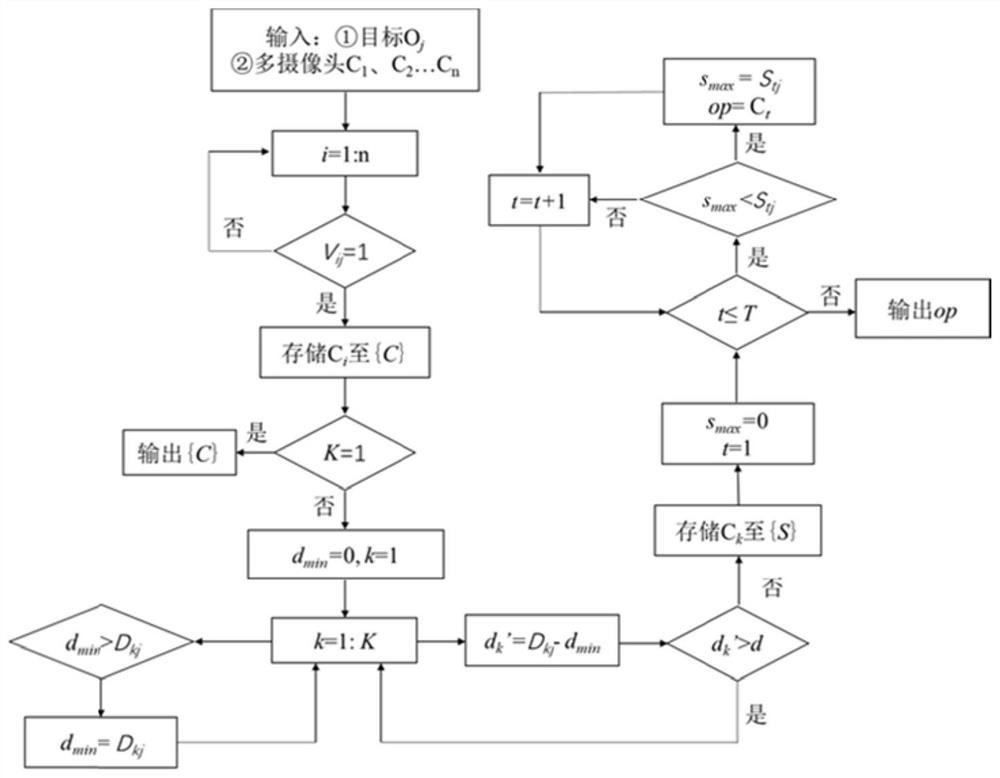


Examples

Embodiment 1

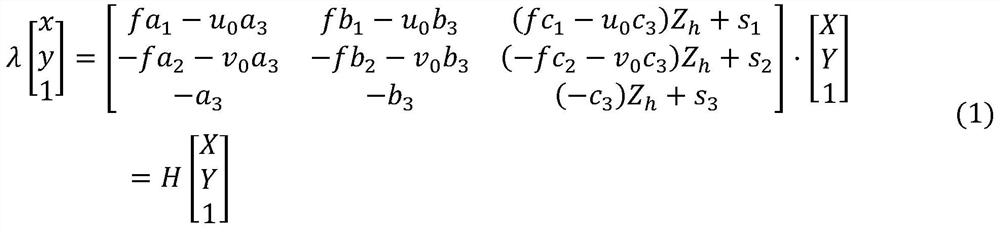

[0033] Step 1: Construct the spatial data model of the video surveillance system

[0034] The spatial data model of the video surveillance environment is built from vector data and consists of three parts: the three-dimensional spatial representation of the surveillance environment, the camera (video) positions and fields of view, and the spatial positions and trajectories of targets:

[0035] 1) Three-dimensional spatial representation of the monitoring environment

[0036] A custom O-XYZ three-dimensional coordinate system is used to represent the three-dimensional monitoring environment. The origin O can be set at a chosen feature point in the monitoring environment, the XOY plane represents the two-dimensional monitoring ground, and the Z axis represents height. Three-dimensional objects in the monitoring environment can be mapped onto the two-dimensional monitoring ground and abstractly expressed as point, line, and surfac...
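The three-part spatial data model and the O-XYZ representation described above can be sketched as plain Python data structures. This is a minimal illustration under stated assumptions, not the patent's implementation: the O-XYZ axes are assumed to be parallel to an external world frame (so converting into O-XYZ is a pure translation by the feature point O), and the names `GroundFeature`, `SpatialDataModel`, `to_local`, `project_to_ground`, the `height` attribute, and the camera and trajectory field layouts are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Vec2 = Tuple[float, float]
Vec3 = Tuple[float, float, float]

# Minimum vertex count per abstract geometry type (hypothetical labels).
MIN_VERTICES = {"point": 1, "line": 2, "surface": 3}


def to_local(world: Vec3, origin: Vec3) -> Vec3:
    """Translate a world coordinate into the custom O-XYZ frame.

    Assumption: O-XYZ axes are parallel to the world axes, so only a
    translation by the chosen feature point O is needed.
    """
    return (world[0] - origin[0], world[1] - origin[1], world[2] - origin[2])


def project_to_ground(p: Vec3) -> Tuple[Vec2, float]:
    """Split an O-XYZ point into its XOY ground position and its Z height."""
    return (p[0], p[1]), p[2]


@dataclass
class GroundFeature:
    """A 3D environment object abstracted as a point, line or surface."""
    name: str
    kind: str             # "point", "line" or "surface"
    vertices: List[Vec2]  # footprint on the XOY ground plane
    height: float = 0.0   # extent along Z (hypothetical attribute)

    def __post_init__(self) -> None:
        if self.kind not in MIN_VERTICES:
            raise ValueError(f"unknown kind: {self.kind!r}")
        if len(self.vertices) < MIN_VERTICES[self.kind]:
            raise ValueError(f"{self.kind} needs at least {MIN_VERTICES[self.kind]} vertices")
        if self.kind == "point" and len(self.vertices) != 1:
            raise ValueError("point needs exactly 1 vertex")

    def footprint_area(self) -> float:
        """Ground area of a surface footprint (shoelace formula); 0 otherwise."""
        if self.kind != "surface":
            return 0.0
        n = len(self.vertices)
        s = 0.0
        for i in range(n):
            x1, y1 = self.vertices[i]
            x2, y2 = self.vertices[(i + 1) % n]
            s += x1 * y2 - x2 * y1
        return abs(s) / 2.0


@dataclass
class SpatialDataModel:
    """Container for the three parts of the model (field layout is assumed)."""
    # Part 1: environment objects abstracted onto the XOY ground plane.
    environment: List[GroundFeature] = field(default_factory=list)
    # Part 2: camera name -> (O-XYZ position, ground polygon of its view).
    cameras: Dict[str, Tuple[Vec3, List[Vec2]]] = field(default_factory=dict)
    # Part 3: target id -> list of (timestamp, ground position).
    trajectories: Dict[str, List[Tuple[float, Vec2]]] = field(default_factory=dict)

    def add_observation(self, target_id: str, t: float, p: Vec3) -> None:
        """Append a target observation, keeping only its ground position."""
        ground, _ = project_to_ground(p)
        self.trajectories.setdefault(target_id, []).append((t, ground))


if __name__ == "__main__":
    origin = (100.0, 50.0, 0.0)  # hypothetical world coordinates of point O
    building = GroundFeature("building", "surface",
                             [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)], height=6.0)
    model = SpatialDataModel(environment=[building])
    model.add_observation("person-1", 0.0, to_local((105.0, 52.0, 1.7), origin))
    print(building.footprint_area(), model.trajectories)
```

Storing each feature's footprint in the XOY plane with its height as a separate attribute mirrors the description's mapping of three-dimensional objects onto the two-dimensional monitoring ground while still retaining Z information.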
