An edge collaborative caching method for load balancing of differentiated services in Internet scenarios

This technology combines differentiated services with cooperative caching and is applied in fields such as transmission systems and electrical components. It addresses problems such as poor user experience and large average access delay for user requests, and its effects are reduced queuing delay, improved user experience, and improved stability.

Embodiment 1

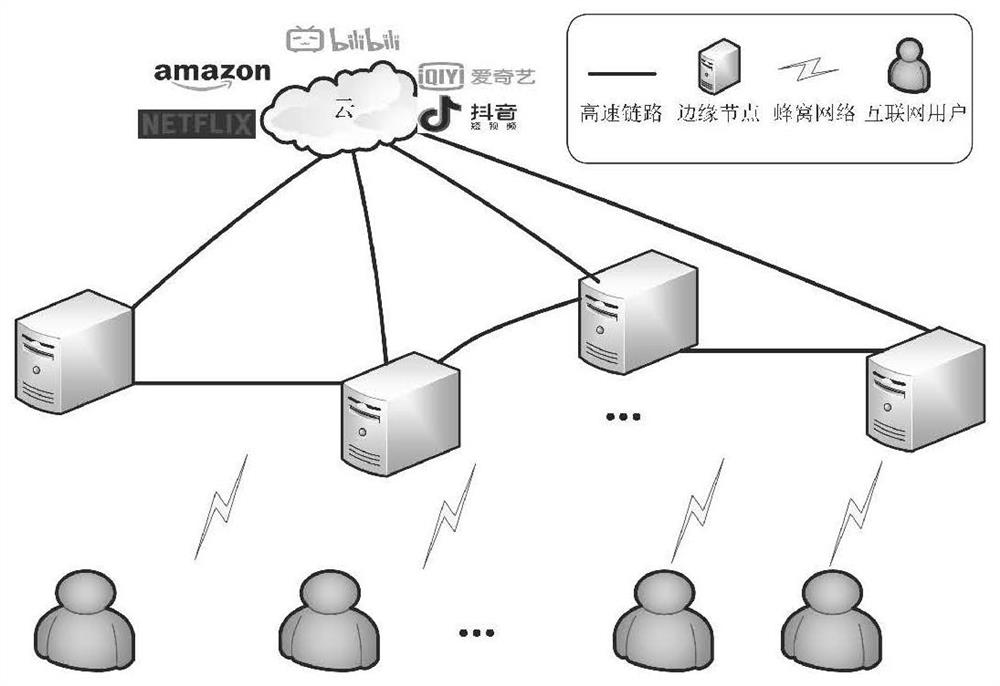

[0080] As shown in Figure 1, the present invention adopts an edge cache system based on edge-cloud and edge-edge collaboration modes. Within this system, a collaborative caching strategy for Internet service applications on edge nodes is studied. The cloud data center is assumed to own and have configured all Internet service applications. Because the storage capacity of an edge node is limited, the node can only download the source files (or application installation package) of a service application from the cloud data center and then install and configure the application locally. For the same reason, the edge node usually discards the source files (or application installation package) once the new service application has been installed.
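The download, install, and discard behavior described in [0080] can be illustrated with a minimal sketch. It is not the patented method: the class names `CloudDataCenter` and `EdgeNode`, the megabyte sizes, and the rule that only the installed footprint counts against capacity are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CloudDataCenter:
    """Hypothetical cloud that holds an installation package for every application."""
    packages: dict[str, int]  # application name -> package size in MB (illustrative)
    downloads: int = 0        # number of package transfers served so far

    def download(self, app: str) -> int:
        # Stand-in for a real file transfer: count it and return the package size.
        self.downloads += 1
        return self.packages[app]


@dataclass
class EdgeNode:
    """Hypothetical edge node with limited storage."""
    capacity_mb: int
    installed: dict[str, int] = field(default_factory=dict)  # app -> installed size in MB

    def used_mb(self) -> int:
        return sum(self.installed.values())

    def cache(self, app: str, cloud: CloudDataCenter, installed_mb: int) -> bool:
        """Download, install, and discard the package; return False if the app does not fit."""
        if app in self.installed:
            return True
        # Assumption: only the installed footprint counts against capacity.
        if self.used_mb() + installed_mb > self.capacity_mb:
            return False
        cloud.download(app)                 # fetch the package from the cloud
        self.installed[app] = installed_mb  # install; the package itself is not kept
        return True

    def evict(self, app: str) -> None:
        self.installed.pop(app, None)


cloud = CloudDataCenter(packages={"video": 300, "maps": 200})
node = EdgeNode(capacity_mb=500)
node.cache("video", cloud, installed_mb=400)
node.evict("video")
node.cache("video", cloud, installed_mb=400)  # the package must be downloaded again
```

Because the package is discarded after installation, re-caching an evicted application costs another download from the cloud in this sketch, which is why the `downloads` counter increases on the second `cache` call.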

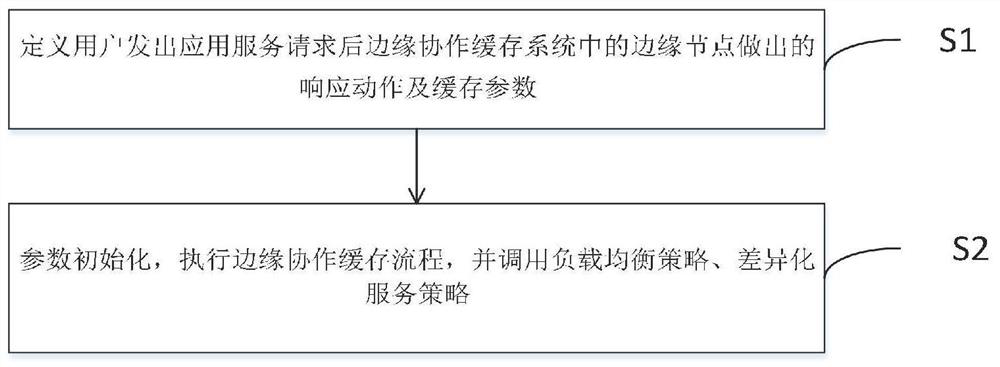

[0081] As shown in Figure 2, an edge collaborative caching method for load balancing of differentiated services in Internet scenarios includes the following steps:

[0082] S1: Define the response actions and cache parameters made...
