Neural network training method, device, equipment, and computer-readable storage medium

A neural network training technology, applied to neural network training methods, devices, equipment, and computer-readable storage media. It addresses the problem that neural network models require a huge amount of computation, with the effects of reducing the network's computation, avoiding processing, and strengthening model interpretability.

Examples

Example 1

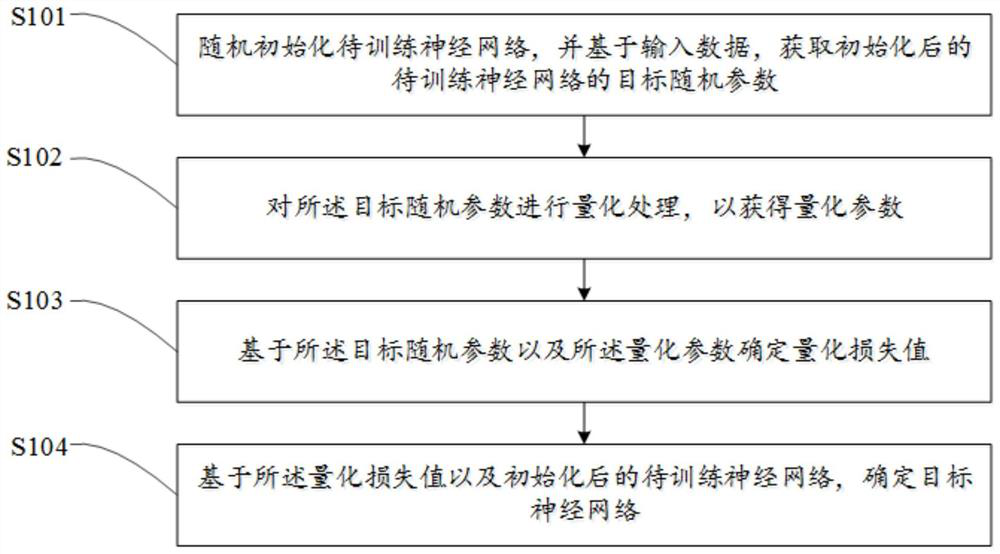

[0065] Based on the first embodiment, a second embodiment of the neural network training method of the present invention is proposed. In this embodiment, step S103 includes:

[0066] Step S201: determining a first loss value based on the target random parameter, and determining a second loss value based on the quantization parameter;

[0067] Step S202: determining the quantized loss value based on the first loss value and the second loss value.

[0068] In this embodiment, after the quantization parameter is obtained, the first loss value is determined from the target random parameter, and the second loss value is determined from the quantization parameter. Specifically, the first loss value is calculated from the input data and the target random parameter, and the second loss value is calculated from the input data and the quantization parameter.
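Steps S201 and S202 can be illustrated with a minimal sketch. The excerpt does not state the model, the loss function, the quantizer, or how the two loss values are combined, so everything concrete here is an assumption made for illustration: a toy linear model, a mean squared error loss, a round-to-grid quantizer, and a weighted sum controlled by a hypothetical `alpha`. The function names are likewise hypothetical and are not taken from the patent.

```python
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def predict(weights: Vector, x: Vector) -> float:
    """Toy linear model (assumption): dot product of weights and one sample."""
    return sum(w * xi for w, xi in zip(weights, x))


def mse_loss(weights: Vector, inputs: Sequence[Vector], targets: Vector) -> float:
    """Mean squared error over the input data (assumed loss function)."""
    errors = [(predict(weights, x) - t) ** 2 for x, t in zip(inputs, targets)]
    return sum(errors) / len(errors)


def quantize(weights: Vector, step: float = 0.5) -> List[float]:
    """Assumed quantizer: round each weight to the nearest multiple of `step`."""
    return [round(w / step) * step for w in weights]


def quantized_loss(
    target_random_params: Vector,
    quantization_params: Vector,
    inputs: Sequence[Vector],
    targets: Vector,
    alpha: float = 0.5,
) -> Tuple[float, float, float]:
    """Return (first_loss, second_loss, combined_loss)."""
    # Step S201: first loss from the input data and the target random parameter.
    first_loss = mse_loss(target_random_params, inputs, targets)
    # Step S201: second loss from the input data and the quantization parameter.
    second_loss = mse_loss(quantization_params, inputs, targets)
    # Step S202: combine the two losses. The weighted sum is an assumption;
    # the excerpt only says the result is "based on" both values.
    combined = alpha * first_loss + (1.0 - alpha) * second_loss
    return first_loss, second_loss, combined
```

Calling `quantized_loss(params, quantize(params), inputs, targets)` returns both intermediate loss values alongside the combined one, which makes it easy to see how much of the total is attributable to quantization error under these assumptions.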

[0069] Specifically, in an embodiment, the step S201 includes:

[0070] Step b, determining a first los...
