Fitness action recognition and evaluation method based on machine vision and deep learning

A technology based on deep learning and machine vision, applied in character and pattern recognition, instruments, and computer components. It addresses problems such as physical injury caused by non-standard fitness movements and home workouts that fail to achieve the intended fitness effect, and it provides strong reference value, improves scoring accuracy and scoring efficiency, and improves fitness efficiency.

Embodiment 1

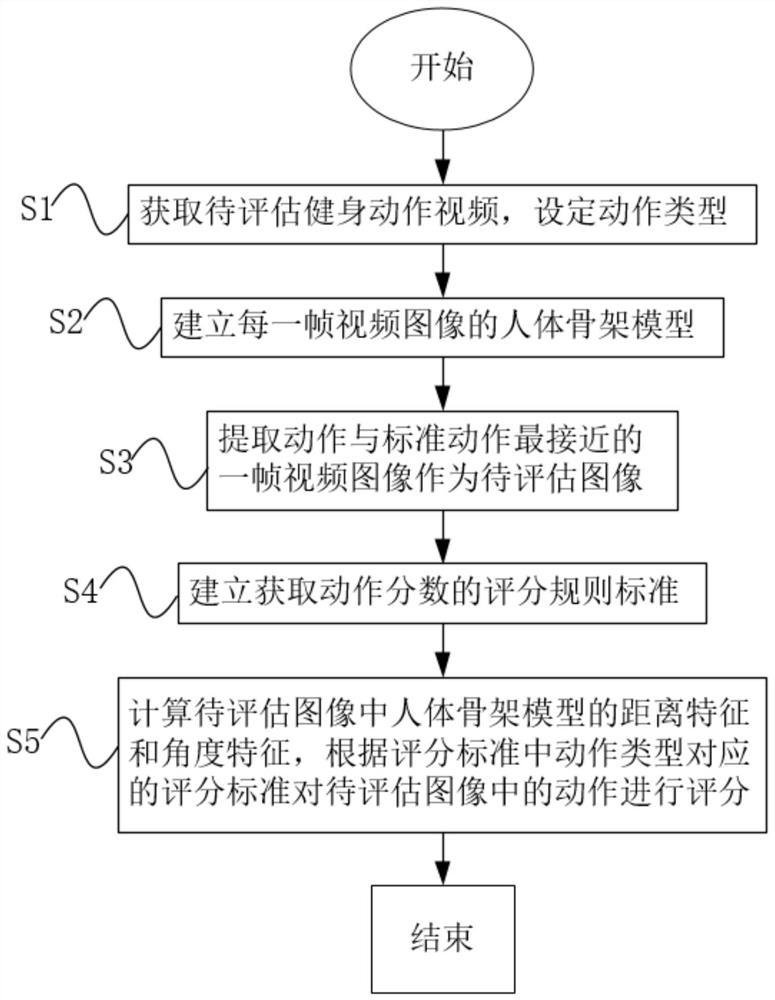

[0038] As shown in Figure 1, a fitness action recognition and evaluation method based on machine vision and deep learning includes the following steps:

[0039] S1: Obtain the video of the fitness action to be evaluated, and set the action type.

[0040] In this embodiment, the user can either turn on the computer camera to record the fitness movements or upload a video of the corresponding movements, and the computer writes the video to the corresponding file. The video is stored in .MP4 format using the MJPG encoder at 30 frames per second, and after cropping by the computer the frame size is 640 × 480.
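The embodiment fixes the output at 640 × 480 and 30 fps but does not say how frames are cut to that size. The following is a minimal sketch assuming a centered crop to the 4:3 aspect ratio followed by a resize; `center_crop_box`, `VIDEO_FPS` and `TARGET_SIZE` are hypothetical names, not taken from the source.

```python
from typing import Tuple

# Capture settings stated in the embodiment: .MP4 container, MJPG encoder, 30 fps.
VIDEO_FPS = 30
TARGET_SIZE = (640, 480)  # (width, height) after cropping


def center_crop_box(width: int, height: int,
                    target: Tuple[int, int] = TARGET_SIZE) -> Tuple[int, int, int, int]:
    """Return (x, y, w, h) of the largest centered region with the target's aspect ratio.

    Assumption: the source only states the final 640x480 size; cropping a
    centered 4:3 region and then resizing it to `target` is one plausible way.
    """
    tw, th = target
    # Compare aspect ratios by cross-multiplying to stay in integer arithmetic.
    if width * th > height * tw:
        # Frame is wider than 4:3: keep the full height and trim the sides.
        h = height
        w = height * tw // th
    else:
        # Frame is taller than (or exactly) 4:3: keep the full width, trim top/bottom.
        w = width
        h = width * th // tw
    return (width - w) // 2, (height - h) // 2, w, h
```

For a 1920 × 1080 camera frame this returns (240, 0, 1440, 1080), a 1440 × 1080 region that scales down exactly to 640 × 480. With OpenCV, the crop would be `frame[y:y+h, x:x+w]`, resized with `cv2.resize(crop, TARGET_SIZE)` and written by a `cv2.VideoWriter` created with `cv2.VideoWriter_fourcc(*"MJPG")`, `VIDEO_FPS` and `TARGET_SIZE`.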

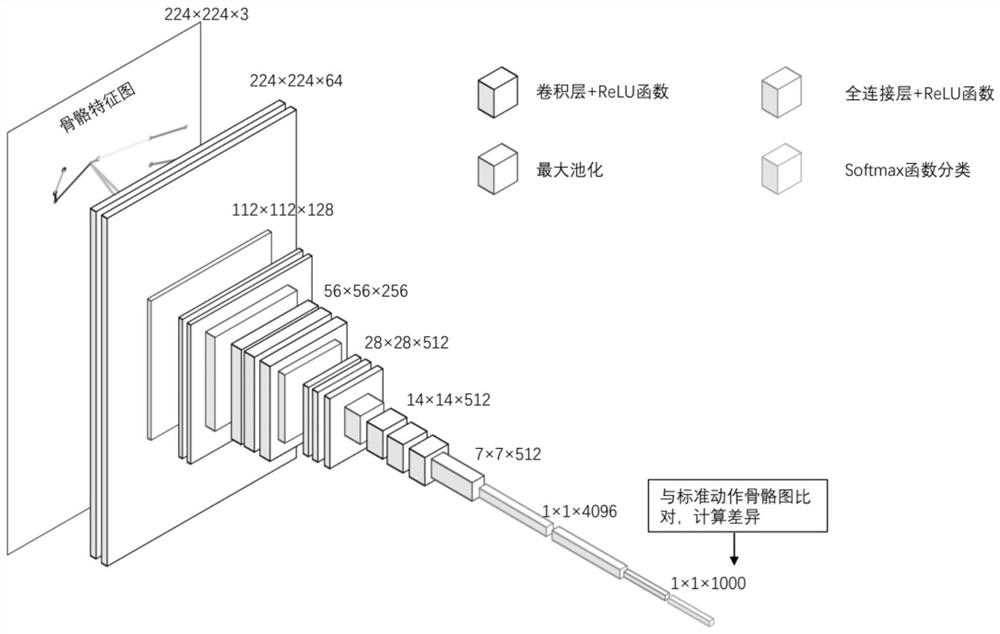

[0041] S2: Establish a human skeleton model for each video frame.

[0042] Step S2 specifically includes:

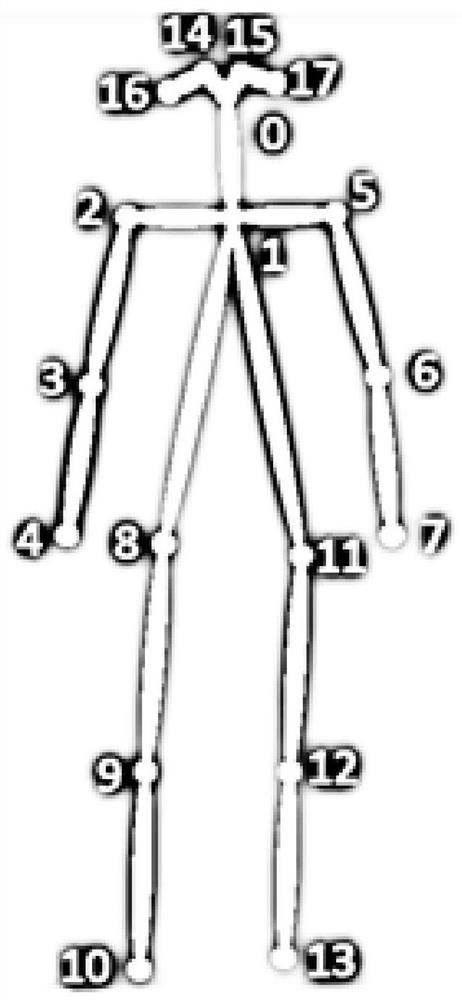

[0043] S21: Select the COCO human body model and use the CMU human pose dataset to obtain the skeleton keypoints of each video frame. The skeleton keypoints include nose...
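Later examples refer to angle features by keypoint indices such as 2-3-4. A minimal sketch of computing such a feature, assuming the keypoints follow OpenPose's 18-point COCO ordering (index 0 = nose) and that the middle index is the vertex of the angle; both the ordering and the helper names `joint_angle` and `angle_feature` are assumptions, since the source list is truncated after "nose".

```python
import math
from typing import Sequence, Tuple

# Assumed keypoint order: OpenPose's 18-point COCO output (index 0 = nose).
COCO_KEYPOINTS = [
    "Nose", "Neck", "RShoulder", "RElbow", "RWrist",
    "LShoulder", "LElbow", "LWrist", "RHip", "RKnee",
    "RAnkle", "LHip", "LKnee", "LAnkle", "REye",
    "LEye", "REar", "LEar",
]

Point = Tuple[float, float]


def joint_angle(a: Point, b: Point, c: Point) -> float:
    """Unsigned angle in degrees at vertex b between segments b->a and b->c."""
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    # atan2(|cross|, dot) is numerically stabler than acos of the cosine.
    cross = v1[0] * v2[1] - v1[1] * v2[0]
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    return math.degrees(math.atan2(abs(cross), dot))


def angle_feature(keypoints: Sequence[Point], i: int, j: int, k: int) -> float:
    """Hypothetical helper: angle feature "i-j-k" is the angle at keypoint j."""
    return joint_angle(keypoints[i], keypoints[j], keypoints[k])
```

Because the angle is unsigned, the downward-pointing y axis of image coordinates does not affect the result. Under this assumed ordering, feature 2-3-4 would be the right elbow angle (right shoulder, right elbow, right wrist).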

Embodiment 2

[0086] In this embodiment, the method further includes step S6: if the action score is less than 60, prompt the user with the incorrect part of the action.

[0087] The specific steps of step S6 include:

[0088] S61: Determine whether the action score is less than 60 points; if so, go to step S62, otherwise output the evaluation score.

[0089] S62: Obtain the evaluation scores $G_m$ corresponding to $a_{m,n}$, identify the scoring-standard type $m$ whose score is failing, and retrieve the error-action prompt corresponding to that scoring-standard type.
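Steps S61 and S62 amount to a threshold check followed by a lookup. A minimal sketch, assuming each per-type score $G_m$ is on the same 0 to 100 scale as the action score and fails below the same mark of 60; the function name `error_prompts`, the type keys and the prompt texts are hypothetical.

```python
from typing import Dict, List, Optional

PASS_MARK = 60.0  # pass mark used in step S61


def error_prompts(action_score: float,
                  standard_scores: Dict[str, float],
                  prompts: Dict[str, str],
                  pass_mark: float = PASS_MARK) -> Optional[List[str]]:
    """Sketch of step S6.

    Returns None when the action passes (S61: the score is output as-is);
    otherwise returns the prompt of every scoring-standard type whose score
    G_m is below the pass mark (S62).
    Assumption: each G_m uses the same 0-100 scale as the action score.
    """
    if action_score >= pass_mark:
        return None
    return [prompts[m] for m, g_m in standard_scores.items()
            if g_m < pass_mark and m in prompts]
```

For the push-up score of 65.1 in Embodiment 1, this sketch returns None and the score is simply output.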

[0090] Taking the push-up as an example, the action score obtained in Embodiment 1 is 65.1, which is greater than or equal to 60, so the score is output.

[0091] Taking the plank as an example, the three groups of values are 70, 80, 90 and 100; 150, 165, 180 and 195; and 165, 175, 185 and 195, respectively. The identified angle feature 2-3-4 is 67°, i.e. $x_{7,1} = 67^\circ$, from which $a_{7,1} = 0.72$ is calculated; the recognized angle feature 16-2...
