Hand posture estimation method and system based on visual and inertial information fusion

A hand posture estimation technology applied in the fields of deep learning, computer vision, and human-computer interaction. It addresses the problem that a single sensor struggles to meet the needs of human-computer interaction tasks and of complex real-world interaction scenarios. The method overcomes the limitations of spatial distance and the natural environment, improves generalization ability, and achieves good real-time performance.

Embodiment Construction

[0045] The present invention will be described in further detail below in conjunction with the accompanying drawings and specific embodiments. It should be understood that the specific embodiments described here are only used to explain the present invention, not to limit the present invention.

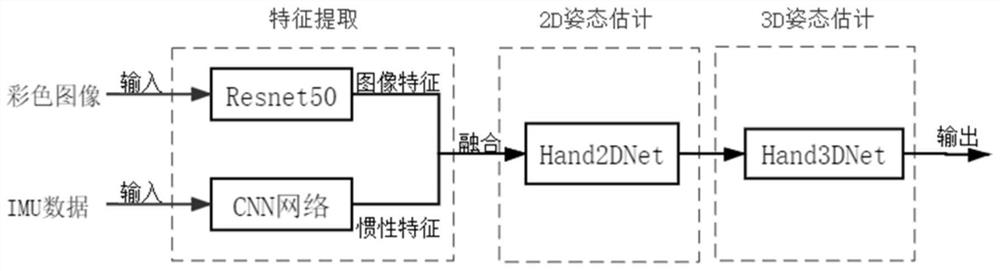

[0046] As shown in Figure 1, the specific implementation steps of the hand pose estimation method based on visual and inertial information fusion in this embodiment are as follows:

[0047] 1. Construction of Hand Pose Dataset

[0048] (101) Data collection

[0049] Visual data for the hand pose dataset are collected using the color camera and ToF depth camera built into the AR glasses. The user wearing the AR glasses can capture color images and depth images of hand movements from a first-person perspective.

[0050] Inertial data are collected using a simple data glove. The data glove has 6 built-in inertial measurement units (In...
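The data collection described above yields three modalities per sample: a color image, a ToF depth image, and readings from the glove's 6 IMUs. A minimal sketch of how one such sample might be held in memory is shown below. The class name `HandPoseSample`, its field names, and the nested-list layout are hypothetical and chosen only for illustration; the source does not specify a storage format, image resolution, or which channels each IMU reports.

```python
from dataclasses import dataclass
from typing import List, Sequence

NUM_IMUS = 6  # from the text: the data glove has 6 built-in IMUs


@dataclass
class HandPoseSample:
    """One multimodal sample (hypothetical layout, not the patent's format)."""

    color: List[List[Sequence[int]]]  # H x W x 3 color image, first-person view
    depth: List[List[float]]          # H x W depth image from the ToF camera
    imu: List[Sequence[float]]        # one reading vector per glove IMU

    def __post_init__(self) -> None:
        # Only the IMU count is stated in the text; the number of channels
        # per IMU is left unconstrained because the source does not give it.
        if len(self.imu) != NUM_IMUS:
            raise ValueError(
                f"expected {NUM_IMUS} IMU readings, got {len(self.imu)}"
            )


# Usage: a tiny 2x2 sample with placeholder values.
sample = HandPoseSample(
    color=[[(0, 0, 0), (255, 255, 255)], [(10, 20, 30), (40, 50, 60)]],
    depth=[[0.50, 0.52], [0.49, 0.51]],
    imu=[[0.0, 0.0, 0.0] for _ in range(NUM_IMUS)],
)
```

Validating the IMU count at construction time catches incomplete glove readings early, before a sample reaches any later processing stage.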
