Online multi-target tracking method based on a unified target motion perception and re-identification network

A motion-aware multi-target tracking technique, applied in the field of online multi-target tracking. It addresses frequent identity switches and failures to associate targets; it adds a re-identification branch, improves performance, and strengthens robustness to occlusion.


Embodiment Construction

[0039] The following embodiments further illustrate the present invention with reference to the accompanying drawings. The embodiments are implemented on the basis of the technical solution of the present invention and give the implementation and the specific operating procedure, but the scope of protection of the present invention is not limited to the following embodiments.

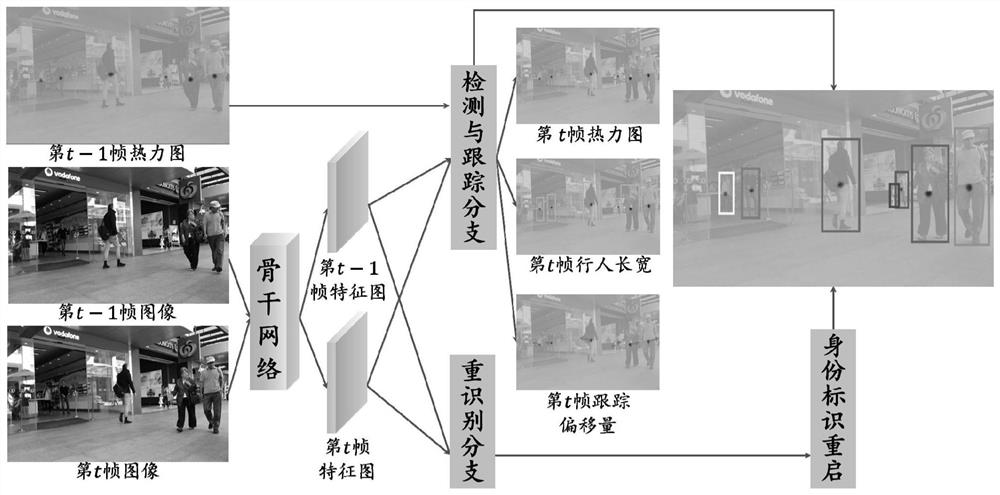

[0040] Referring to Figure 1, the embodiment of the present invention includes the following steps:

[0041] A. Input the current frame image and the previous frame image into the backbone network to obtain the feature maps of the two frames.
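
Step A can be pictured as one backbone applied to both frames in turn. The sketch below is only an illustration under stated assumptions: `toy_backbone` is a hypothetical stand-in (stride-4 average pooling over a single-channel image) and not the network of the invention, and the names `extract_pair_features`, `prev_frame`, and `curr_frame` are invented for this example.

```python
from typing import Callable, List, Tuple

Image = List[List[float]]  # single-channel image stored as rows of pixel values


def toy_backbone(image: Image, stride: int = 4) -> Image:
    """Hypothetical stand-in for the backbone: average pooling with the given stride."""
    rows, cols = len(image), len(image[0])
    features = []
    for r in range(0, rows - stride + 1, stride):
        out_row = []
        for c in range(0, cols - stride + 1, stride):
            block = [image[r + i][c + j] for i in range(stride) for j in range(stride)]
            out_row.append(sum(block) / len(block))
        features.append(out_row)
    return features


def extract_pair_features(
    backbone: Callable[[Image], Image], prev_frame: Image, curr_frame: Image
) -> Tuple[Image, Image]:
    """Run the same backbone on both frames; return (previous, current) feature maps."""
    return backbone(prev_frame), backbone(curr_frame)


# Two 8x8 dummy frames: an all-zero previous frame and an all-one current frame.
prev_frame = [[0.0] * 8 for _ in range(8)]
curr_frame = [[1.0] * 8 for _ in range(8)]
prev_feat, curr_feat = extract_pair_features(toy_backbone, prev_frame, curr_frame)
```

Passing the backbone as a callable keeps the pairing logic independent of the network, so a real feature extractor could be substituted without changing `extract_pair_features`.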

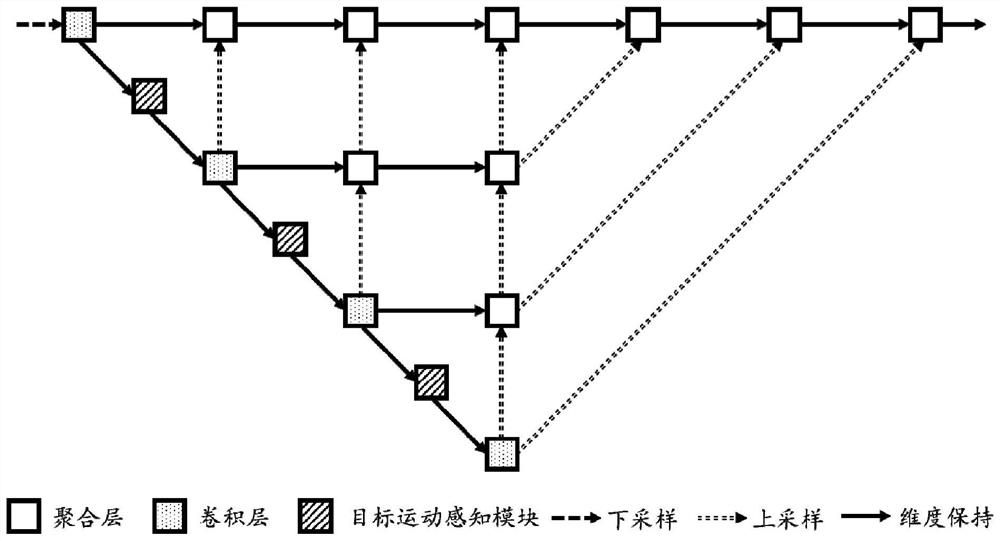

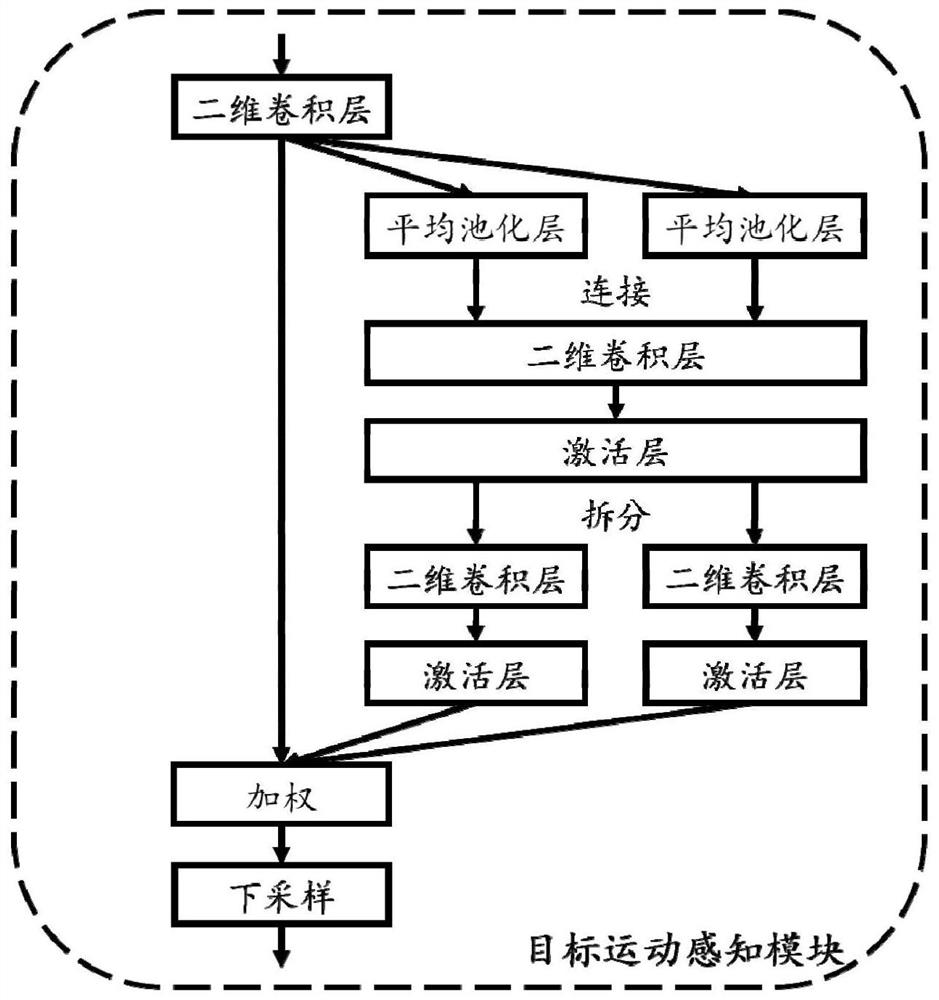

[0042] As shown in Figure 2, the backbone network is a modified DLA-34 network. The DLA-34 network consists of an iterative deep aggregation (IDA) module and a hierarchical deep aggregation (HDA) module; all ordinary convolutional layers in the upsampling module of the DLA-34 network are replaced with deformable convolu...
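
As a reminder of what a deformable convolution computes, the following is a minimal single-channel sketch in plain Python, not the implementation of the invention. Each of the nine kernel taps samples the input at its regular grid position plus a per-location offset, using bilinear interpolation for fractional positions. The names `bilinear` and `deform_conv3x3` are hypothetical, and the offsets are supplied by hand here, whereas in a trained network they would typically be predicted by an additional convolution.

```python
import math
from typing import List, Tuple

Grid = List[List[float]]
Offsets = List[List[List[Tuple[float, float]]]]  # offsets[r][c][k] = (dr, dc)


def bilinear(x: Grid, r: float, c: float) -> float:
    """Sample x at fractional (r, c); positions outside the map contribute zero."""
    r0, c0 = math.floor(r), math.floor(c)
    value = 0.0
    for rr in (r0, r0 + 1):
        for cc in (c0, c0 + 1):
            if 0 <= rr < len(x) and 0 <= cc < len(x[0]):
                weight = (1 - abs(r - rr)) * (1 - abs(c - cc))
                value += weight * x[rr][cc]
    return value


def deform_conv3x3(x: Grid, kernel: Grid, offsets: Offsets) -> Grid:
    """3x3 deformable convolution with stride 1 and zero padding 1."""
    taps = [(i, j) for i in (-1, 0, 1) for j in (-1, 0, 1)]
    out = []
    for r in range(len(x)):
        row = []
        for c in range(len(x[0])):
            acc = 0.0
            for k, (i, j) in enumerate(taps):
                dr, dc = offsets[r][c][k]  # displacement of tap k at this location
                acc += kernel[i + 1][j + 1] * bilinear(x, r + i + dr, c + j + dc)
            row.append(acc)
        out.append(row)
    return out


x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0]]
identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]

# Zero offsets: every tap stays on the regular grid.
zero = [[[(0.0, 0.0)] * 9 for _ in range(3)] for _ in range(3)]
same = deform_conv3x3(x, identity, zero)

# Every tap displaced one column to the right.
right = [[[(0.0, 1.0)] * 9 for _ in range(3)] for _ in range(3)]
shifted = deform_conv3x3(x, identity, right)
```

With all offsets set to zero the layer reduces to an ordinary 3x3 convolution, so `same` equals `x` for the identity kernel. With every tap displaced one column to the right, `shifted` holds the input shifted left by one column and zero-filled at the right edge.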
