Real-time self-generating pose calculation method based on variance component estimation theory

A pose calculation method based on variance component estimation, applicable to computing, navigation computation instruments, computer components, and related fields. It addresses the difficulty of ensuring an accurate solution for pose information.
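The excerpt names variance component estimation (VCE) but does not show how the variance components are computed. As general background only, here is a minimal sketch of iterative VCE in the simplified Helmert form. It assumes a scalar linear model y = a·x + e with several observation groups, where every observation in group i shares an unknown variance σ_i². Each iteration solves weighted least squares with weights 1/σ_i² and then updates σ_i² = v_iᵀv_i / r_i, where r_i = n_i − tr(N⁻¹N_i) is the group redundancy. The function name `helmert_vce`, the model, and the data are illustrative assumptions, not the patent's own formulation.

```python
def helmert_vce(groups, max_iter=50, tol=1e-10):
    """Iterative variance component estimation (simplified Helmert form).

    Hypothetical sketch: each group is a list of (a, y) pairs following the
    scalar model y = a * x + e, with one unknown variance per group.
    Returns the weighted least-squares estimate of x and the group variances.
    """
    sigma2 = [1.0] * len(groups)  # start from equal variances
    x = 0.0
    for _ in range(max_iter):
        # Normal-equation parts: N_i = sum(a^2) / sigma_i^2, u_i = sum(a*y) / sigma_i^2
        n_parts = [sum(a * a for a, _ in g) / s for g, s in zip(groups, sigma2)]
        u_parts = [sum(a * y for a, y in g) / s for g, s in zip(groups, sigma2)]
        n_total = sum(n_parts)
        x = sum(u_parts) / n_total  # weighted least-squares solution

        new_sigma2 = []
        for g, n_i in zip(groups, n_parts):
            sq_resid = sum((y - a * x) ** 2 for a, y in g)  # v_i^T v_i
            # Group redundancy r_i = n_i - tr(N^-1 N_i); scalar case: n_i - N_i / N
            redundancy = len(g) - n_i / n_total
            # Floor avoids a zero variance (and a later division by zero)
            new_sigma2.append(max(sq_resid / redundancy, 1e-12))

        converged = all(abs(new / old - 1.0) < tol
                        for new, old in zip(new_sigma2, sigma2))
        sigma2 = new_sigma2
        if converged:
            break
    return x, sigma2


# Usage: two groups measuring the same quantity with different precision
precise = [(1.0, y) for y in (10.01, 9.99, 10.02, 9.98, 10.00)]
noisy = [(1.0, y) for y in (10.5, 9.4, 10.3, 9.7, 10.1)]
x_hat, (s2_precise, s2_noisy) = helmert_vce([precise, noisy])
print(x_hat, s2_precise, s2_noisy)
```

The estimated variance of the noisy group comes out several hundred times larger than that of the precise group, so the precise group dominates the weighted solution. Real pose estimation would replace the scalar model with a full design matrix, but the reweighting loop has the same structure.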


Embodiment Construction

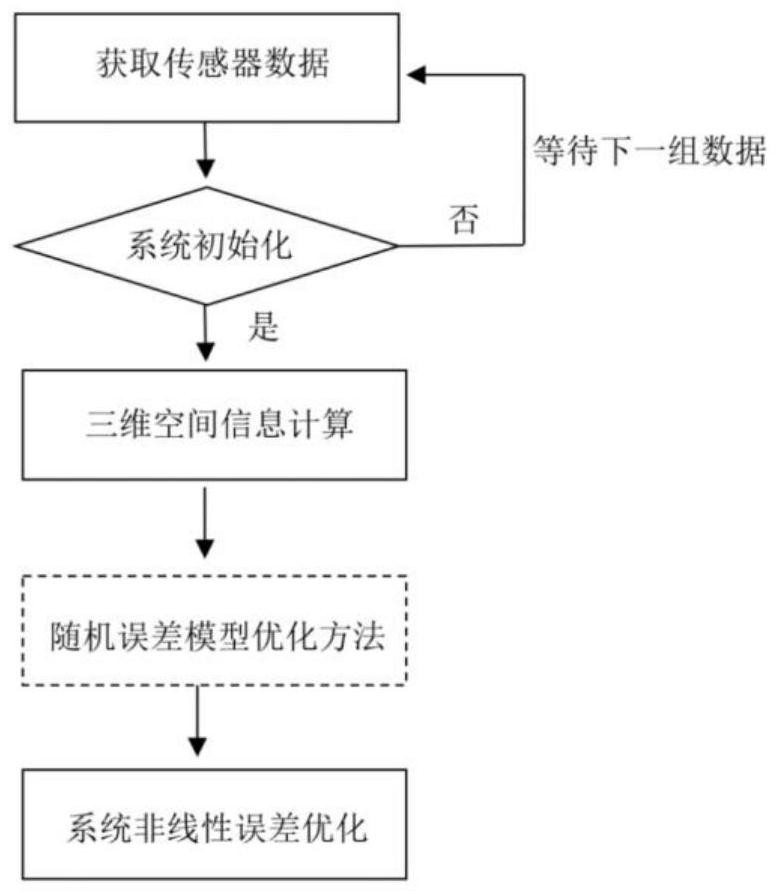

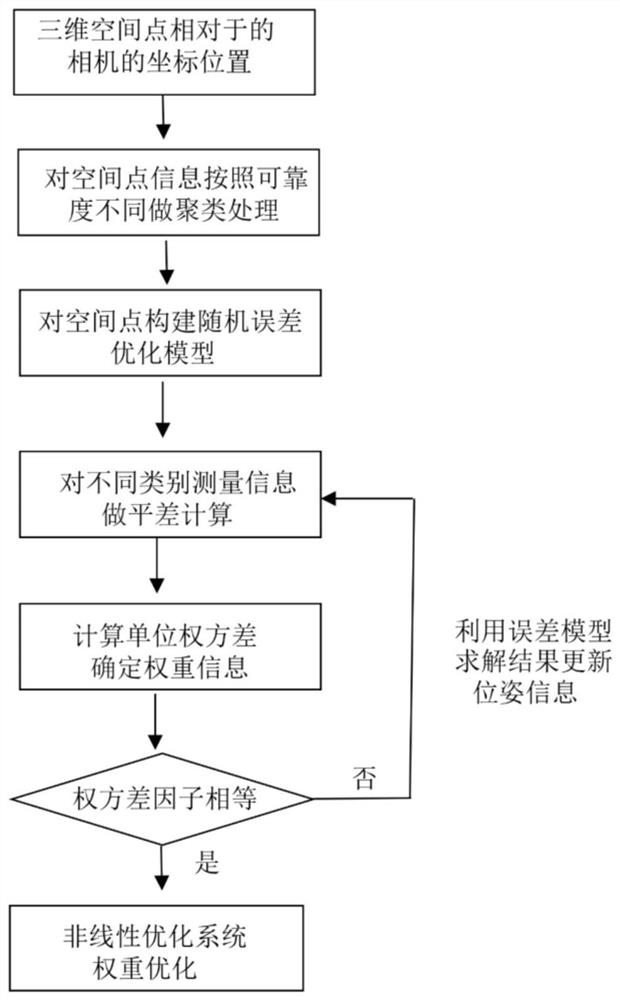

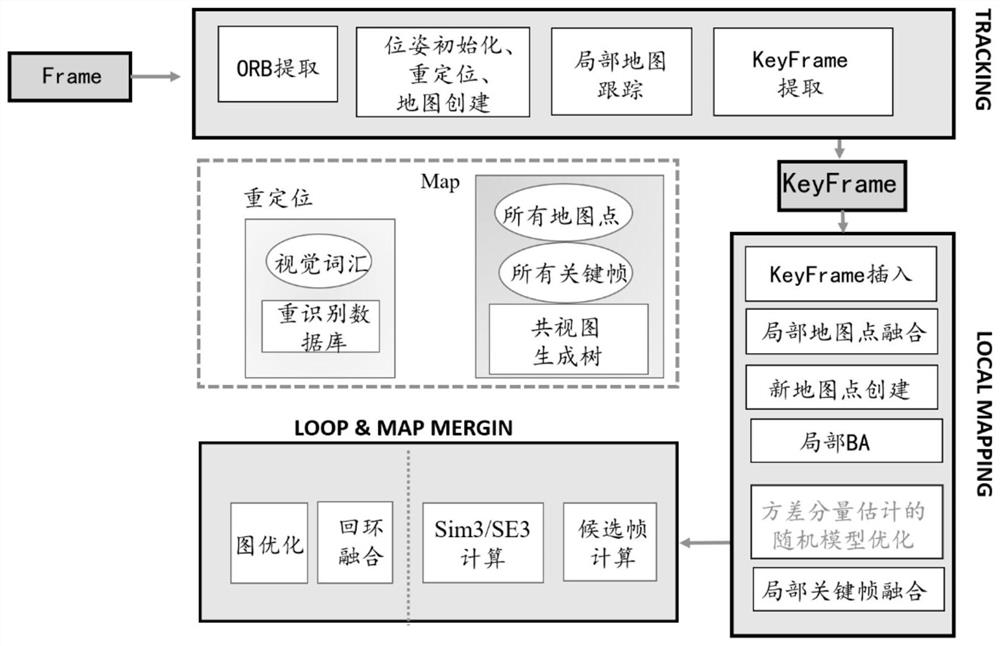

[0073] The following is a detailed description of the visual navigation algorithm based on variance component estimation in the present invention:

[0074] Step 1: Acquire image measurements from the visual sensor in real time and initialize the system. For the binocular stereo camera, initialization is performed by extracting feature points, matching feature points between the left and right camera images, and using the origin position information of the unit.
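The text names left-right feature matching but gives no matching rule. The following is a minimal sketch under stated assumptions: the stereo images are rectified, so a correct match lies on (nearly) the same image row with a positive disparity; each feature carries a binary descriptor packed into an integer and compared by Hamming distance. The function name `match_stereo` and the thresholds `row_tol`, `max_disparity`, and `max_hamming` are hypothetical, and feature extraction itself is not shown.

```python
def hamming(d1, d2):
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(d1 ^ d2).count("1")


def match_stereo(left, right, row_tol=1.0, max_disparity=128.0, max_hamming=40):
    """Match left features to right features on a rectified stereo pair.

    Each feature is a tuple (u, v, descriptor). Returns a list of
    (left_index, right_index, hamming_distance) for accepted matches.
    """
    matches = []
    for i, (ul, vl, dl) in enumerate(left):
        best_j, best_dist = -1, max_hamming + 1
        for j, (ur, vr, dr) in enumerate(right):
            disparity = ul - ur
            # Epipolar constraint (same row) and valid disparity range
            if abs(vl - vr) > row_tol or not (0.0 < disparity <= max_disparity):
                continue
            dist = hamming(dl, dr)
            if dist < best_dist:  # keep the closest descriptor
                best_j, best_dist = j, dist
        if best_j >= 0:
            matches.append((i, best_j, best_dist))
    return matches


left = [(100.0, 50.0, 0b10101010), (200.0, 80.0, 0b11110000)]
right = [(90.0, 50.5, 0b10101011), (150.0, 80.0, 0b11110000), (95.0, 120.0, 0b10101010)]
print(match_stereo(left, right))  # [(0, 0, 1), (1, 1, 0)]
```

Restricting the search to one row is what makes rectification worthwhile: it reduces a 2D search to 1D and rejects most false candidates before descriptors are compared. A production matcher would usually add a left-right consistency check or a ratio test.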

[0075] Step 2: After initialization is complete, use the measurements to calculate the coordinates of the three-dimensional spatial points.

[0076] Step 2.1: When computing the pose with the visual sensor, the spatial coordinates corresponding to the binocular measurements are calculated directly from the camera baseline, as follows:

[0077]

[0078] Here, f is the focal length of the camera, b is the baseline size o...
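The [0077] equation itself is not reproduced in this excerpt. For orientation, the standard rectified pinhole stereo model computes depth from the focal length f and baseline b as Z = f·b/d, where d = u_L − u_R is the disparity, and then back-projects the left-image pixel. The sketch below assumes that textbook model, with f in pixels, b in metres, and a principal point (c_x, c_y) that the excerpt does not mention; the patent's exact expression may differ.

```python
def triangulate(u_left, v_left, u_right, f, b, cx, cy):
    """Recover (X, Y, Z) in the left-camera frame from a rectified stereo match.

    Assumes the standard pinhole model: f in pixels, b in metres,
    (cx, cy) the principal point in pixels. Output units follow b.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * b / disparity        # depth from similar triangles
    x = (u_left - cx) * z / f    # lateral offset
    y = (v_left - cy) * z / f    # vertical offset
    return x, y, z


# A 30-pixel disparity with f = 500 px and b = 0.12 m places the point 2 m away
print(triangulate(420.0, 290.0, 390.0, f=500.0, b=0.12, cx=320.0, cy=240.0))
```

Because Z is inversely proportional to the disparity, a fixed pixel error in d produces a depth error that grows roughly with Z², which is one reason distant stereo points deserve lower weight in a subsequent pose solution.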
