Target segmentation system and its training method, target segmentation method and equipment

A technology relating to target segmentation and training images in the field of computer vision. It addresses the lack of perception ability for targets of multiple sizes and achieves the effect of increasing running time.

Examples

Embodiment 1

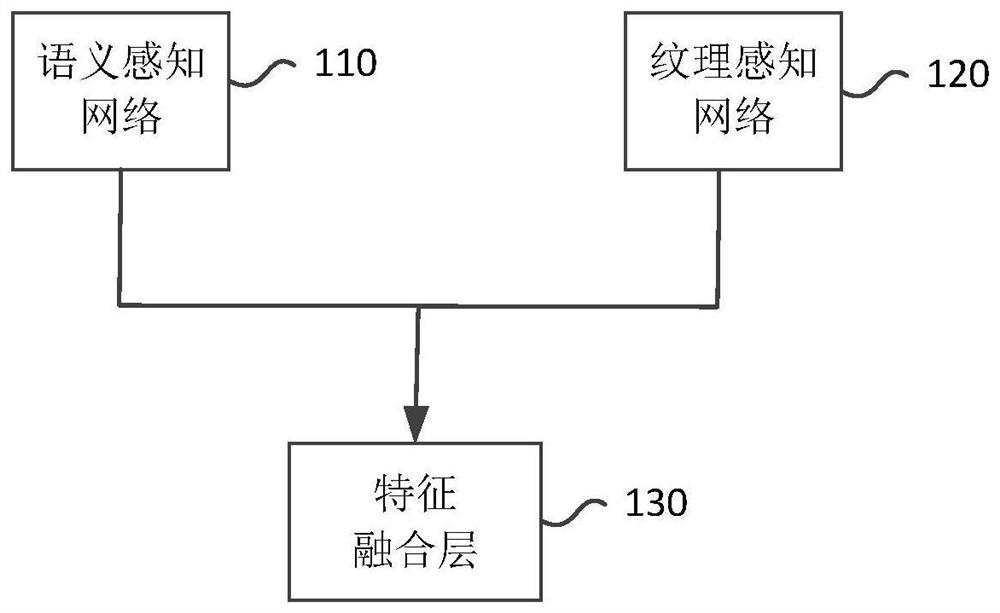

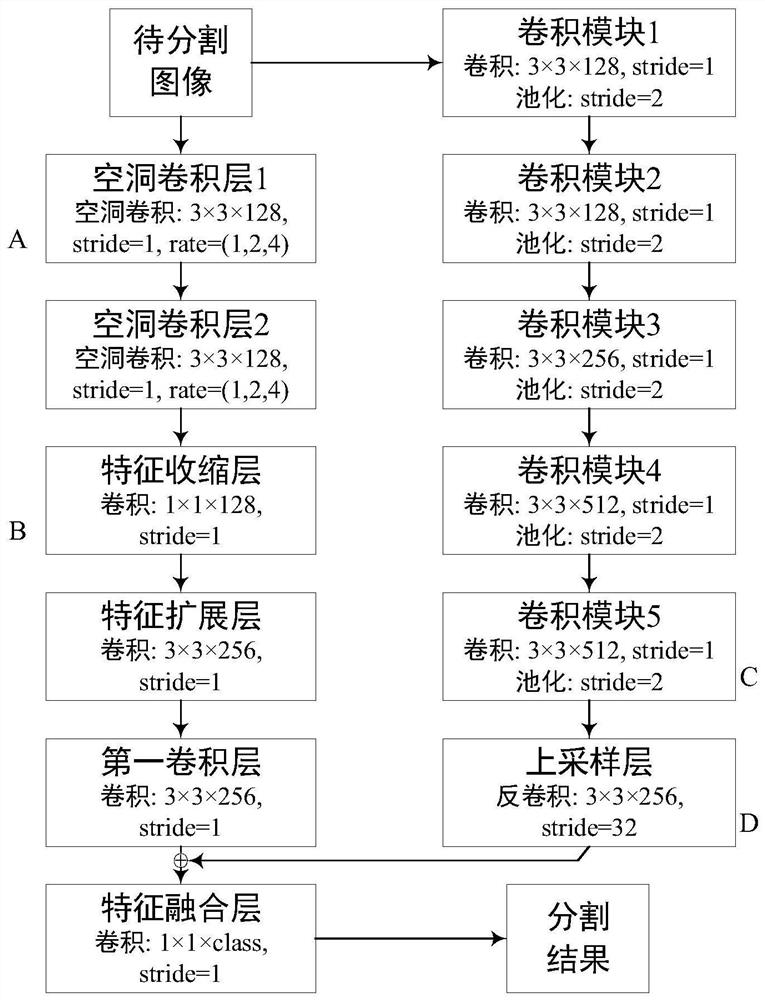

[0066] This embodiment proposes an object segmentation system. The system adopts a dual-branch multi-scale feature fusion model, which is suited to images in which objects are of non-uniform scale, and improves the accuracy and robustness of multi-scale object segmentation in natural images. Figure 1 is a schematic structural diagram of an object segmentation system provided by an embodiment of the present invention. As shown in Figure 1, the system includes a semantic perception network 110, a texture perception network 120, and a feature fusion layer 130.
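The excerpt names the three components but does not say how the feature fusion layer 130 combines the two branches. The following Python sketch shows one common arrangement under stated assumptions. The semantic branch pools, so its maps are assumed to come out at a lower resolution. The texture branch is pooling-free, so its maps are assumed to keep the input resolution. Fusion is assumed to be nearest-neighbour upsampling of the semantic maps followed by channel concatenation. The function names `upsample_nearest` and `fuse` are hypothetical.

```python
from typing import List

Map = List[List[float]]  # one 2-D feature map, H x W


def upsample_nearest(fmap: Map, factor: int) -> Map:
    """Nearest-neighbour upsampling: repeat every row and every value `factor` times."""
    return [[v for v in row for _ in range(factor)]
            for row in fmap for _ in range(factor)]


def fuse(semantic: List[Map], texture: List[Map], factor: int) -> List[Map]:
    """Hypothetical fusion: bring the low-resolution semantic maps up to the
    texture resolution, then concatenate the two channel lists."""
    up = [upsample_nearest(m, factor) for m in semantic]
    th, tw = len(texture[0]), len(texture[0][0])
    if any(len(m) != th or len(m[0]) != tw for m in up):
        raise ValueError("branch resolutions differ after upsampling")
    return up + texture


# Usage: 1 semantic channel at 2x2 and 2 texture channels at 4x4 give 3 channels at 4x4.
semantic = [[[1.0, 2.0], [3.0, 4.0]]]
texture = [[[0.0] * 4 for _ in range(4)] for _ in range(2)]
fused = fuse(semantic, texture, factor=2)
```

Concatenation keeps both feature sets intact and leaves later layers to weight them. An element-wise sum would be a lighter alternative, but it requires equal channel counts in the two branches.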

[0067] The semantic perception network 110 takes the form of a fully convolutional network and includes a convolution module, a pooling module, and a regularization module. The semantic perception network is configured to obtain the first preprocessed data of the image and to extract a semantic feature map of the image.
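A single stage of the semantic branch can be sketched as convolution, then 2x2 max pooling, then normalization. This is a minimal sketch, not the patent's network. The kernel, the pooling size, and the use of per-map standardization as a stand-in for the unspecified "regularization module" are all assumptions. The sketch shows why pooling matters for this branch: each pooling step halves the spatial size, so later layers cover a wider context of the image.

```python
import math
from typing import List

Map = List[List[float]]


def conv2d_same(x: Map, k: Map) -> Map:
    """Stride-1 cross-correlation with an odd-sized kernel and zero padding;
    the output has the same height and width as the input."""
    h, w, r = len(x), len(x[0]), len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # positions outside the map count as zero
                        s += x[ii][jj] * k[di + r][dj + r]
            out[i][j] = s
    return out


def max_pool2x2(x: Map) -> Map:
    """2x2 max pooling with stride 2: halves height and width (an odd last row/column is dropped)."""
    return [[max(x[i][j], x[i][j + 1], x[i + 1][j], x[i + 1][j + 1])
             for j in range(0, len(x[0]) - 1, 2)]
            for i in range(0, len(x) - 1, 2)]


def standardize(x: Map, eps: float = 1e-5) -> Map:
    """Assumed stand-in for the regularization module: zero mean, unit variance per map."""
    vals = [v for row in x for v in row]
    mean = sum(vals) / len(vals)
    var = sum((v - mean) ** 2 for v in vals) / len(vals)
    return [[(v - mean) / math.sqrt(var + eps) for v in row] for row in x]


def semantic_stage(x: Map, k: Map) -> Map:
    """One hypothetical stage: convolution, then pooling, then normalization."""
    return standardize(max_pool2x2(conv2d_same(x, k)))


identity = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]
image = [[float(4 * r + c + 1) for c in range(4)] for r in range(4)]  # values 1..16
stage_out = semantic_stage(image, identity)  # 4x4 input gives a 2x2 output
```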

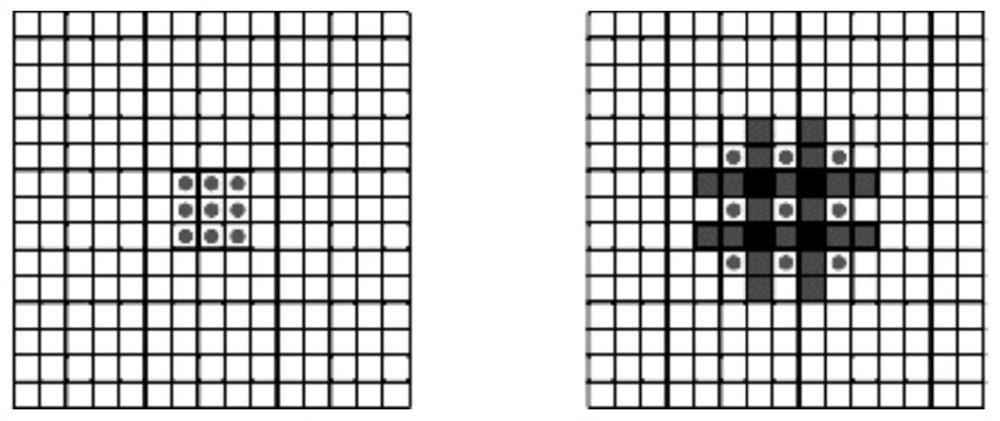

[0068] The texture perception network 120 takes the form of a pooling-free network, including a serially...
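Because the texture branch has no pooling, stride-1 convolutions with zero padding keep every intermediate map at the input resolution, which preserves fine texture detail. The sketch below stacks such convolutions. It assumes plain (undilated) odd-sized kernels, since the excerpt is truncated before it describes the serially connected modules; `texture_branch` and `receptive_field` are hypothetical names. With n stacked k x k stride-1 layers, the receptive field grows only linearly, to 1 + n(k - 1) pixels per side.

```python
from typing import List

Map = List[List[float]]


def conv2d_same(x: Map, k: Map) -> Map:
    """Stride-1, zero-padded cross-correlation with an odd-sized kernel (size-preserving)."""
    h, w, r = len(x), len(x[0]), len(k) // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            s = 0.0
            for di in range(-r, r + 1):
                for dj in range(-r, r + 1):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:
                        s += x[ii][jj] * k[di + r][dj + r]
            out[i][j] = s
    return out


def texture_branch(x: Map, kernels: List[Map]) -> Map:
    """Hypothetical pooling-free branch: serially applied stride-1 convolutions,
    so the output keeps the input's height and width."""
    for k in kernels:
        x = conv2d_same(x, k)
    return x


def receptive_field(num_layers: int, kernel_size: int = 3) -> int:
    """Receptive-field width of `num_layers` stacked stride-1, undilated convolutions."""
    return 1 + num_layers * (kernel_size - 1)


box = [[1.0 / 9.0] * 3 for _ in range(3)]  # simple 3x3 averaging kernel
image = [[float((r * 7 + c * 3) % 5) for c in range(6)] for r in range(5)]  # 5x6 toy input
out = texture_branch(image, [box, box, box])  # still 5x6
```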

Embodiment 2

[0109] This embodiment provides a training method for an object segmentation system, used to train the object segmentation system described in Embodiment 1. Figure 5 is a flowchart of a training method for an object segmentation system provided by an embodiment of the present invention. As shown in Figure 5, the method includes steps S10 to S40.

[0110] S10. Acquire a training image set that includes a plurality of training images, and perform pixel-level manual segmentation and annotation on each training image to obtain an annotation map for each training image.

[0111] S20. Perform original-scale data augmentation on each training image to obtain the first preprocessed data of each training image; perform multi-scale data augmentation on the first preprocessed data to obtain the second preprocessed data of each training image; wherein the original-scale data augmentation includes at least one of flipping, rotati...
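Step S20 produces two inputs per training image. The sketch below makes several assumptions. The original-scale augmentation is a random horizontal flip plus a random multiple-of-90-degree rotation. The multi-scale augmentation uses nearest-neighbour resizing with a hypothetical set of scale factors (0.5, 1.0, 2.0). Each transform is applied identically to the annotation map so that labels stay aligned with pixels. Nearest-neighbour resizing is chosen because it only copies existing values and therefore never invents new label IDs.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]  # image or annotation map, H x W


def hflip(g: Grid) -> Grid:
    """Mirror left-right."""
    return [row[::-1] for row in g]


def rot90(g: Grid) -> Grid:
    """Rotate 90 degrees clockwise."""
    return [list(r) for r in zip(*g[::-1])]


def resize_nearest(g: Grid, out_h: int, out_w: int) -> Grid:
    """Nearest-neighbour resize; copies existing values, so label IDs stay valid."""
    h, w = len(g), len(g[0])
    return [[g[i * h // out_h][j * w // out_w] for j in range(out_w)] for i in range(out_h)]


def original_scale_aug(img: Grid, ann: Grid, rng: random.Random) -> Tuple[Grid, Grid]:
    """First preprocessed data (assumed): random flip and random multiple-of-90 rotation,
    applied identically to the image and its annotation map."""
    if rng.random() < 0.5:
        img, ann = hflip(img), hflip(ann)
    for _ in range(rng.randrange(4)):
        img, ann = rot90(img), rot90(ann)
    return img, ann


def multi_scale_aug(img: Grid, ann: Grid,
                    scales=(0.5, 1.0, 2.0)) -> List[Tuple[Grid, Grid]]:
    """Second preprocessed data (assumed): one resized (image, annotation) pair per scale."""
    pairs = []
    for s in scales:
        h = max(1, round(len(img) * s))
        w = max(1, round(len(img[0]) * s))
        pairs.append((resize_nearest(img, h, w), resize_nearest(ann, h, w)))
    return pairs


rng = random.Random(0)
img = [[4 * r + c for c in range(4)] for r in range(4)]  # toy 4x4 image
ann = [[0, 0, 1, 1] for _ in range(4)]                  # toy 2-class annotation map
first_img, first_ann = original_scale_aug(img, ann, rng)
second = multi_scale_aug(first_img, first_ann)
```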

Embodiment 3

[0150] This embodiment provides an object segmentation method. First, the target segmentation system is trained using the training method of Embodiment 2; the trained target segmentation system is then used to perform multi-scale target segmentation of images. Figure 7 is a flowchart of an object segmentation method provided by an embodiment of the present invention. As shown in Figure 7, the method includes steps S1 to S4.

[0151] S1: Obtain an image to be segmented.

[0152] S2: Input the image to be segmented, as the first preprocessed data, into the semantic perception network of the trained target segmentation system described in Embodiment 1.

[0153] S3: Input the image to be segmented, as the second preprocessed data, into the texture perception network of the target segmentation system.

[0154] S4: Use the object segmentation system to perform object segmentation on the image to be segmented to obtain an object segmentation map of the...
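Steps S1 to S4 can be sketched as a small driver in which the same image is supplied to both branches. The networks are passed in as callables. The final per-pixel argmax over class score maps is an assumption, because the excerpt is truncated before it describes how S4 produces the segmentation map. The `segment` function and the toy branch functions in the usage call are hypothetical stand-ins.

```python
from typing import Callable, List

Map = List[List[float]]


def segment(image: Map,
            semantic_net: Callable[[Map], List[Map]],
            texture_net: Callable[[Map], List[Map]],
            fuse_and_classify: Callable[[List[Map], List[Map]], List[Map]]) -> List[List[int]]:
    """S1-S4 sketch; `image` is the image to be segmented (S1)."""
    first = image   # S2: used as the first preprocessed data (semantic branch)
    second = image  # S3: used as the second preprocessed data (texture branch)
    # S4 (assumed): fuse both branches into C class-score maps, then take a per-pixel argmax.
    scores = fuse_and_classify(semantic_net(first), texture_net(second))
    h, w = len(scores[0]), len(scores[0][0])
    return [[max(range(len(scores)), key=lambda c: scores[c][i][j]) for j in range(w)]
            for i in range(h)]


# Hypothetical stand-ins: class 0 scores the pixel value, class 1 scores its complement.
seg_map = segment(
    [[0.9, 0.2], [0.4, 0.7]],
    semantic_net=lambda x: [x],
    texture_net=lambda x: [[[1.0 - v for v in row] for row in x]],
    fuse_and_classify=lambda s, t: [s[0], t[0]],
)
```

Passing the branches as callables keeps the driver independent of any particular network implementation; in a real system they would be the trained semantic perception network, texture perception network, and feature fusion layer.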
