Diversified image description statement generation technology based on deep learning

A technology combining image description and deep learning, applied in neural learning methods, still image data retrieval, still image data indexing, etc. It addresses problems of existing approaches such as ignoring image details and generating only a single sentence, and it achieves good readability and a higher utilization rate of network parameters.


Examples

Embodiment 1

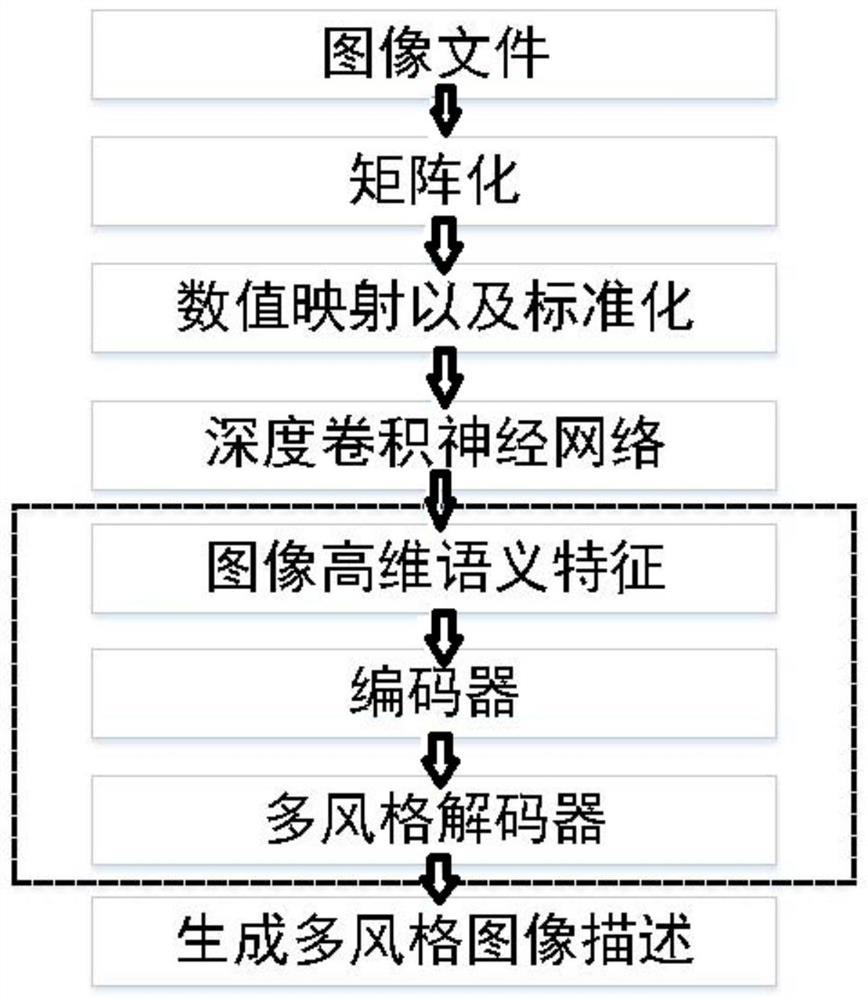

[0059] Figure 1 is the overall flowchart for generating image description sentences. The specific steps are as follows:

[0060] 1) Obtain a real-world image file.
2) Convert the image file into matrices. Each element of a matrix represents the content at the corresponding position of the picture. The number of matrices, and the relationship between values at the same position in different matrices, depend on the color type of the picture.
3) To speed up the convergence of the image description generation model, map the matrixed image data into the range [0, 1] and normalize it.
4) Input the normalized image matrices into the deep convolutional neural network.
5) Obtain the high-dimensional semantic features of the image through the multi-level feature extraction of the deep convolutional neural network.
6) Input the high-dimensional semantic features of the image into the encoder...
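Steps 2 to 5 can be illustrated with a small pure-Python sketch. It assumes an 8-bit RGB image (so three channel matrices; a grayscale image would yield one), normalizes by dividing by the maximum intensity 255, and stands in for the deep convolutional network with a single toy layer of convolution, ReLU, and global average pooling. The patent does not specify the network, kernels, or exact normalization formula, so every function name and kernel below is hypothetical.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Pixel = Tuple[int, int, int]  # (R, G, B); assumption: 8-bit values in 0..255


def to_channel_matrices(pixels: List[List[Pixel]]) -> List[Matrix]:
    """Step 2 (sketch): split an RGB image into one matrix per colour channel."""
    return [[[float(px[c]) for px in row] for row in pixels] for c in range(3)]


def normalize(channels: List[Matrix], max_value: float = 255.0) -> List[Matrix]:
    """Step 3 (sketch): map every value into [0, 1] by dividing by the max intensity."""
    return [[[v / max_value for v in row] for row in ch] for ch in channels]


def conv2d_valid(m: Matrix, k: Matrix) -> Matrix:
    """Single-channel 2-D cross-correlation with no padding ('valid' mode)."""
    kh, kw = len(k), len(k[0])
    out_h, out_w = len(m) - kh + 1, len(m[0]) - kw + 1
    return [[sum(m[i + a][j + b] * k[a][b] for a in range(kh) for b in range(kw))
             for j in range(out_w)] for i in range(out_h)]


def relu(m: Matrix) -> Matrix:
    """Element-wise ReLU activation."""
    return [[max(0.0, v) for v in row] for row in m]


def global_avg_pool(m: Matrix) -> float:
    """Average all values of a feature map into a single number."""
    return sum(sum(row) for row in m) / (len(m) * len(m[0]))


def extract_features(channels: List[Matrix], kernels: List[Matrix]) -> List[float]:
    """Toy stand-in for steps 4-5: one conv + ReLU + pooling per kernel -> feature vector."""
    features = []
    for k in kernels:
        # Convolve each channel, then sum the responses position by position,
        # as a multi-channel convolution filter does.
        maps = [conv2d_valid(ch, k) for ch in channels]
        summed = [[sum(vals) for vals in zip(*rows)] for rows in zip(*maps)]
        features.append(global_avg_pool(relu(summed)))
    return features


if __name__ == "__main__":
    white = [[(255, 255, 255)] * 3 for _ in range(3)]  # 3x3 all-white image
    x = normalize(to_channel_matrices(white))
    print(extract_features(x, [[[1, 1], [1, 1]], [[1, 0], [0, -1]]]))  # [12.0, 0.0]
```

A real implementation would stack many learned convolutional layers (typically in a framework such as PyTorch) to obtain the high-dimensional semantic features passed to the encoder in step 6; the hand-written loops here only make the data flow of each step visible.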
