A quality-of-service-aware cache scheduling method based on feedback and fair queues

A quality-of-service and scheduling technology applied in the field of cache scheduling in computer architecture. It addresses the inability to guarantee quality of service, weak data correlation, and the reduction of overall local load.

Embodiment Construction

[0047] To make the purpose, technical solution, and technical effect of the present invention clearer, the invention is described in further detail below with reference to the accompanying drawings.

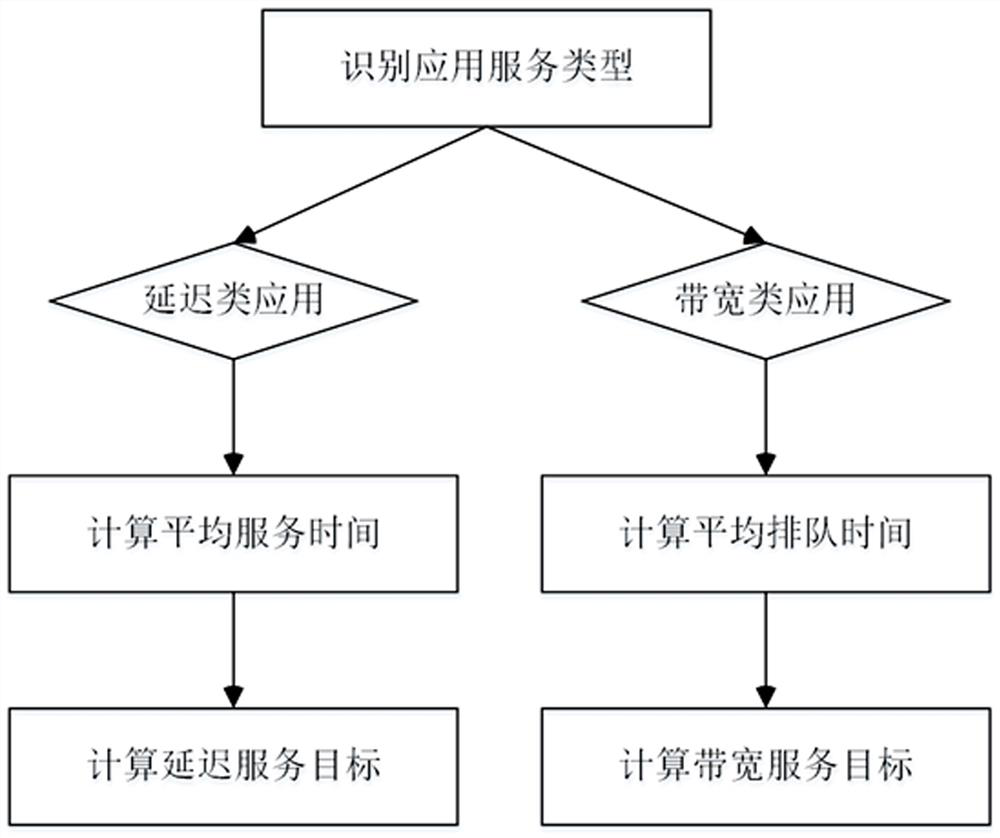

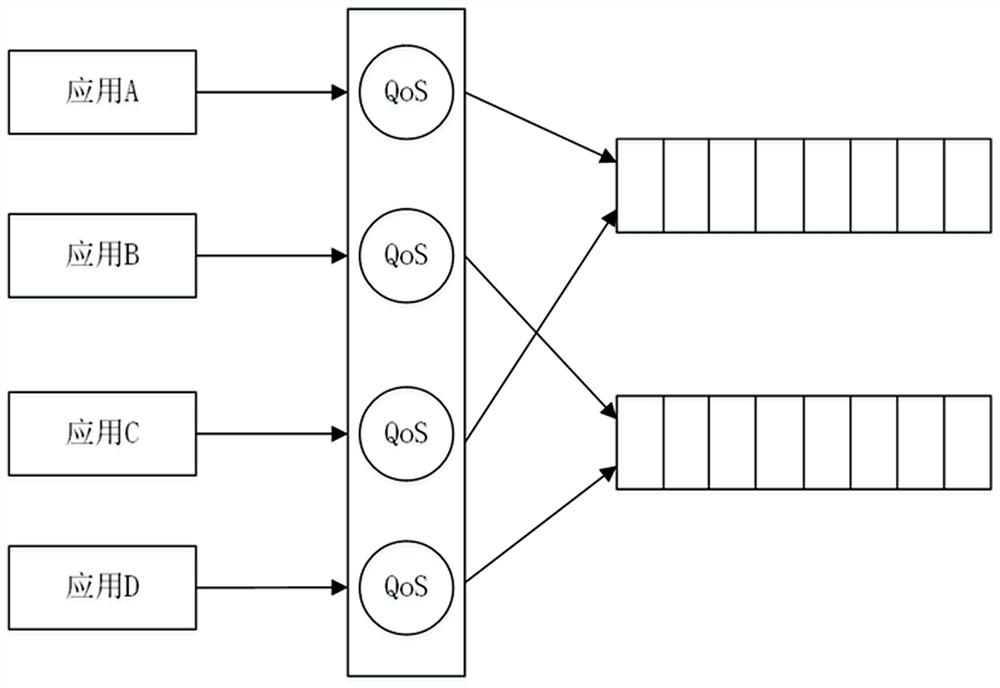

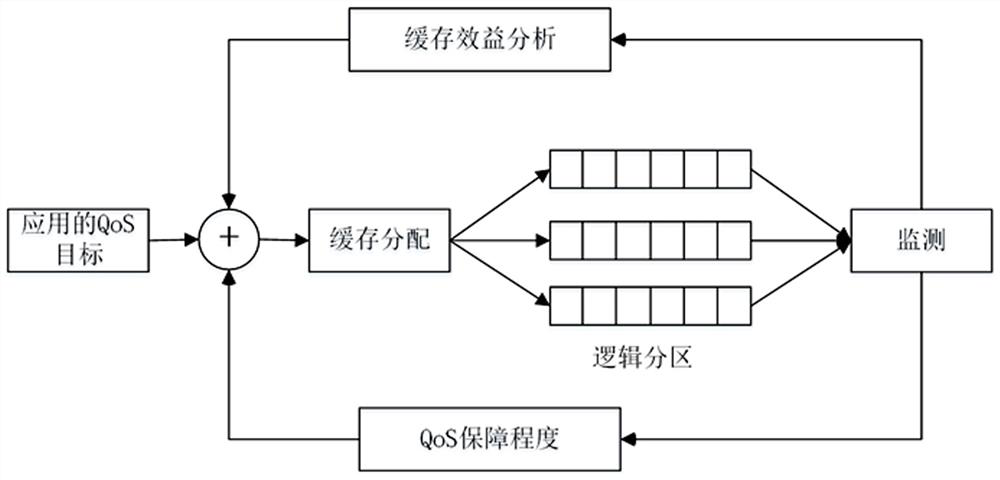

[0048] The present invention proposes a quality-of-service-aware cache scheduling method based on a feedback structure and a fair queue. Using a cache partitioning strategy, the cache is divided into multiple logical partitions. Each application corresponds to one logical partition whose size is adjusted dynamically according to the application's load, and an application can access only its own logical partition. The present invention mainly adopts six modules: a quality-of-service measurement strategy, a start-time fair queue, a feedback-based cache partition management module, a cache block allocation management module, a cache eviction strategy monitoring module, and a cache compression monitoring module. The servic...
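The partitioning scheme described in [0048] can be illustrated with a minimal Python sketch. It assumes each logical partition is an independent LRU list sized in cache blocks, uses the per-window hit ratio as a stand-in for the quality-of-service metric (the actual measurement strategy is not given in this excerpt), and, on each feedback step, moves one block from an application that meets its target to the one furthest below its target. The class `PartitionedCache`, the method `rebalance`, and the `targets`, `step`, and `min_blocks` parameters are hypothetical names, not the patent's modules; the start-time fair queue and compression monitoring are not modeled.

```python
from collections import OrderedDict


class PartitionedCache:
    """Hypothetical sketch: a cache split into per-application logical partitions.

    Each partition is an independent LRU list; an application can only look up
    or insert blocks in its own partition. Capacities are counted in blocks.
    """

    def __init__(self, total_blocks, apps):
        self.total_blocks = total_blocks
        # Assumed starting point: equal shares (any remainder is left unassigned).
        share = total_blocks // len(apps)
        self.capacity = {app: share for app in apps}
        self.blocks = {app: OrderedDict() for app in apps}
        self.hits = {app: 0 for app in apps}
        self.accesses = {app: 0 for app in apps}

    def access(self, app, key, value=None):
        """Look up `key` in `app`'s partition only; insert it on a miss."""
        part = self.blocks[app]  # unknown apps raise KeyError: no shared access
        self.accesses[app] += 1
        if key in part:
            part.move_to_end(key)  # mark as most recently used
            self.hits[app] += 1
            return True
        part[key] = value
        self._evict(app)
        return False

    def _evict(self, app):
        part = self.blocks[app]
        while len(part) > self.capacity[app]:
            part.popitem(last=False)  # drop the least recently used block

    def hit_ratio(self, app):
        n = self.accesses[app]
        return self.hits[app] / n if n else 0.0

    def rebalance(self, targets, step=1, min_blocks=1):
        """Hypothetical feedback step over one measurement window."""
        error = {app: targets[app] - self.hit_ratio(app) for app in self.capacity}
        for app in self.capacity:  # start a fresh measurement window
            self.hits[app] = 0
            self.accesses[app] = 0
        needy = max(error, key=error.get)  # largest shortfall below its target
        donor = min(error, key=error.get)  # most slack relative to its target
        if error[needy] <= 0 or error[donor] > 0:
            return  # all targets met, or no application has spare capacity
        give = min(step, self.capacity[donor] - min_blocks)
        if give <= 0:
            return
        self.capacity[donor] -= give
        self.capacity[needy] += give
        self._evict(donor)  # shrink the donor's partition to its new size


def demo():
    cache = PartitionedCache(total_blocks=8, apps=["A", "B"])
    history = []
    for _ in range(3):  # three measurement windows
        for _ in range(3):
            for k in range(6):  # A cycles over a 6-block working set
                cache.access("A", k)
        for k in range(18):  # B streams blocks it never reuses
            cache.access("B", ("b", k))
        history.append(round(cache.hit_ratio("A"), 3))
        cache.rebalance(targets={"A": 0.9, "B": 0.0})
    return cache.capacity, history


if __name__ == "__main__":
    print(demo())  # ({'A': 6, 'B': 2}, [0.0, 0.0, 0.944])
```

In `demo()`, application A's 6-block working set thrashes under LRU while its partition holds fewer than 6 blocks, so the feedback loop grows it one block per window at the expense of B, which never reuses data. Once A's partition reaches 6 blocks its hit ratio exceeds the 0.9 target and reallocation stops. Moving a fixed number of blocks per window is a deliberately conservative controller choice for this sketch; a step proportional to the error would converge faster but could oscillate.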
