DeepFake defense method and system based on visual adversarial reconstruction

A vision and encoding technology, applied in character and pattern recognition, fraud detection, computer components, and similar fields, that addresses the problems of disrupting DeepFake models and of being easily destroyed by adversarial perturbation.


Examples

Embodiment 1

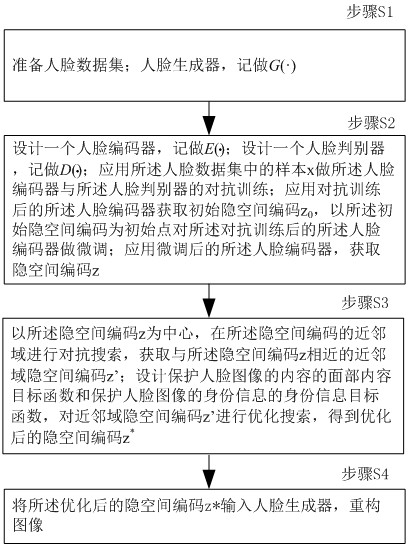

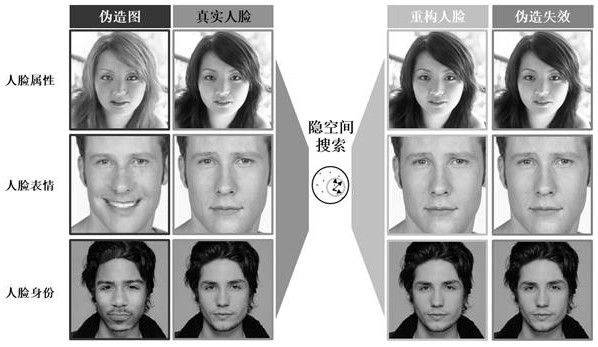

[0060] The invention discloses a DeepFake (deep forgery) defense method based on visual adversarial reconstruction. Figure 1 is a flowchart of the DeepFake defense method based on visual adversarial reconstruction according to an embodiment of the present invention. As shown in Figure 1, the method includes:

[0061] Step S1: prepare a face data set; a face generator, denoted G(·); and a target DeepFake model, denoted F(·). The face generator and the target DeepFake model are existing networks with known structures;

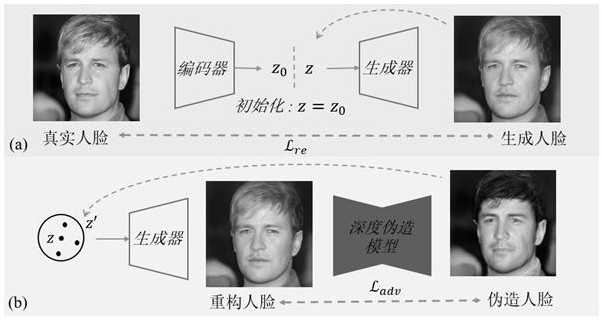

[0062] Step S2: design a face encoder, denoted E(·), and a face discriminator, denoted D(·). Use the samples x in the face data set to adversarially train the face encoder and the face discriminator; apply the adversarially trained face encoder to obtain the initial latent space code z_0; fine-tune the adversarially trained face encoder with the initial latent space code...
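The data flow among E(·), G(·) and D(·) in step S2 can be illustrated with a minimal sketch. It is not the patent's implementation: the loss form (a standard non-saturating GAN objective), the function name `adversarial_losses`, and the toy stand-ins for the three networks are all assumptions made for illustration, since the excerpt does not specify the losses or architectures.

```python
import math
from typing import Callable, List, Tuple

Vector = List[float]


def adversarial_losses(
    x: Vector,
    E: Callable[[Vector], Vector],   # face encoder E(.)
    G: Callable[[Vector], Vector],   # fixed, pre-existing face generator G(.)
    D: Callable[[Vector], float],    # face discriminator D(.), returns P(real)
    eps: float = 1e-12,
) -> Tuple[Vector, float, float]:
    """Return (z0, discriminator loss, encoder loss) for one sample x.

    Assumption: a standard non-saturating GAN loss; the source does not
    state which adversarial objective is used.
    """
    z0 = E(x)            # initial latent space code
    x_rec = G(z0)        # reconstruction through the generator
    p_real = D(x)
    p_fake = D(x_rec)
    d_loss = -math.log(p_real + eps) - math.log(1.0 - p_fake + eps)
    e_loss = -math.log(p_fake + eps)
    return z0, d_loss, e_loss


# Hypothetical toy stand-ins so the sketch runs without any ML library.
def toy_encoder(x: Vector) -> Vector:
    # Average adjacent pairs: 4 "pixels" -> 2 latent values.
    return [(x[i] + x[i + 1]) / 2 for i in range(0, len(x), 2)]


def toy_generator(z: Vector) -> Vector:
    # Repeat each latent value twice: 2 latent values -> 4 "pixels".
    return [v for v in z for _ in range(2)]


def toy_discriminator(x: Vector) -> float:
    # Logistic score of the mean value, always in (0, 1).
    return 1.0 / (1.0 + math.exp(-sum(x) / len(x)))


x = [0.2, 0.4, 0.6, 0.8]
z0, d_loss, e_loss = adversarial_losses(x, toy_encoder, toy_generator, toy_discriminator)
print(z0, d_loss, e_loss)
```

In an actual training loop the two losses would be minimized alternately with respect to the discriminator and encoder parameters while G(·) stays fixed; the fine-tuning that follows is elided in the excerpt and is not sketched here.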

Embodiment 2

[0101] The invention discloses a DeepFake defense system based on visual adversarial reconstruction. Figure 7 is a structural diagram of the DeepFake defense system based on visual adversarial reconstruction according to an embodiment of the present invention. As shown in Figure 7, the system 100 includes:

[0102] The first processing module 101 is configured to prepare a face data set; a face generator, denoted G(·); and a target DeepFake model, denoted F(·). The face generator and the target DeepFake model are existing networks with known structures;

[0103] The second processing module 102 is configured to design a face encoder, denoted E(·), and a face discriminator, denoted D(·); use the samples x in the face data set to adversarially train the face encoder and the face discriminator; apply the adversarially trained face encoder to obtain the initial latent space code z_0; fine-tune the adversarially trained face encoder with the initial latent space code...
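One way to picture the modular structure of system 100 is as numbered processing modules that run in order over shared state. The sketch below is only an illustration under that assumption: the class names `ProcessingModule` and `DefenseSystem`, the dictionary-based state, and the string placeholders standing in for the data set and networks are hypothetical and do not come from the patent.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List

State = Dict[str, Any]


@dataclass
class ProcessingModule:
    number: int                    # reference numeral, e.g. 101 or 102
    run: Callable[[State], State]  # hypothetical: transforms the shared state


@dataclass
class DefenseSystem:
    modules: List[ProcessingModule] = field(default_factory=list)

    def execute(self, state: State) -> State:
        # Run the modules in ascending order of their reference numerals.
        for module in sorted(self.modules, key=lambda m: m.number):
            state = module.run(state)
        return state


def prepare(state: State) -> State:
    # Module 101: provide the data set, G(.) and F(.) (placeholders here).
    return {**state, "dataset": ["x1", "x2"], "G": "generator", "F": "deepfake"}


def encode(state: State) -> State:
    # Module 102: obtain an initial latent code per sample (placeholder strings).
    return {**state, "z0": [f"E({x})" for x in state["dataset"]]}


system = DefenseSystem([ProcessingModule(102, encode), ProcessingModule(101, prepare)])
result = system.execute({})
print(result["z0"])
```

Sorting by reference numeral means module 102 always sees the data set prepared by module 101, regardless of the order in which the modules were registered.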

Embodiment 3

[0129] The present invention discloses an electronic device. The electronic device includes a memory and a processor. The memory stores a computer program. When the processor executes the computer program, the steps of the DeepFake defense method based on visual adversarial reconstruction in any one of the disclosed embodiments of the present invention are implemented.

[0130] Figure 8 is a structural diagram of an electronic device according to an embodiment of the present invention. As shown in Figure 8, the electronic device includes a processor, a memory, a communication interface, a display screen, and an input device connected through a system bus. The processor of the electronic device provides computing and control capabilities. The memory of the electronic device includes a non-volatile storage medium and an internal memory. The non-volatile storage medium stores an operating system and computer programs. The internal ...

