Image text matching method based on multi-relation perceptual reasoning

An image-text matching method and technology, applied in reasoning methods, still image data retrieval, and metadata-based still image retrieval, that can solve problems such as the inability to resolve polysemous words.

Embodiment Construction

[0026] The present invention is further described below with reference to the accompanying drawings:

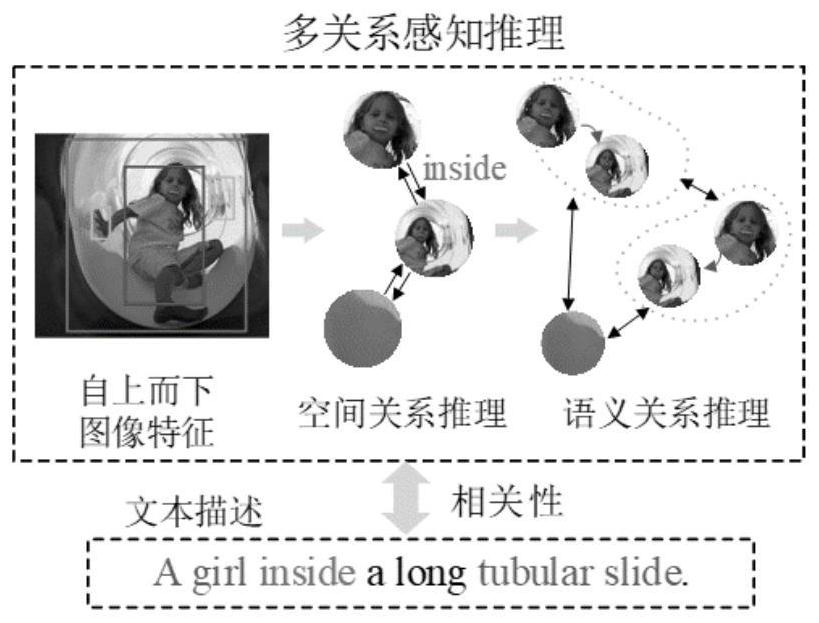

[0027] Figure 1 is a schematic diagram of the multi-relation perceptual reasoning module proposed by the present invention. The module consists of spatial relation reasoning and semantic relation reasoning, which respectively capture the spatial positional relationships between image regions and the semantic relationships between objects. These visual relation features characterize finer-grained image content and therefore provide a complete scene interpretation, which facilitates matching against complex textual semantic representations. To verify the soundness of the proposed multi-relation perceptual reasoning module, single-relation reasoning and multi-relation reasoning were each tested, and the results are shown in Table 1:

[0028] Table 1

[0029]

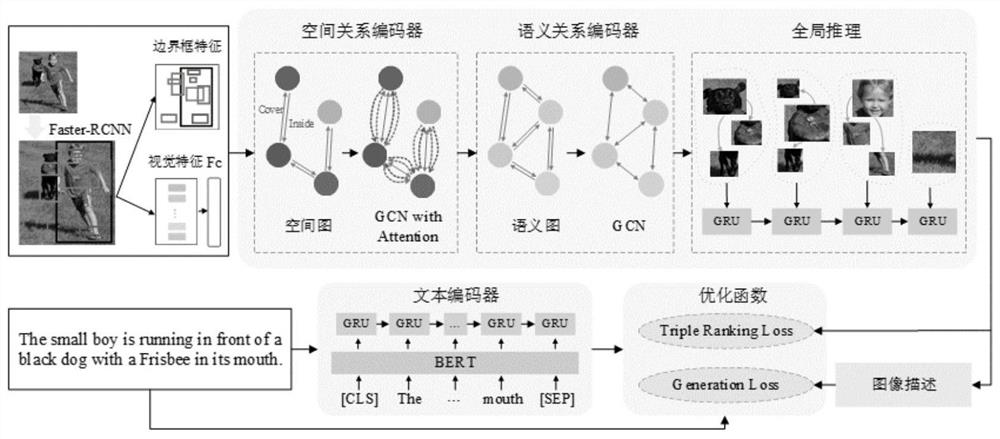
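The text above does not specify how either relation branch is computed, so the following is only a minimal sketch under stated assumptions, not the invention's actual design. It assumes a common graph-reasoning pattern: the spatial branch builds a region graph whose edge weights come from a softmax over negative distances between box centres, the semantic branch builds a graph from scaled dot-product affinity between region features, each graph drives one residual message-passing step, and the enabled branches are averaged. All names (`spatial_graph`, `semantic_graph`, `propagate`, `relation_reasoning`, `image_text_score`), the `(x1, y1, x2, y2)` box format, mean pooling, and cosine scoring are hypothetical. The `use_spatial` / `use_semantic` flags loosely mirror the single-relation versus multi-relation comparison reported in Table 1.

```python
# Hypothetical sketch of spatial + semantic relation reasoning over image regions.
# None of these functions or formulas come from the patent text.
import math
from typing import List, Sequence, Tuple

Vector = List[float]
Box = Tuple[float, float, float, float]  # assumed (x1, y1, x2, y2) format


def row_softmax(scores: List[List[float]]) -> List[List[float]]:
    """Turn each row of a score matrix into weights that sum to 1."""
    weights = []
    for row in scores:
        peak = max(row)  # subtract the row max for numerical stability
        exps = [math.exp(s - peak) for s in row]
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


def spatial_graph(boxes: Sequence[Box]) -> List[List[float]]:
    """Assumed spatial relation graph: nearer region centres get larger edge weights."""
    centres = [((x1 + x2) / 2, (y1 + y2) / 2) for x1, y1, x2, y2 in boxes]
    scores = [[-math.dist(ci, cj) for cj in centres] for ci in centres]
    return row_softmax(scores)


def semantic_graph(feats: Sequence[Vector]) -> List[List[float]]:
    """Assumed semantic relation graph: scaled dot-product affinity between regions."""
    scale = math.sqrt(len(feats[0]))
    scores = [[sum(a * b for a, b in zip(fi, fj)) / scale for fj in feats] for fi in feats]
    return row_softmax(scores)


def propagate(graph: List[List[float]], feats: Sequence[Vector]) -> List[Vector]:
    """One residual message-passing step: h_i + sum_j A_ij * h_j."""
    n, dim = len(feats), len(feats[0])
    out = []
    for i, row in enumerate(graph):
        msg = [sum(row[j] * feats[j][k] for j in range(n)) for k in range(dim)]
        out.append([feats[i][k] + msg[k] for k in range(dim)])
    return out


def relation_reasoning(feats: Sequence[Vector], boxes: Sequence[Box],
                       use_spatial: bool = True, use_semantic: bool = True) -> List[Vector]:
    """Average the enabled relation branches (single- vs multi-relation)."""
    branches = []
    if use_spatial:
        branches.append(propagate(spatial_graph(boxes), feats))
    if use_semantic:
        branches.append(propagate(semantic_graph(feats), feats))
    if not branches:
        raise ValueError("enable at least one relation branch")
    n, dim = len(feats), len(feats[0])
    return [[sum(branch[i][k] for branch in branches) / len(branches) for k in range(dim)]
            for i in range(n)]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def image_text_score(feats, boxes, text_vec: Vector, **flags) -> float:
    """Mean-pool relation-enhanced regions and compare with a text embedding."""
    regions = relation_reasoning(feats, boxes, **flags)
    pooled = [sum(col) / len(regions) for col in zip(*regions)]
    return cosine(pooled, text_vec)


if __name__ == "__main__":
    feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # toy region features
    boxes = [(0, 0, 10, 10), (5, 5, 15, 15), (50, 50, 60, 60)]
    text_vec = [1.0, 0.2]  # toy sentence embedding
    for name, flags in [("spatial only", {"use_semantic": False}),
                        ("semantic only", {"use_spatial": False}),
                        ("multi-relation", {})]:
        print(name, round(image_text_score(feats, boxes, text_vec, **flags), 3))
```

Averaging is used here only because it is the simplest fusion that lets either branch be switched off for a single-relation run; a learned gate or concatenation followed by a projection would be equally plausible assumptions.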

[0030] Figure 2 is a structural diag...