Text-to-image generation method based on perceptual joint spatial attention

A technology for image generation and attention, applied in image data processing, neural learning methods, 2D image generation, and related fields. It addresses two problems: existing methods cannot focus on and refine all objects well, and the quality of the generated results is inaccurate. It improves perceptual quality and layout and reduces variance.


Embodiment Construction

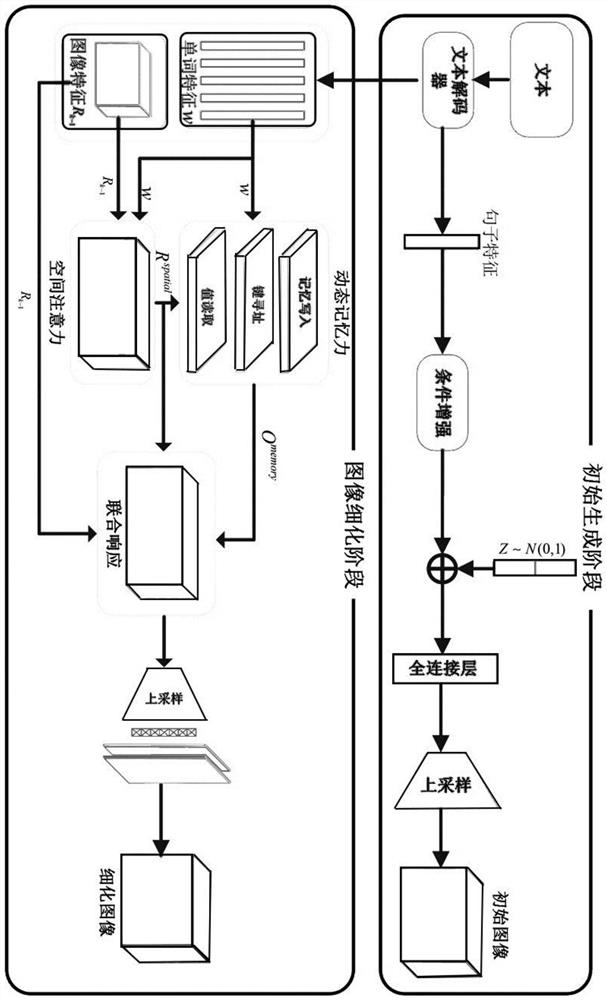

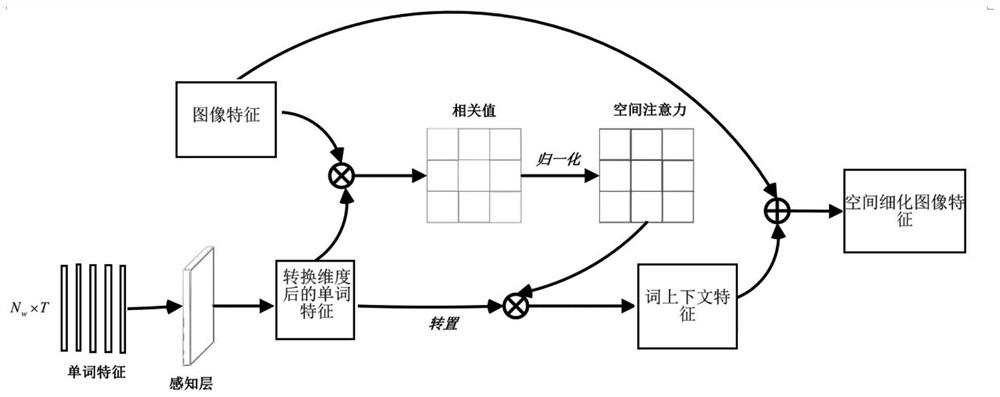

[0085] The present invention proposes a text-to-image generation method based on perceptual joint spatial attention. The method is built on a multi-stage generative adversarial network and aims to improve the perceptual quality and layout of images generated from text. It rests on a dual-attention mechanism: word-level spatial attention is combined with a dynamic memory mechanism, and the two respond jointly so that the generator focuses on the content, location, and shape of the image sub-region corresponding to the most relevant word. In addition, the method introduces a perceptual loss function for the last generator of the multi-stage text-to-image model. This loss reduces the difference between the final generated image and the target image, so that the generated image is semantically closer to the target image.
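The three ingredients named above (word-level spatial attention, dynamic memory, and a perceptual loss on the last generator) can be illustrated with the minimal pure-Python sketch below. It is not the patented implementation, and it rests on stated assumptions. The attention takes the common word-context form, in which each image sub-region weights every word by a softmax over dot products. The memory follows a write-gate plus key/value-read pattern with identity projections. The way the two "respond jointly" (averaging their outputs, then gating against the original region feature) is a hypothetical choice, because the excerpt does not specify it. The `features` callable stands in for whatever feature extractor the perceptual loss uses; a pretrained CNN is a common choice, but the source does not say which one. All function and parameter names (`a`, `b`, `gate_w`, `gate_b`, `features`) are hypothetical.

```python
import math
from typing import Callable, List

Vec = List[float]


def dot(a: Vec, b: Vec) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: Vec) -> Vec:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x: float) -> float:
    # Branch on sign so math.exp never overflows.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def weighted_sum(weights: Vec, vectors: List[Vec]) -> Vec:
    return [sum(w * v[k] for w, v in zip(weights, vectors)) for k in range(len(vectors[0]))]


def word_spatial_attention(regions: List[Vec], words: List[Vec]) -> List[Vec]:
    """Region j attends over words: beta_ji = softmax_i(r_j . w_i), c_j = sum_i beta_ji * w_i."""
    return [weighted_sum(softmax([dot(r, w) for w in words]), words) for r in regions]


def dynamic_memory_read(regions: List[Vec], words: List[Vec], a: Vec, b: Vec) -> List[Vec]:
    """Hypothetical memory: gated write of each word, then key addressing and value read."""
    dim = len(regions[0])
    r_mean = [sum(r[k] for r in regions) / len(regions) for k in range(dim)]
    memory = []
    for w in words:
        g = sigmoid(dot(a, w) + dot(b, r_mean))  # write gate mixes word and mean image feature
        memory.append([g * wk + (1.0 - g) * rk for wk, rk in zip(w, r_mean)])
    # Identity key/value projections (assumption): address slots by m_i . r_j.
    return [weighted_sum(softmax([dot(m, r) for m in memory]), memory) for r in regions]


def joint_response(regions: List[Vec], words: List[Vec], a: Vec, b: Vec,
                   gate_w: Vec, gate_b: float) -> List[Vec]:
    """Hypothetical fusion: average both outputs, then a response gate on [fused; r_j]."""
    contexts = word_spatial_attention(regions, words)
    reads = dynamic_memory_read(regions, words, a, b)
    refined = []
    for r, c, o in zip(regions, contexts, reads):
        fused = [(ck + ok) / 2.0 for ck, ok in zip(c, o)]
        g = sigmoid(dot(gate_w, fused + r) + gate_b)  # gate_w has length 2 * dim
        refined.append([g * fk + (1.0 - g) * rk for fk, rk in zip(fused, r)])
    return refined


def perceptual_loss(fake: Vec, real: Vec, features: Callable[[Vec], Vec]) -> float:
    """Mean squared distance between feature maps of the generated and target images."""
    f_fake, f_real = features(fake), features(real)
    return sum((x - y) ** 2 for x, y in zip(f_fake, f_real)) / len(f_fake)


if __name__ == "__main__":
    regions = [[1.0, 0.0], [0.0, 1.0]]              # two toy sub-region features
    words = [[2.0, 0.0], [0.0, 2.0], [1.0, 1.0]]    # three toy word features
    refined = joint_response(regions, words, a=[0.1, 0.1], b=[0.1, 0.1],
                             gate_w=[0.5] * 4, gate_b=0.0)
    print(len(refined), len(refined[0]))  # 2 2
    print(perceptual_loss([1.0, 2.0], [1.0, 0.0], features=lambda x: x))  # 2.0
```

Averaging before the response gate keeps the sketch small. A learned projection over the concatenated attention and memory outputs would be an equally plausible reading of "jointly responding".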

[0086] In order to achieve the above goals, the following solutions are ad...
