Scene detection benchmark model: training method, training system, and application method

A scene detection benchmark model technique for remote sensing image processing. It addresses problems such as missing semantic concept definitions, small numbers of training samples, and significant differences, and achieves full-coverage supervision.

Examples

Embodiment 1

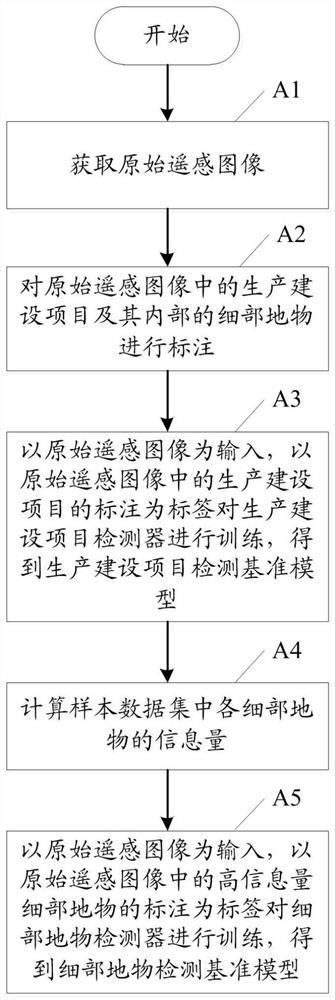

[0044] Referring to Figure 1, the invention provides a training method for a scene detection benchmark model. The training method comprises the following steps:

[0045] A1: Obtain the original remote sensing image;

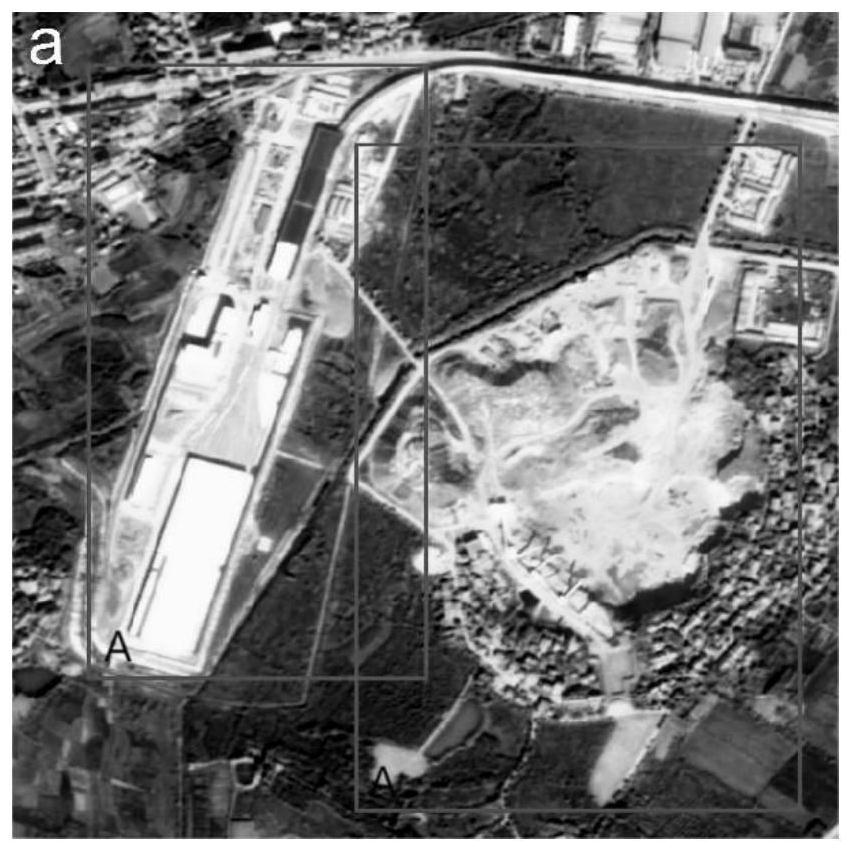

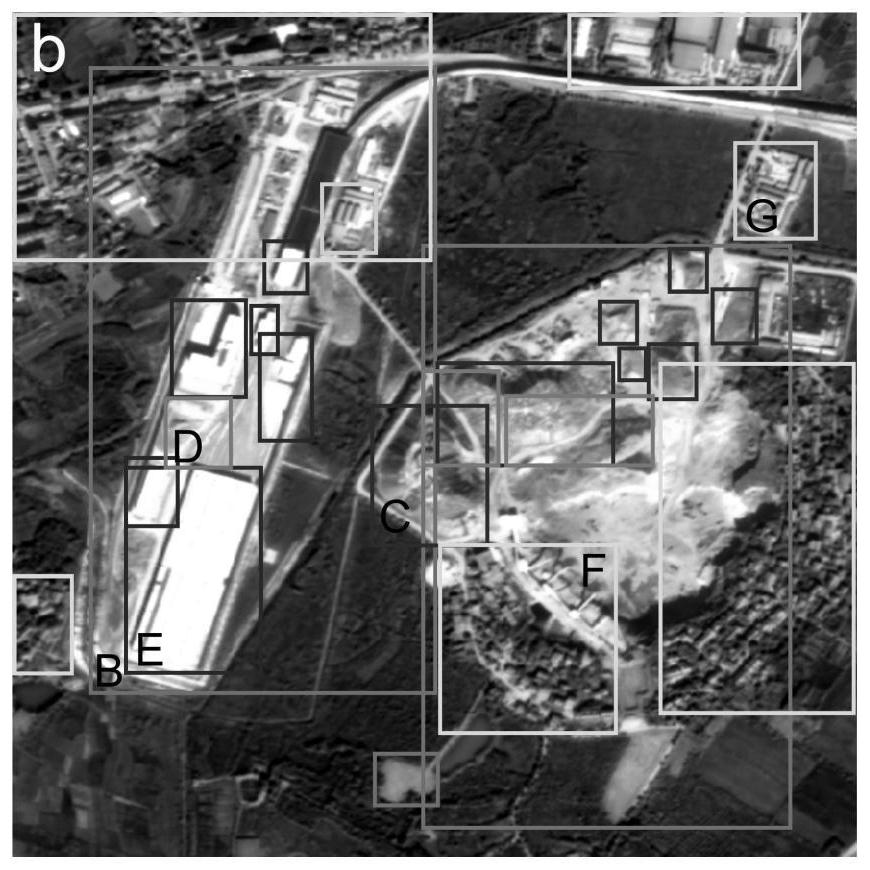

[0046] A2: Annotate the production and construction projects in the original remote sensing image, together with the detailed features inside them; each annotation is a candidate frame;

[0047] A3: Using the original remote sensing image as input and the annotations of the production and construction projects as labels, train the production and construction project detector to obtain the production and construction project detection benchmark model; the detector is a Faster RCNN detection network;

[0048] A4: Calculate the amount of information of each detailed feature in the sample data set;

[0049] A5: Take the original remote sensing image as input, and use the labeling of the high-information det...
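Steps A2 and A4 can be illustrated with a minimal sketch. The excerpt does not give the formula for the "amount of information", so the code below substitutes Shannon self-information of each class's frequency in the sample data set as a stand-in; that choice, the `CandidateFrame` layout, the function names, and the threshold are all assumptions made for illustration, not the patent's definitions.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateFrame:
    """One annotation from step A2: an axis-aligned box plus a class label.

    Hypothetical layout; the source only says annotations are candidate frames.
    """
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    label: str


def information_by_class(frames):
    """Return -log2(p) per class, where p is the class's share of all frames.

    ASSUMPTION: self-information is used here only as a placeholder measure;
    the patent's own calculation is not shown in this excerpt.
    """
    counts = Counter(frame.label for frame in frames)
    total = sum(counts.values())
    return {label: -math.log2(n / total) for label, n in counts.items()}


def high_information_classes(info, threshold_bits):
    """Keep classes whose information is at least the (hypothetical) threshold."""
    return sorted(label for label, bits in info.items() if bits >= threshold_bits)


# Toy sample set: four detail-feature annotations with made-up class names.
frames = [
    CandidateFrame(0, 0, 10, 10, "bare_soil"),
    CandidateFrame(5, 5, 20, 20, "bare_soil"),
    CandidateFrame(30, 30, 40, 40, "machinery"),
    CandidateFrame(50, 50, 60, 60, "spoil_heap"),
]
info = information_by_class(frames)
selected = high_information_classes(info, threshold_bits=1.5)
```

Under this stand-in measure, rarer classes score higher, so only the classes that pass the threshold would supply labels for the detailed-feature detector in step A5.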

Embodiment 2

[0070] Referring to Figure 4, the present invention provides an application method for the scene detection benchmark model. The application method comprises the following steps:

[0071] B1: Acquire remote sensing images;

[0072] B2: Input the remote sensing images into the production and construction project detection benchmark model and the detailed ground object detection benchmark model respectively, obtaining preliminary detection results for the actual production and construction projects and for the actual detailed ground objects. Both models are the scene detection benchmark models obtained through the training in Embodiment 1. The preliminary detection results for the actual production and construction projects are several candidate frames for actual production and construction projects and their corresponding confiden...
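Step B2 can be sketched as running two independent detectors on the same image and collecting their candidate frames with confidences. In this minimal sketch the `Detection` type, the `preliminary_detect` function, and the stub detectors are hypothetical stand-ins; in practice each detector would be a trained model from Embodiment 1.

```python
from typing import Callable, Dict, List, NamedTuple, Tuple


class Detection(NamedTuple):
    """A candidate frame (x_min, y_min, x_max, y_max) with its confidence."""
    box: Tuple[float, float, float, float]
    confidence: float


# A detector is modeled as any callable mapping an image to detections.
Detector = Callable[[object], List[Detection]]


def preliminary_detect(
    image: object, project_model: Detector, detail_model: Detector
) -> Dict[str, List[Detection]]:
    """Run both benchmark models on one image and return both result sets,
    each sorted by descending confidence."""
    def by_confidence(detections: List[Detection]) -> List[Detection]:
        return sorted(detections, key=lambda d: d.confidence, reverse=True)

    return {
        "projects": by_confidence(project_model(image)),
        "details": by_confidence(detail_model(image)),
    }


# Stub detectors returning fixed, made-up outputs for illustration only.
def stub_project_model(image: object) -> List[Detection]:
    return [Detection((0, 0, 100, 100), 0.6), Detection((20, 20, 80, 80), 0.9)]


def stub_detail_model(image: object) -> List[Detection]:
    return [Detection((30, 30, 40, 40), 0.7)]


results = preliminary_detect("image-placeholder", stub_project_model, stub_detail_model)
```

Keeping the two result sets separate mirrors the text, which treats the project-level and detail-level preliminary detections as distinct outputs.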

Embodiment 3

[0125] Referring to Figure 8, the present invention provides a training system for the scene detection benchmark model. The training system comprises:

[0126] The original remote sensing image acquisition module 1 is used to acquire the original remote sensing image;

[0127] Annotation module 2, configured to annotate the production and construction projects in the original remote sensing images and the detailed features inside them, the annotations being candidate frames;

[0128] The production and construction project detection benchmark model training module 3, configured to use the original remote sensing image as input and the annotations of the production and construction projects in it as labels to train the production and construction project detector, obtaining the production and construction project detection benchmark model; the detector is a Faster RCNN detection network;

[0129] The amount of information calculat...
