GPU data processing system based on multi-input single-output FIFO structure

A GPU data processing system based on a multi-input, single-output FIFO structure, applied in the field of GPU data processing. It addresses problems such as blockage of parallel output channels, the inability to input multiple pieces of information in parallel, and the resulting reduction in GPU data processing efficiency. By avoiding this blockage, it improves data acquisition efficiency and has broad application value.


Examples

Embodiment 1

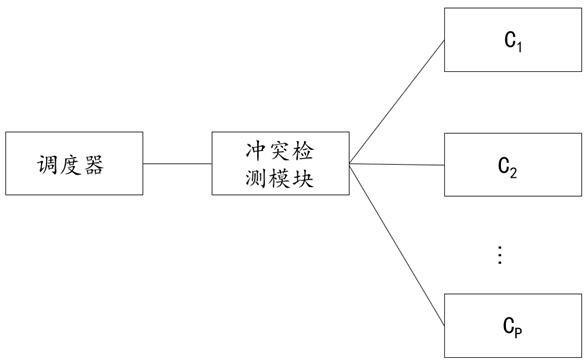

[0015] The allocation unit transmits each second data acquisition request to the corresponding cache memory based on the cache memory identification information in that request. After passing through the allocation unit, the N second data acquisition requests are divided into P paths.
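The routing described in [0015] can be modeled in software as follows. This is only a minimal sketch: the request layout (a dictionary with a `cache_id` field), the function name `allocate`, and the use of zero-based cache identifiers are assumptions made for illustration and are not taken from the patent text.

```python
def allocate(requests, num_caches):
    """Divide requests into num_caches paths, keyed by each request's cache id.

    Assumption: every request is a dict carrying a zero-based "cache_id"
    field that stands in for the cache memory identification information.
    """
    paths = [[] for _ in range(num_caches)]
    for req in requests:
        cache_id = req["cache_id"]
        if not 0 <= cache_id < num_caches:
            raise ValueError(f"unknown cache id: {cache_id}")
        # Requests keep their arrival order within each path.
        paths[cache_id].append(req)
    return paths


# Hypothetical example: N = 5 requests spread over P = 4 caches.
example_requests = [
    {"cache_id": 2, "addr": 0x8010},
    {"cache_id": 0, "addr": 0x0040},
    {"cache_id": 2, "addr": 0x8020},
    {"cache_id": 3, "addr": 0xC000},
    {"cache_id": 0, "addr": 0x0080},
]
example_paths = allocate(example_requests, 4)
```

In this model a path may be empty in a given cycle (path 1 above), which matches the idea that N requests are spread over P paths rather than each path always receiving one.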

[0016] As an embodiment, each of the P cache memories corresponds to one physical address storage interval and obtains from memory the data for physical addresses within that interval; the P physical address storage intervals do not overlap. Because each cache memory corresponds to a known physical address storage interval, the upstream device can directly specify the corresponding cache memory when sending the first data acquisition request. Each of the physical address storage intervals includes a plurality of...
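One way an upstream device could pick the cache memory from a physical address is a lookup over the non-overlapping intervals. The sketch below is illustrative only: it assumes P = 4, assumes each cache owns a single contiguous range, and uses made-up boundaries (`INTERVAL_STARTS`, `ADDRESS_LIMIT`); the source does not give the actual layout of the intervals.

```python
import bisect

# Hypothetical layout: 4 caches, each owning one contiguous address range.
# Cache i owns [INTERVAL_STARTS[i], INTERVAL_STARTS[i + 1]); the last cache
# owns everything up to ADDRESS_LIMIT (exclusive).
INTERVAL_STARTS = [0x0000, 0x4000, 0x8000, 0xC000]
ADDRESS_LIMIT = 0x10000


def cache_for_address(addr):
    """Return the zero-based index of the cache whose interval contains addr."""
    if not 0 <= addr < ADDRESS_LIMIT:
        raise ValueError(f"address out of range: {addr:#x}")
    # bisect_right counts the interval starts that are <= addr, so
    # subtracting one gives the index of the interval that owns addr.
    return bisect.bisect_right(INTERVAL_STARTS, addr) - 1
```

Because the intervals do not overlap, every valid address resolves to exactly one cache, so the identifier can be attached to the request before it reaches the allocation unit.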

Embodiment 2

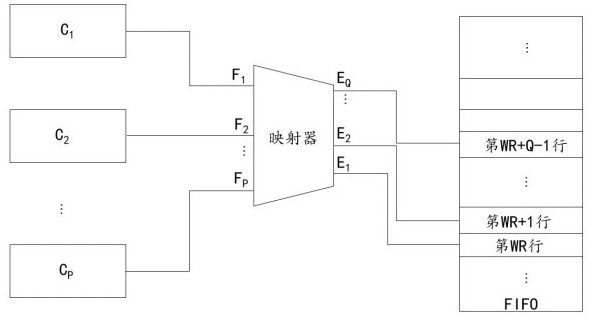

[0029] As a specific example, assume P=4 and that input ports F₂ and F₄ each receive a third data acquisition request. The request on F₂ is mapped to output port E₁, and the request on F₄ is mapped to output port E₂. Output ports E₁ and E₂ then store the two requests into the FIFO in parallel: E₁ stores the request from F₂ in line WR of the FIFO, and E₂ stores the request from F₄ in line WR+1.
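The example in [0029] can be reproduced with a small software model. This is a sketch under stated assumptions, not the patented circuit: the class name `MultiInputFifo`, the circular wrap-around of the write pointer, the advance of WR by the number of requests written, and the overflow check are all illustrative choices that the source text does not specify. In hardware the writes would happen in the same cycle; the loop here only models which line each output port targets.

```python
class MultiInputFifo:
    """Software model of a FIFO with P input ports and one output port."""

    def __init__(self, depth):
        self.lines = [None] * depth
        self.wr = 0      # write pointer: next free line
        self.rd = 0      # read pointer: oldest stored line
        self.count = 0   # number of occupied lines

    def write_parallel(self, inputs):
        """Store all requests present on the input ports in one step.

        inputs has one slot per input port (F1..FP); None means the port
        has no request this cycle. Returns the number of requests stored.
        """
        # Mapper: compact the active input ports, in port order, onto
        # output ports E1..EQ.
        active = [req for req in inputs if req is not None]
        depth = len(self.lines)
        if self.count + len(active) > depth:
            raise OverflowError("not enough free lines in the FIFO")
        # Output port E(k+1) writes line WR + k (wrap-around is an assumption).
        for k, req in enumerate(active):
            self.lines[(self.wr + k) % depth] = req
        self.wr = (self.wr + len(active)) % depth
        self.count += len(active)
        return len(active)

    def read(self):
        """Single output port: return the oldest stored request."""
        if self.count == 0:
            raise IndexError("FIFO is empty")
        req = self.lines[self.rd]
        self.rd = (self.rd + 1) % len(self.lines)
        self.count -= 1
        return req


# P = 4, with requests present only on input ports F2 and F4.
fifo = MultiInputFifo(depth=8)
stored = fifo.write_parallel([None, "req_F2", None, "req_F4"])
```

After this call, line WR (line 0 here) holds the request from F2 and line WR+1 holds the request from F4, and the write pointer has moved past both.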

[0030] In Embodiment 2 of the present invention, the mapper, the multi-input single-output FIFO, and the write pointer together allow Q third data acquisition requests processed in parallel to be written into the FIFO in parallel. This avoids blockage of any third data acquisition request channel and improves the data acquisition effi...

