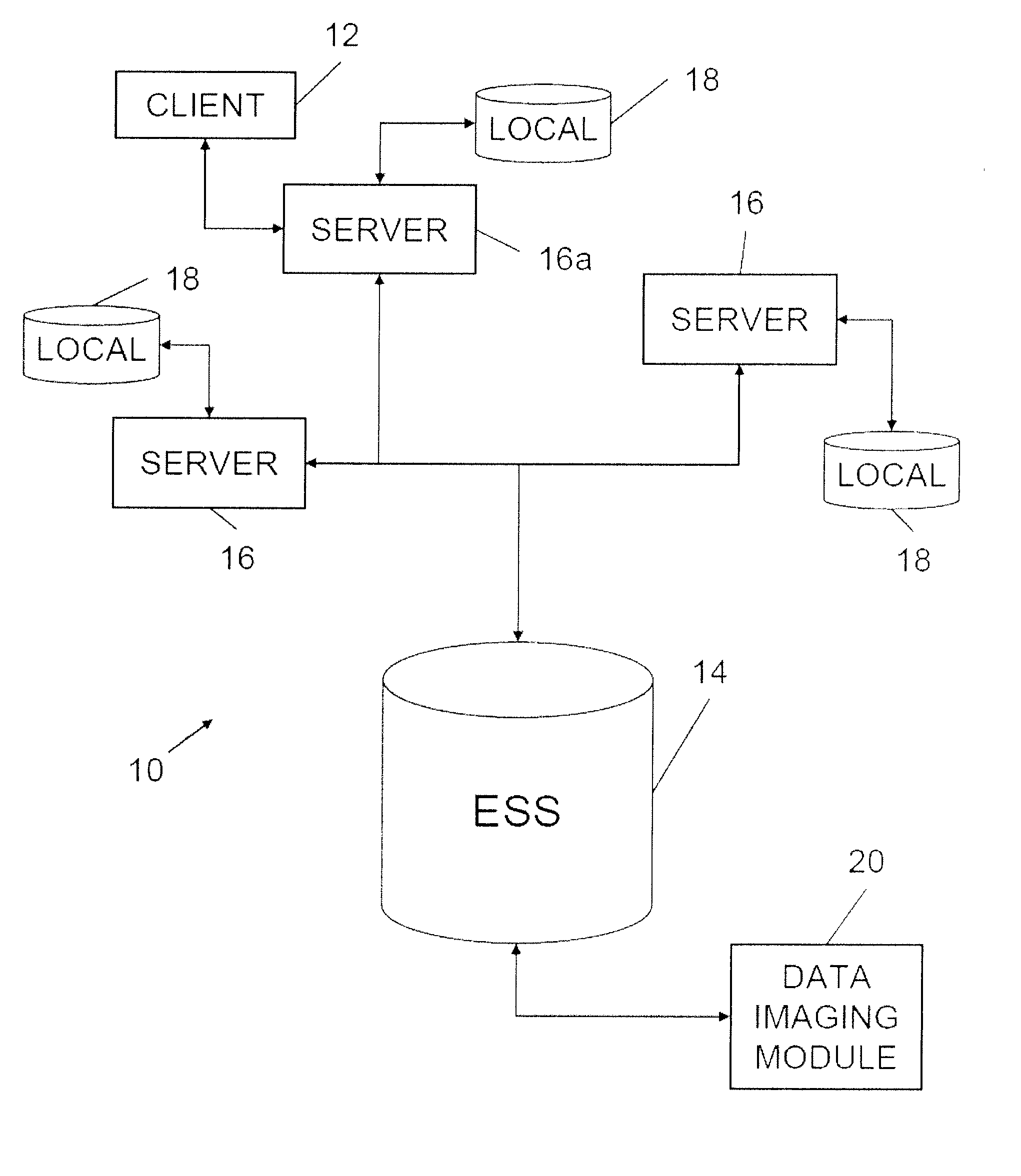

[0005]One aspect of the invention relates to systems and methods that seek to optimize (or at least enhance) data throughput in data warehousing environments by connecting multiple servers having local storages with a designated ESS, such as, for example, a SAN. According to another aspect of the invention, the systems and methods preserve a full reference copy of the data in a protected environment (e.g., on the ESS) that is fully available. According to another aspect of the invention, the systems and methods maximize (or at least significantly enhance) overall IO potential performance and reliability for efficient and reliable system resource utilization.

[0006]Other aspects and advantages of the invention include providing a reliable data environment in a mixed storage configuration, compensating and adjusting for differences in disk (transfer) speed between mixed storage components to sustain high throughput, supporting different disk sizes on server configurations, supporting high performance FR and DR in a mixed storage configuration, supporting dynamic reprovisioning as servers are added to and removed from the system configuration and supporting database clustering in which multiple servers are partitioned within the system to support separate databases, applications or user groups, and / or other enhancements. Servers within the data warehousing environment may be managed in an autonomous, or semi-autonomous, manner, thereby alleviating the need for a sophisticated central management system.

[0008]The ESS may hold a copy of the entire database. This copy may be kept current in real-time, or near real-time. As such, the copy of the database held by the ESS may be used as a full reference copy for FR or DR on portions of the database stored within the local storage of individual servers. Since the copy of the ESS is kept continuously (or substantially so) current, “snapshots” of the database may be captured without temporarily isolating the ESS artificially from the servers to provide a quiescent copy of the database. By virtue of the centralized nature of the ESS, the database copy may be maintained with relatively high security and / or high availability (e.g., due to standard replication and striping policies). In some implementations, the ESS may organize the data stored therein such that data that is accessed more frequently by the servers (e.g., data blocks not stored within the local storages) is stored in such a manner that it can be accessed efficiently (e.g., for sequential read access). In some instances, the ESS may provide a backup copy of portions of the database that are stored locally at the servers.

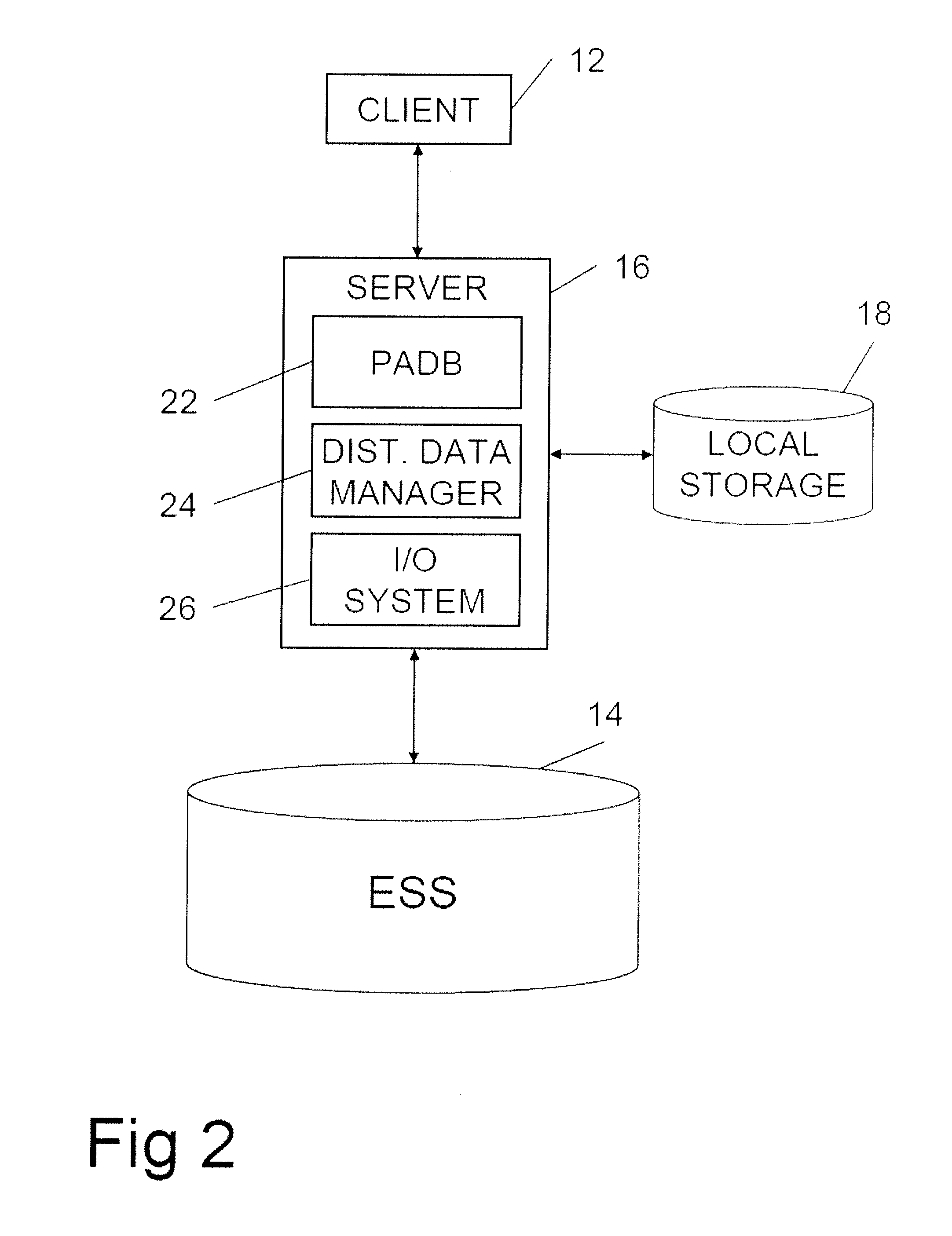

[0010]The servers may form a network of server computer nodes, where one or more leader nodes communicate with the client to acquire queries and deliver data for further processing, such as display, and manages the processing of queries by a plurality of compute node servers. Individual servers process queries in parallel fashion by reading data simultaneously from local storage and the ESS to enhance I / O performance and throughput. The proportions of the data read from local storage and the ESS, respectively, may be a function of (i) data throughput between a given server and the corresponding local storage, and (ii) data throughput between the ESS and the given server. In some implementations, the proportions of the data read out from the separate sources may be determined according to a goal of completing the data read out from the local storage and the data read out from the ESS at approximately the same time. Similarly, the given server may adjust, in an ongoing manner, the portion of the database that is stored in the corresponding local storage in accordance with the relative data throughputs between the server and the local storage and between the server and the ESS (e.g., where the throughput between the server and the local storage is relatively high compared to the throughput between the server and the ESS, the portion of the database stored on the local storage may be adjusted to be relatively large). In some implementations, the individual servers may include one or more of a database engine, a distributed data manager, a I / O system, and / or other components.

[0015]In some embodiments, snapshots of the database may be captured from the ESS. A snapshot may include an image of the database that can be used to restore the database to its current state at a future time. A method of capturing a snapshot of the database may include, monitoring a passage of time since the previous snapshot, if the amount of time since the previous snapshot has breached a predetermined threshold, monitoring the database to determine whether a snapshot can be performed, and performing the snapshot. Determining whether a snapshot can be performed may include determining whether any queries are currently being executed on the database and / or determining whether any queries being executed update the persistent data within the database. This may enhance the capture of snapshots with respect to system in which the database must isolated from queries, updated from temporary data storage, and then imaged to capture a snapshot because snapshots can be captured during ongoing operations at convenient intervals (e.g., when no queries that update the data are be performed).

Login to View More

Login to View More  Login to View More

Login to View More