Monosyllabic language lip-reading recognition system based on visual features

A lip-reading recognition system based on visual features, applied in the field of lip-reading recognition, that achieves diverse samples, rich content and strong practicability.



Embodiment Construction

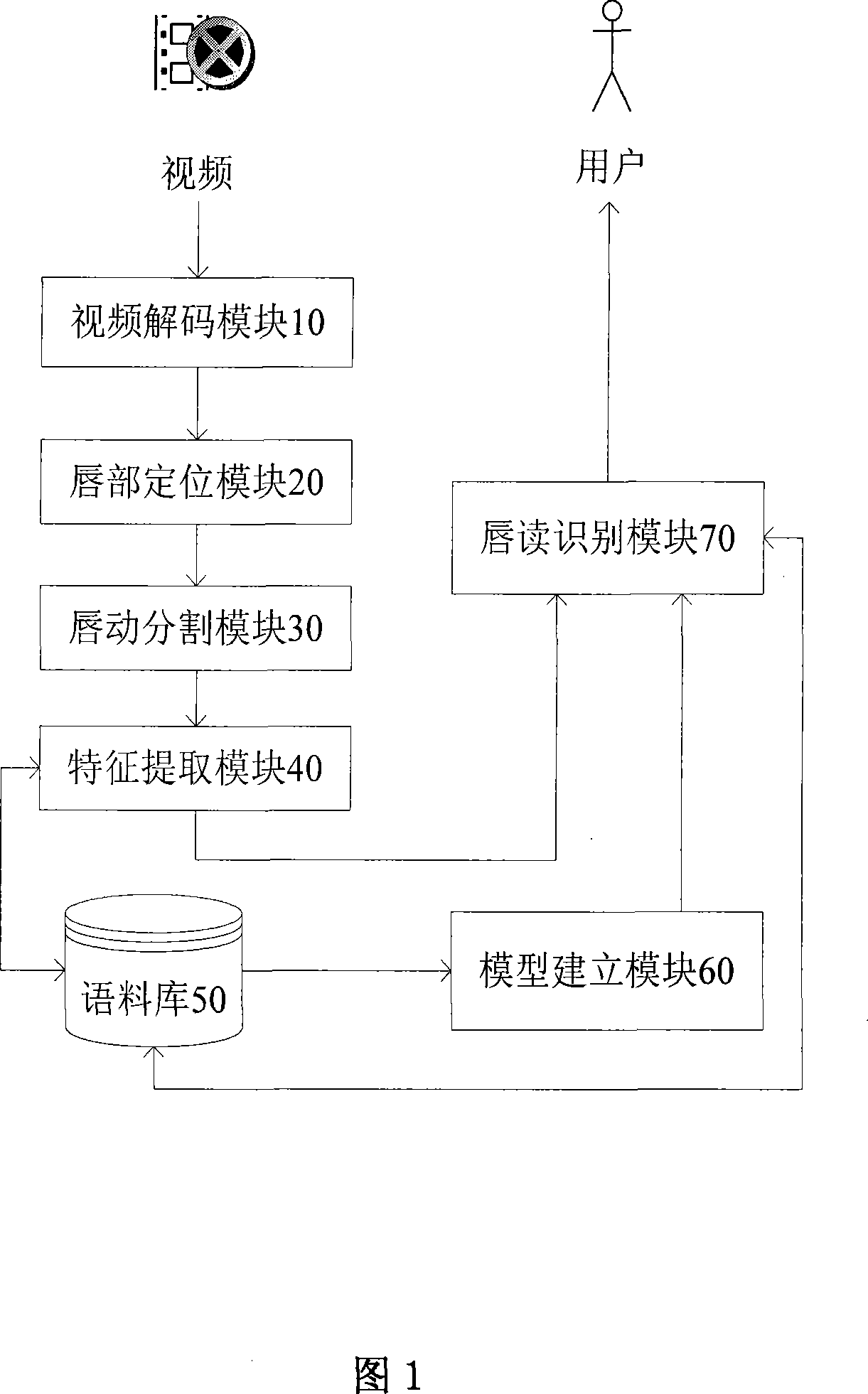

[0050] As shown in FIG. 1, the present invention includes a video decoding module 10, a lip positioning module 20, a lip movement segmentation module 30, a feature extraction module 40, a corpus 50, a model building module 60 and a lip reading recognition module 70.

[0051] The video decoding module 10 accepts a video file or device specified by the user, decodes it, and obtains a sequence of image frames for processing by the present invention.
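The decoding step can be pictured as a generator that yields frames until the source is exhausted. The following is a minimal sketch, not the patent's implementation: it assumes OpenCV's `cv2.VideoCapture` as the decoder (the text names no library), and `iter_frames` and `open_source` are hypothetical helper names introduced for illustration.

```python
from typing import Any, Iterator, Protocol, Tuple, Union


class Capture(Protocol):
    """Minimal interface of a frame source (matches cv2.VideoCapture)."""

    def read(self) -> Tuple[bool, Any]: ...
    def release(self) -> None: ...


def iter_frames(capture: Capture) -> Iterator[Any]:
    """Yield decoded frames in order until the source reports no more frames."""
    try:
        while True:
            ok, frame = capture.read()
            if not ok:  # end of file, or the device stopped delivering frames
                break
            yield frame
    finally:
        capture.release()  # always free the file handle or device


def open_source(source: Union[str, int]) -> Capture:
    """Open a video file (path) or a capture device (integer index).

    Assumption: OpenCV is installed. It is imported lazily so that
    iter_frames stays usable with any object exposing read()/release().
    """
    import cv2  # third-party dependency, not named in the source text

    return cv2.VideoCapture(source)
```

Under these assumptions, `for frame in iter_frames(open_source("speaker.avi")): ...` would walk the image sequence of a file, and passing an integer such as `0` instead of a path would read from a camera; the file name here is purely illustrative.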

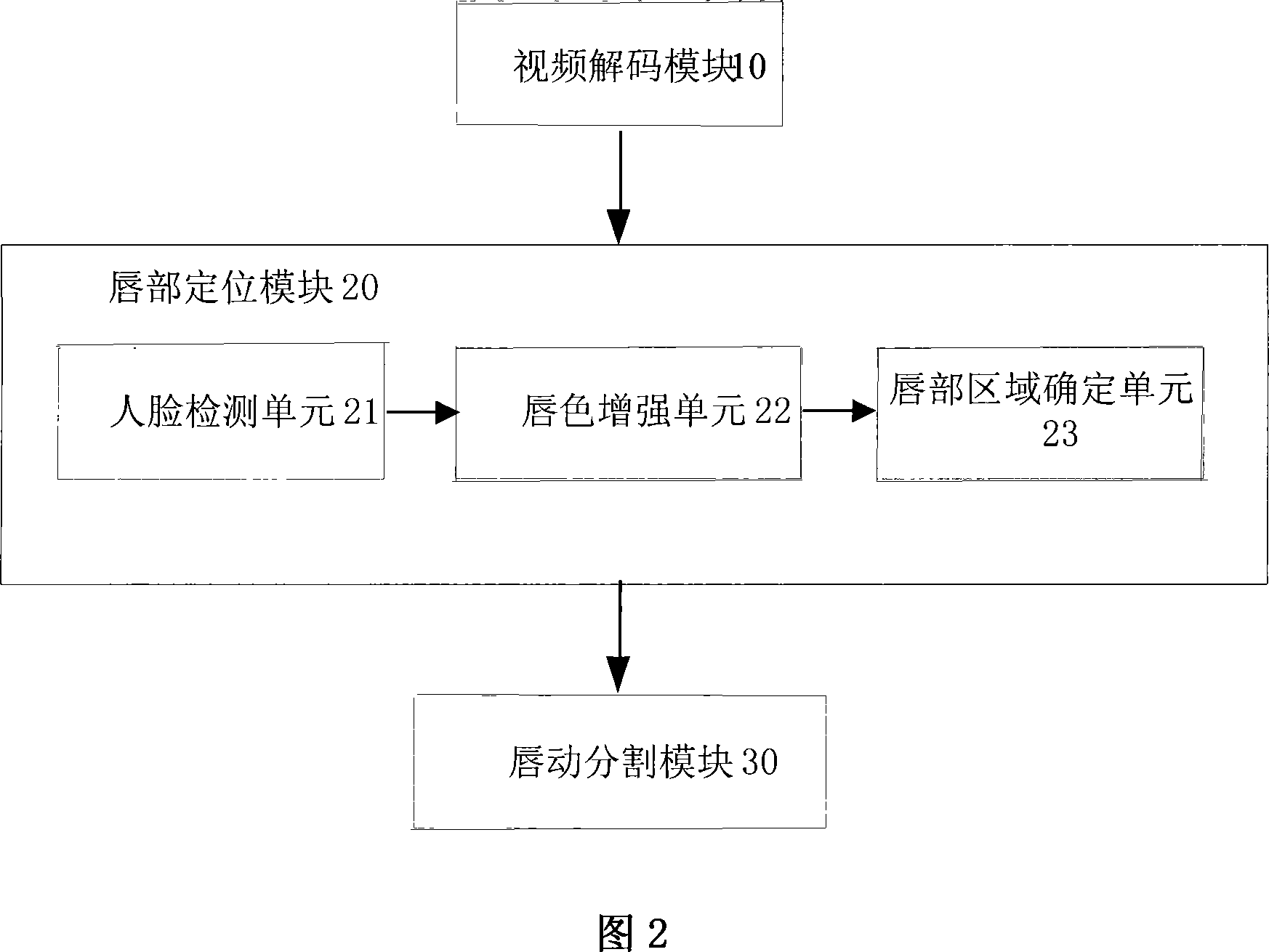

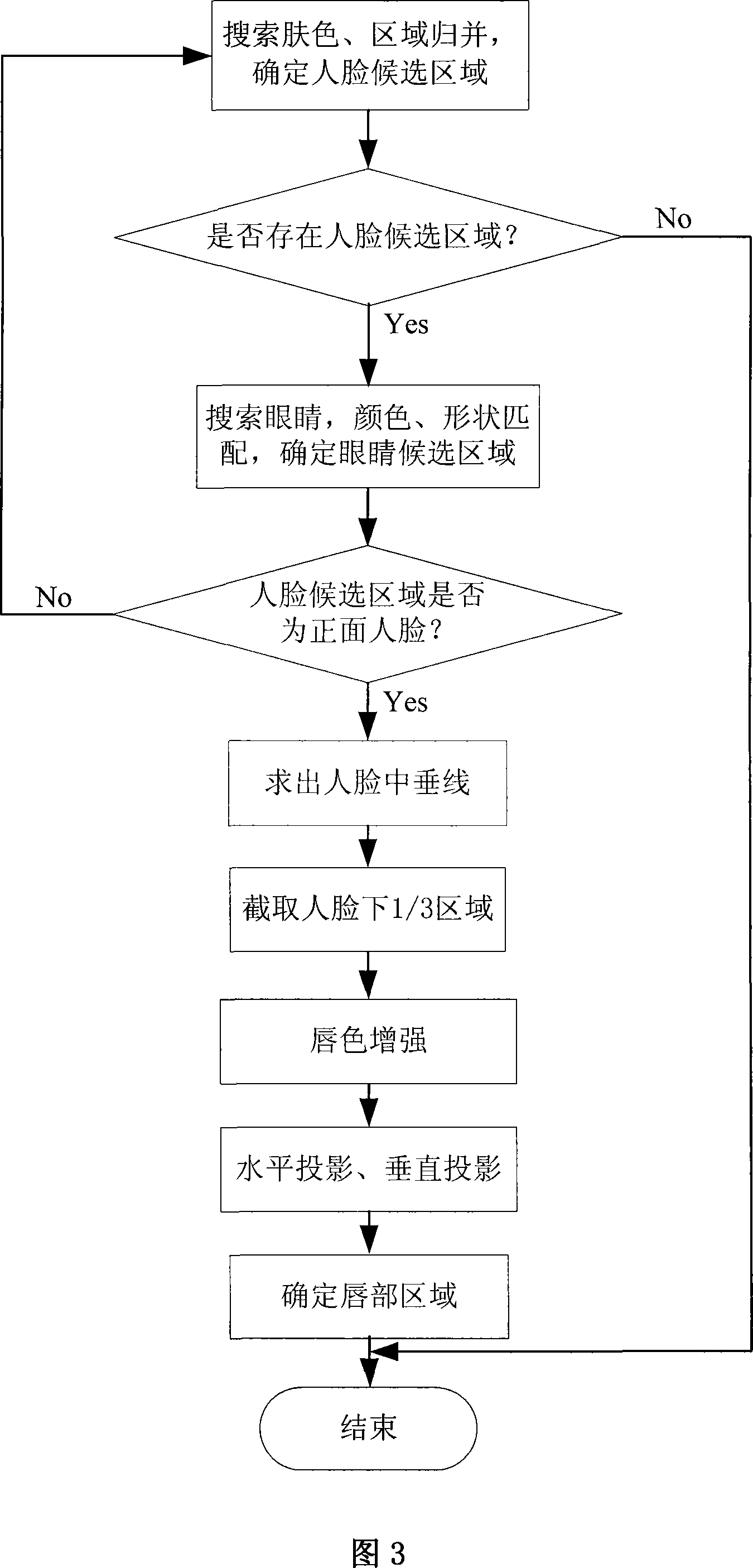

[0052] The lip positioning module 20 analyzes the image frames of the video. It locates the speaker's lips in the frames supplied by the video decoding module 10 and passes this position information to the lip movement segmentation module 30 and the feature extraction module 40. The lip positioning module 20 first obtains a lip position vector with 4 components, each of which is a coordinate in two-dimensional space, representing the left lip corner, right lip corner, upper lip verte...
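The lip position vector described above can be modeled as a small immutable record of four 2D points. This is a sketch under stated assumptions: the visible text names only the left and right corners of the lips and a truncated upper-lip point, so the last two fields carry neutral placeholder names, and the `bounding_box` helper is a hypothetical convenience for downstream cropping that the text does not describe.

```python
from dataclasses import dataclass
from typing import Tuple

Point = Tuple[float, float]  # (x, y) in image coordinates


@dataclass(frozen=True)
class LipPositionVector:
    """Four 2D points locating the lips in one frame."""

    left_corner: Point
    right_corner: Point
    third_point: Point   # upper-lip point; its full name is truncated in the source
    fourth_point: Point  # not named in the visible text; placeholder name

    def components(self) -> Tuple[Point, Point, Point, Point]:
        """Return the four components in a fixed order."""
        return (self.left_corner, self.right_corner,
                self.third_point, self.fourth_point)

    def bounding_box(self) -> Tuple[float, float, float, float]:
        """Hypothetical helper: (x_min, y_min, x_max, y_max) around all points."""
        xs = [p[0] for p in self.components()]
        ys = [p[1] for p in self.components()]
        return (min(xs), min(ys), max(xs), max(ys))


# Example values are made up for illustration only.
vector = LipPositionVector((10.0, 20.0), (50.0, 22.0), (30.0, 10.0), (30.0, 35.0))
print(vector.bounding_box())
```

Keeping the record frozen means the same vector can be handed to both the lip movement segmentation module 30 and the feature extraction module 40 without either side modifying it.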
