Handling cache miss in an instruction crossing a cache line boundary

A cache and memory line technology, applied in memory systems, instruction analysis, concurrent instruction execution, and the like, that can solve problems such as increasing complexity.

Embodiment Construction

[0022] In the following detailed description, numerous specific details are set forth by way of example in order to provide a thorough understanding of the related teachings. However, it will be apparent to one skilled in the art that the present teachings may be practiced without such details. In other instances, well-known methods, procedures, components, and circuits have been described at a relatively high level and without detail in order to avoid unnecessarily obscuring aspects of the present teachings.

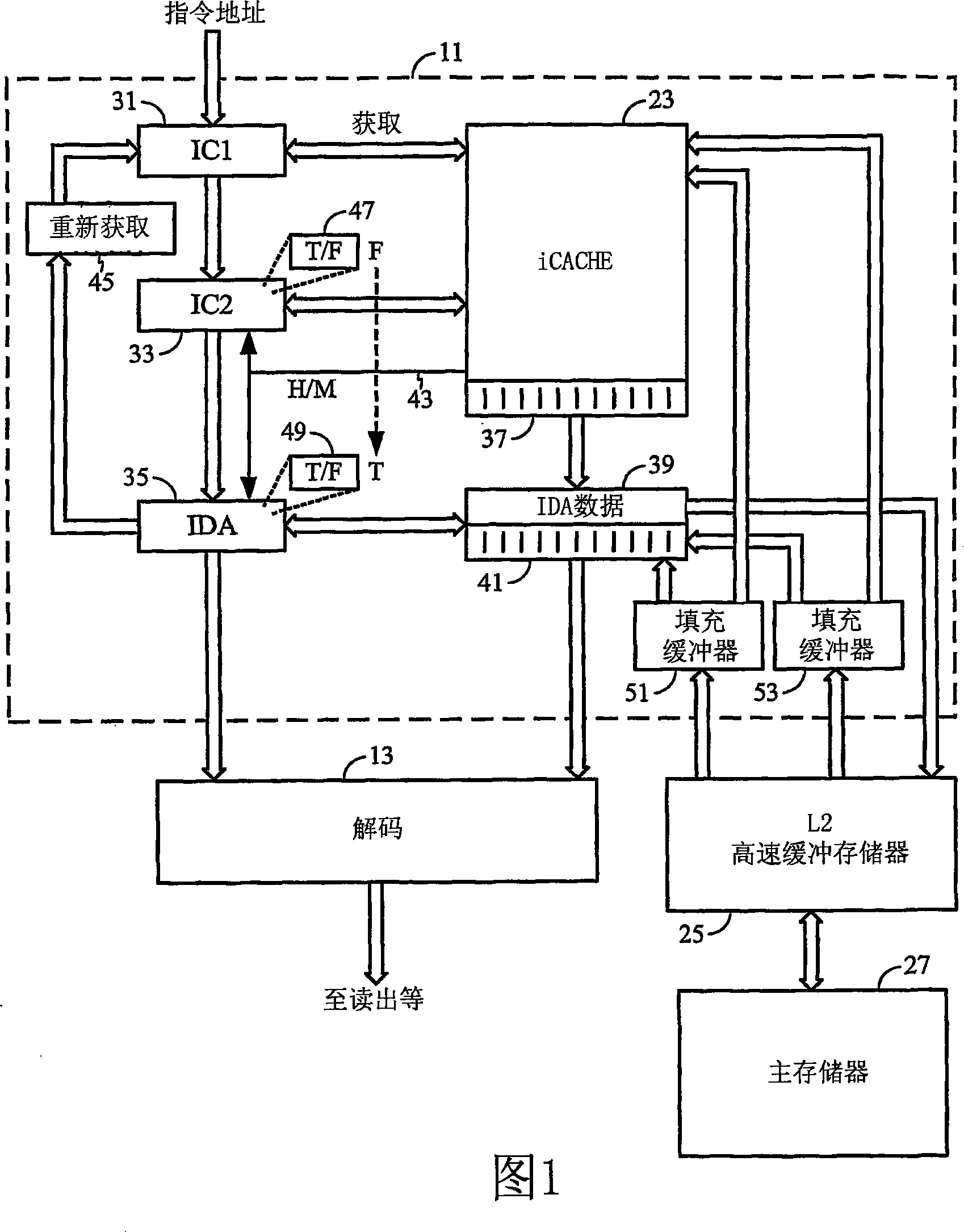

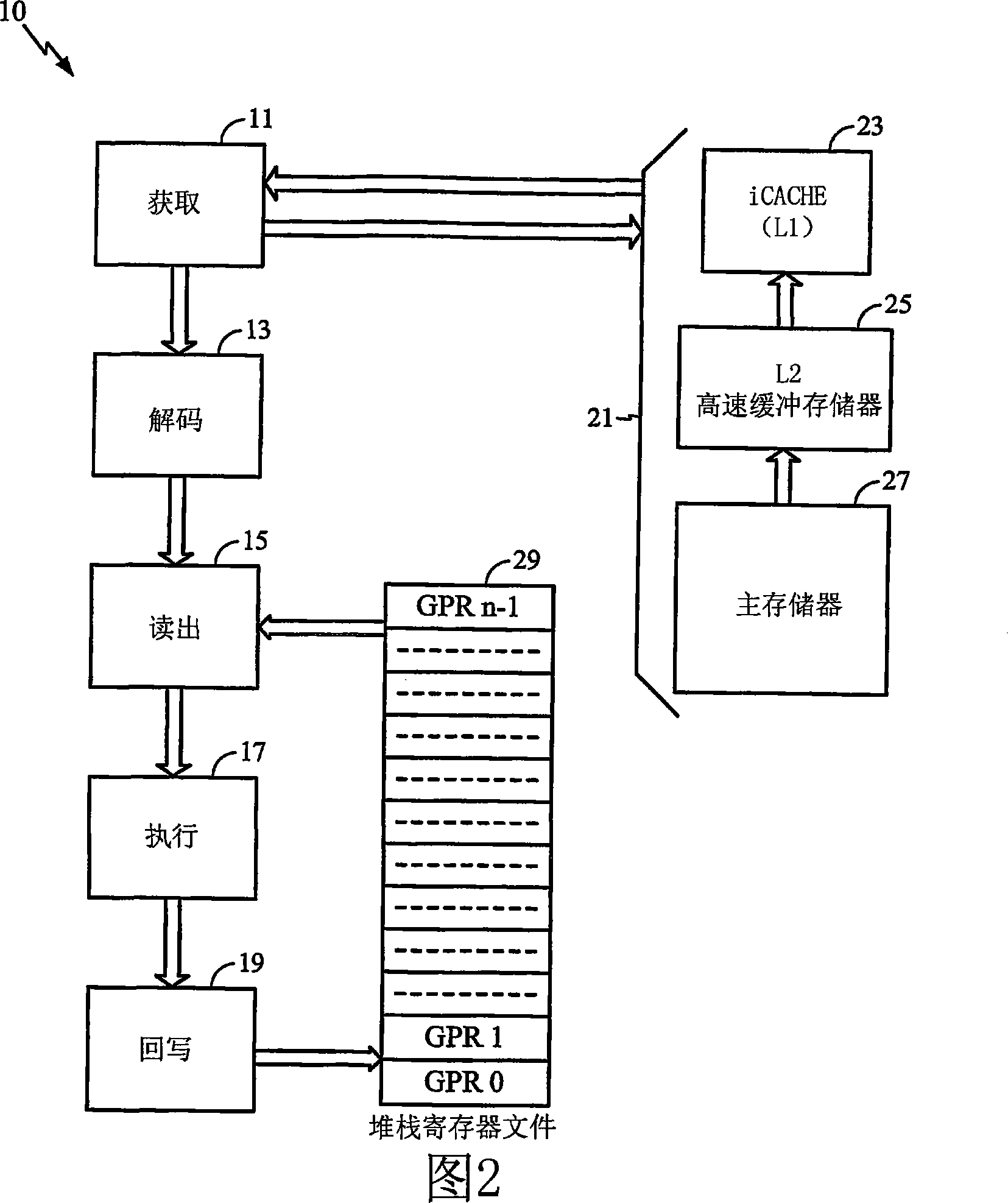

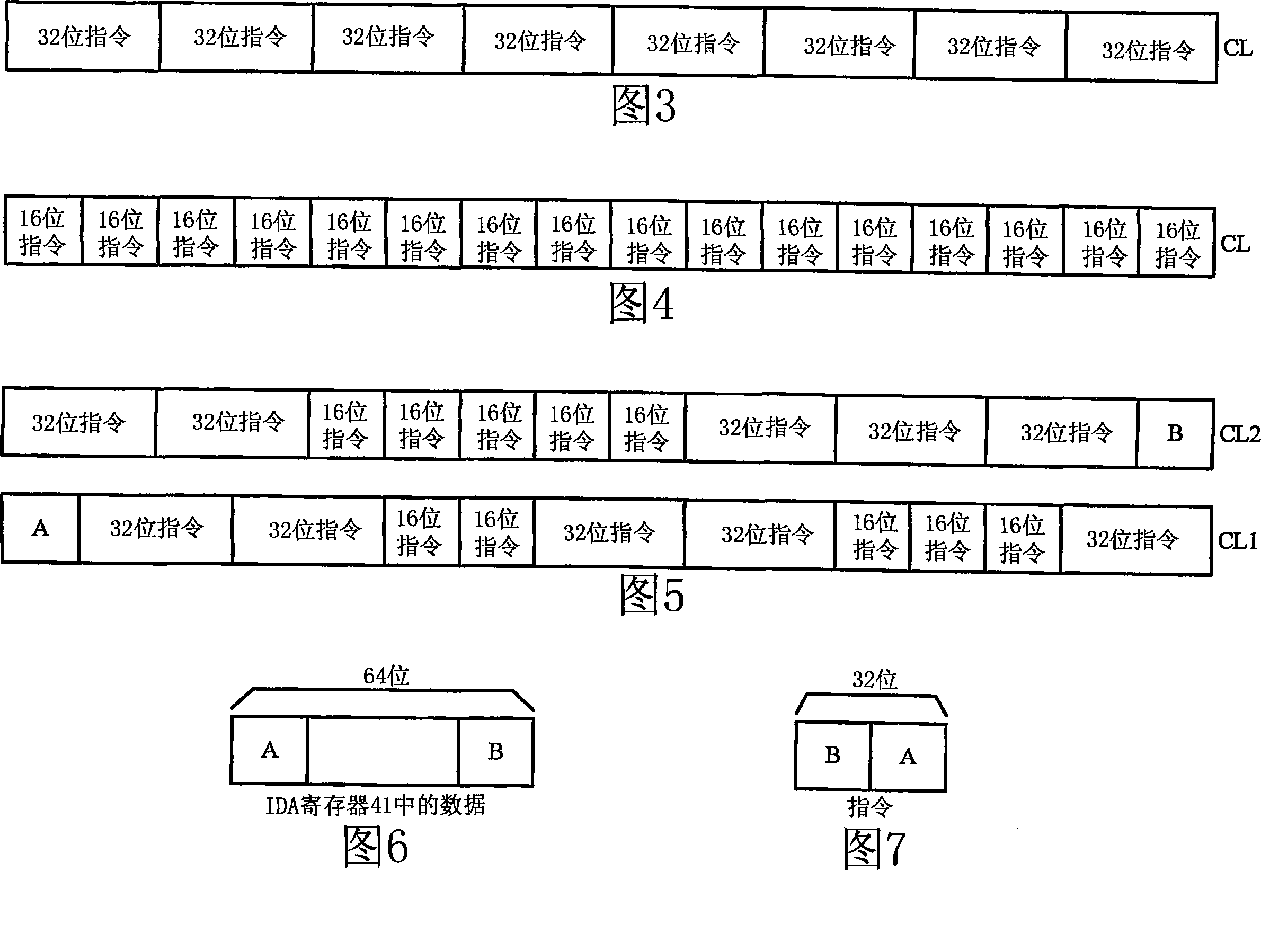

[0023] As discussed herein, examples of systems, or portions of a processor, intended to fetch instructions for the processor include an instruction cache and a plurality of processing stages. The fetch part itself is therefore usually formed as a pipeline of processing stages. Instructions are allowed to cross cache line boundaries. When the stage from which a request to higher-level memory is issued holds a first portion of an instruction that crosses a cache line boundary, the...
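Because an instruction may cross a cache line boundary, fetching it requires bytes from two cache lines, and a miss on either line means a request must go to higher-level memory. The minimal sketch below models only that address arithmetic. The 32-byte line size, byte addressing, the cache modeled as a set of resident line base addresses, and the helper names (`line_address`, `lines_for_instruction`, `missing_lines`) are all hypothetical assumptions for illustration. It is not the patent's implementation and does not model how the pipeline stages handle the miss.

```python
# Hypothetical parameters (assumptions, not from the patent):
# 32-byte cache lines and byte-addressed instructions.
LINE_SIZE = 32


def line_address(addr: int, line_size: int = LINE_SIZE) -> int:
    """Return the base address of the cache line containing addr."""
    return addr - (addr % line_size)


def lines_for_instruction(addr: int, length: int, line_size: int = LINE_SIZE) -> list[int]:
    """Return the base address(es) of the cache line(s) an instruction occupies.

    The instruction crosses a line boundary when its first and last bytes
    fall in different lines. Assumes length <= line_size, so at most two
    lines are involved.
    """
    if length <= 0 or length > line_size:
        raise ValueError("instruction length must be in 1..line_size")
    first = line_address(addr, line_size)
    last = line_address(addr + length - 1, line_size)
    return [first] if first == last else [first, last]


def missing_lines(addr: int, length: int, cached_lines: set[int],
                  line_size: int = LINE_SIZE) -> list[int]:
    """Return the lines of the instruction that are not resident in the
    (hypothetical) cache model and would have to be requested from
    higher-level memory."""
    return [line for line in lines_for_instruction(addr, length, line_size)
            if line not in cached_lines]


if __name__ == "__main__":
    # An 8-byte instruction at 0x1C spans bytes 0x1C..0x23: lines 0x00 and 0x20.
    print([hex(a) for a in lines_for_instruction(0x1C, 8)])
    # Only line 0x00 is resident, so the second portion misses.
    print([hex(a) for a in missing_lines(0x1C, 8, {0x00})])
```

In this toy model, the crossing case is the one where the first portion can be found in the cache while the second portion still has to be fetched from higher-level memory, which is the situation the paragraph above describes.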
