Motion estimation method and multi-view coding and decoding method and device based on motion estimation

A technique based on visual motion and motion vectors, applied to multi-view encoding and decoding methods and devices. It addresses problems such as low encoding and decoding efficiency and issues with motion vector bitstream transmission, with the effect of ensuring accuracy, reducing the bitstream transmission volume, and improving encoding efficiency.

Examples

Embodiment 1

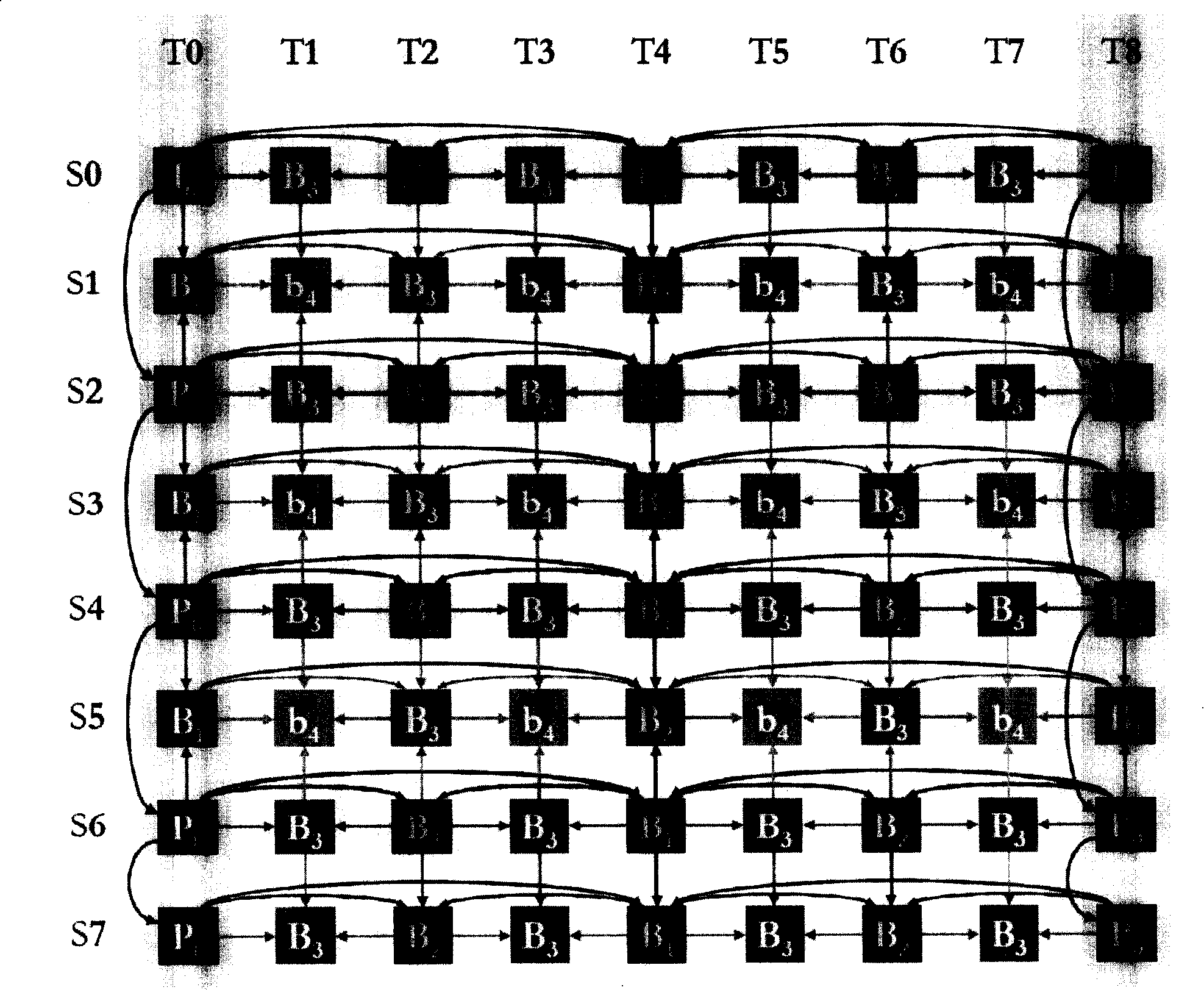

[0067] This embodiment describes the specific implementation of the motion estimation method of the present invention with reference to the accompanying drawings.

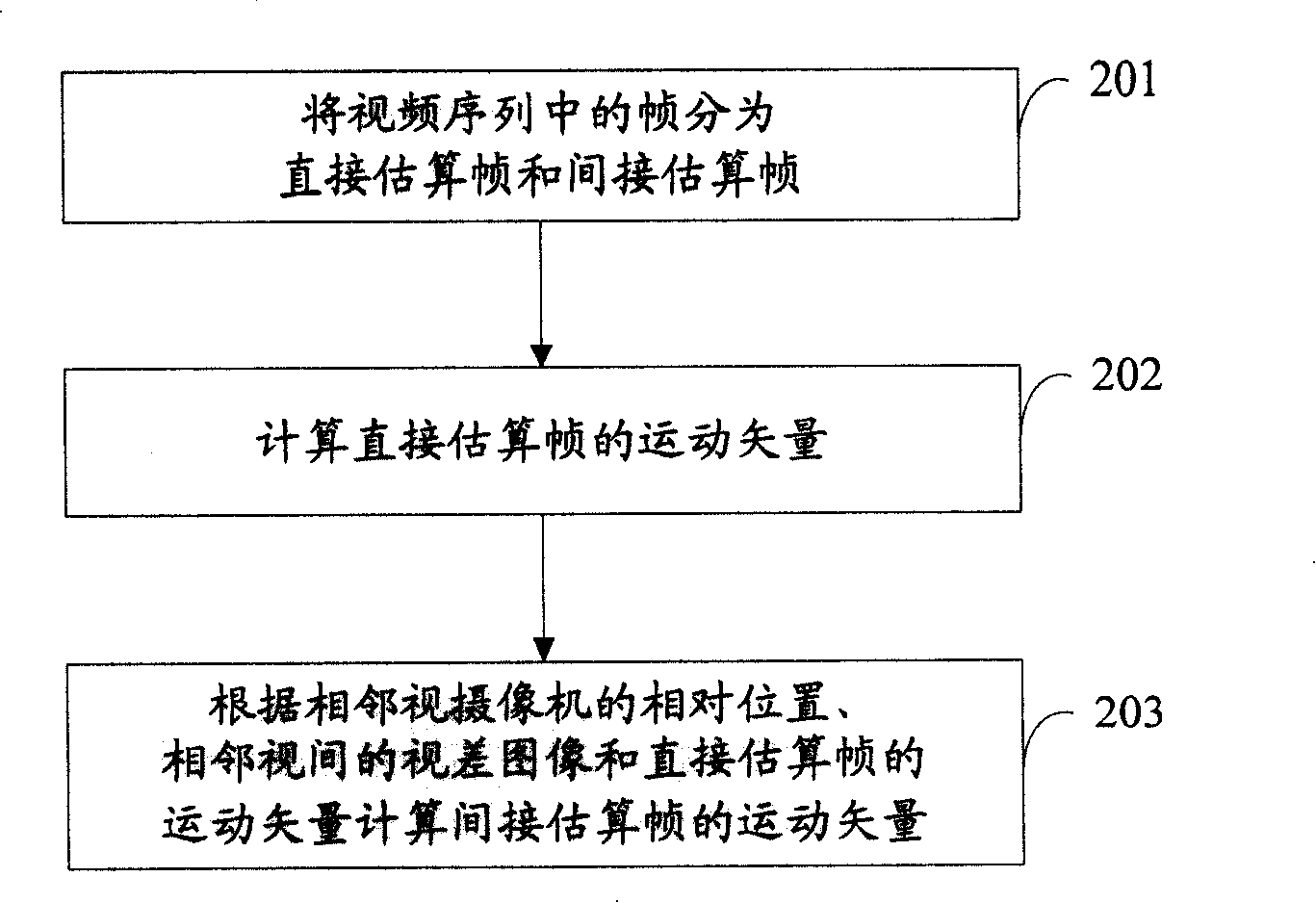

[0068] Figure 2 is a schematic flowchart of a multi-view motion estimation method according to an embodiment of the present invention. Referring to Figure 2, the method includes the following steps:

[0069] Step 201: Divide frames in a video sequence into direct estimation frames and indirect estimation frames.

[0070] In this step, the frames in the video sequence can be divided into direct estimation frames and indirect estimation frames according to the above definitions about direct estimation frames and indirect estimation frames.

[0071] Step 202: Calculate the motion vector of each direct estimation frame.

[0072] In this step, motion estimation can be performed on the direct estimation frame according to the traditional multi-view coding motion estimation algorithm introduced in the background art or ...

Embodiment 2

[0102] This embodiment describes the specific implementation of the motion estimation-based multi-view coding method of the present invention with reference to the accompanying drawings.

[0103] Figure 4 is a schematic flowchart of the multi-view coding method based on motion estimation in Embodiment 2 of the present invention. Referring to Figure 4, the method includes the following steps:

[0104] Step 401: Divide frames in a video sequence into direct estimation frames and indirect estimation frames.

[0105] Step 402: Calculate the motion vector of each direct estimation frame.

[0106] In this step, motion estimation can be performed on the direct estimation frame, using either the traditional multi-view coding motion estimation algorithm introduced in the background art or another prior-art motion estimation algorithm, to obtain its corresponding motion vector.
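The text leaves the choice of motion estimation algorithm open. As a minimal sketch of one widely used prior-art approach, full-search block matching with a sum-of-absolute-differences (SAD) cost can be written as below. This is an assumption for illustration only and is not necessarily the algorithm described in the background art; the names `sad` and `block_motion_vector`, the block size, and the search range are all hypothetical.

```python
from typing import List, Tuple

Frame = List[List[int]]  # grayscale frame as rows of pixel intensities


def sad(cur: Frame, ref: Frame, cy: int, cx: int,
        ry: int, rx: int, size: int) -> int:
    """Sum of absolute differences between a block in cur and one in ref."""
    return sum(
        abs(cur[cy + dy][cx + dx] - ref[ry + dy][rx + dx])
        for dy in range(size)
        for dx in range(size)
    )


def block_motion_vector(cur: Frame, ref: Frame, by: int, bx: int,
                        size: int = 4, search: int = 2) -> Tuple[int, int]:
    """Full-search block matching: return the (dy, dx) that minimises SAD.

    (by, bx) is the top-left corner of the block in the current frame.
    Both frames are assumed to have the same dimensions (illustrative
    assumption). Candidates falling outside the reference frame are skipped.
    """
    height, width = len(ref), len(ref[0])
    best_cost = sad(cur, ref, by, bx, by, bx, size)  # zero-motion candidate
    best_mv = (0, 0)
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            ry, rx = by + dy, bx + dx
            if ry < 0 or rx < 0 or ry + size > height or rx + size > width:
                continue
            cost = sad(cur, ref, by, bx, ry, rx, size)
            if cost < best_cost:
                best_cost, best_mv = cost, (dy, dx)
    return best_mv
```

Calling `block_motion_vector(cur, ref, by, bx)` for each block of a direct estimation frame would give one motion vector per block. An exhaustive search is the simplest variant to verify, and faster search patterns could be substituted without changing the interface.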

[0107] Step 403: Calculate the motion vector of the indirectly estimated frame according to the...

Embodiment 3

[0122] This embodiment describes the specific implementation of the motion estimation-based multi-view decoding method and device of the present invention with reference to the accompanying drawings.

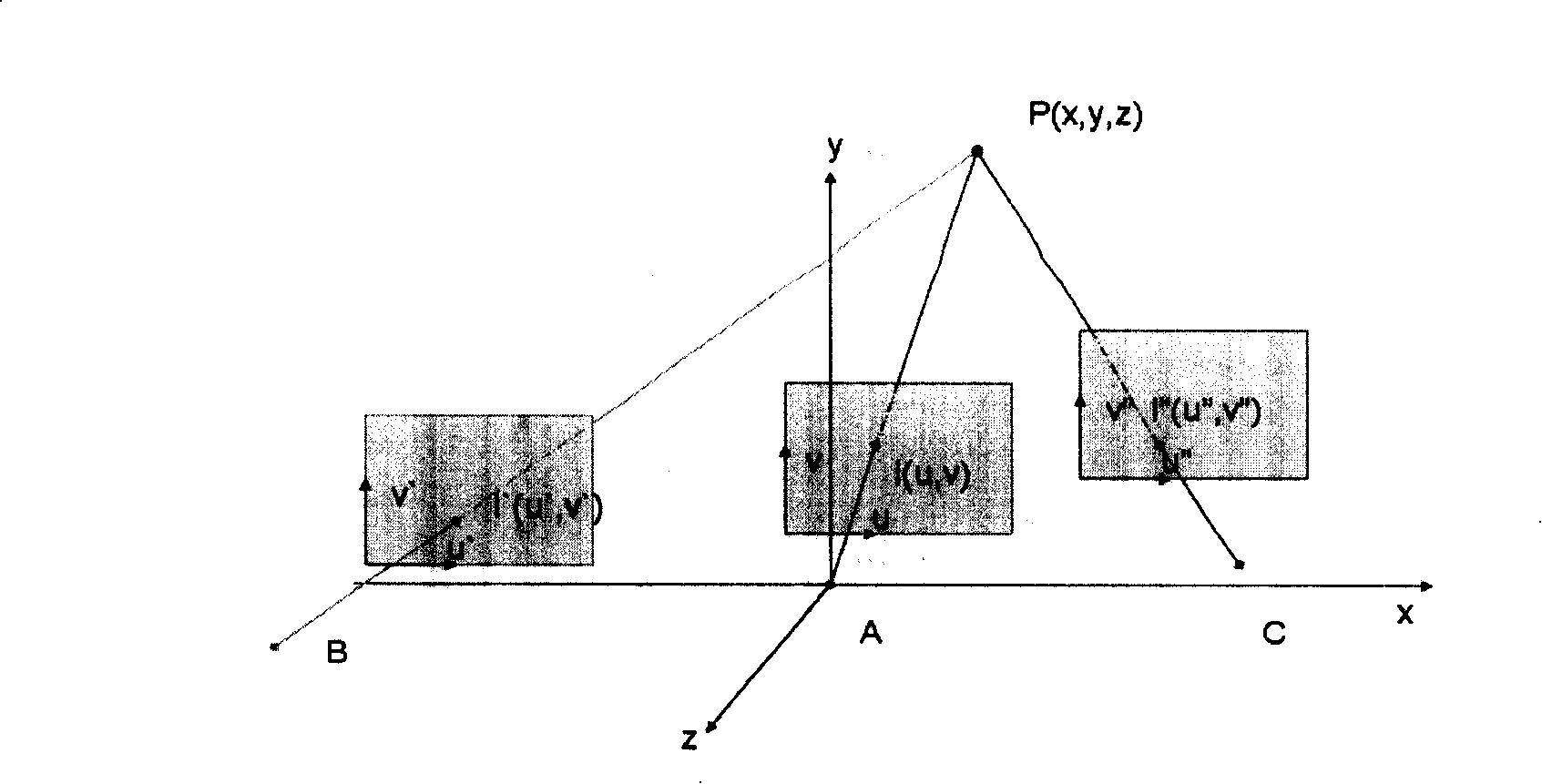

[0123] In this embodiment, as in Embodiment 1, S1 corresponds to the video sequence captured by camera A shown in Figure 3, S0 corresponds to the video sequence captured by camera B shown in Figure 3, and S2 corresponds to the video sequence captured by camera C shown in Figure 3. Therefore, the relative positional relationship between the cameras and the camera coordinates shown in Figure 3 also apply to this embodiment.

[0124] Figure 6 is a schematic flowchart of the multi-view decoding method based on motion estimation in Embodiment 3 of the present invention. Referring to Figure 6, the method includes the following steps:

[0125] Step 601: Divide frames in a video sequence into direct estimation frames and indirect estimation frames...
