Method and apparatus for scheduling memory

A memory scheduling method and apparatus, applied in the computer field, address problems such as redundant data in memory and repeated redundant operations, both of which reduce usable Cache space and processing speed. The method optimizes Cache scheduling and improves memory utilization and the speed at which data is processed.


Embodiment Construction

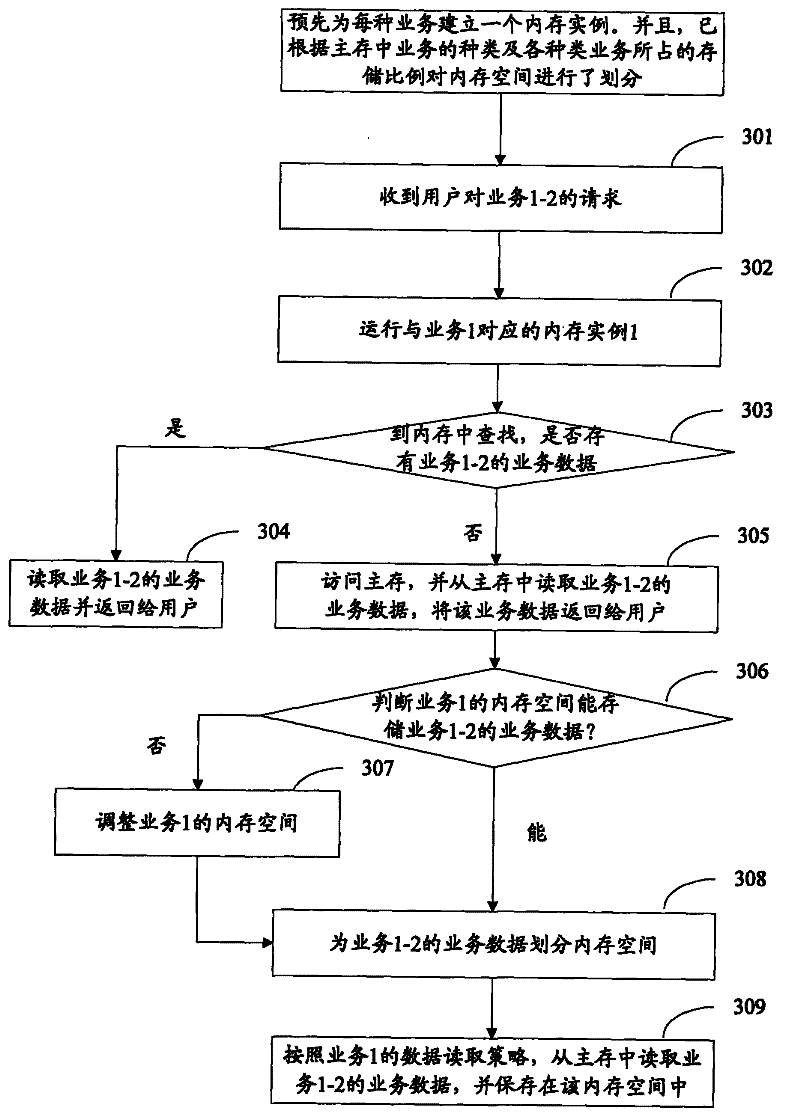

[0026] The embodiment of the present invention establishes a Cache instance for each service, so that different services can use different memory scheduling methods, in particular a memory scheduling strategy suited to each service. This improves memory utilization and data processing speed. The embodiment is mainly applicable to scheduling the data area of memory.

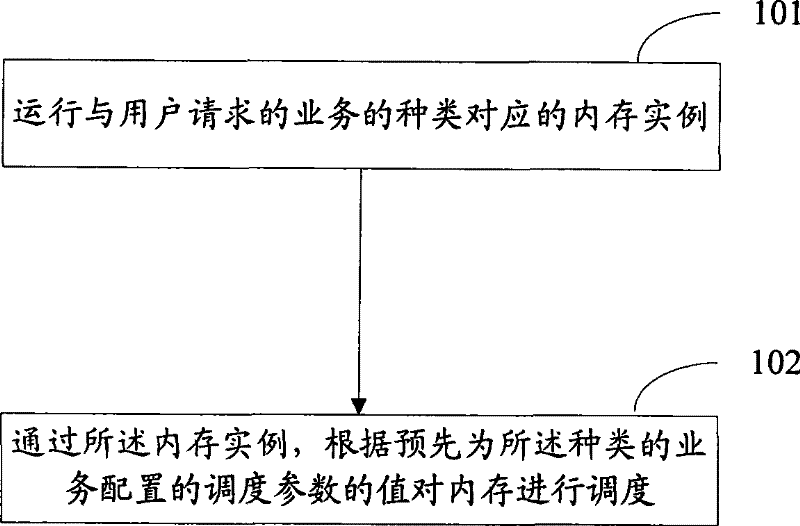

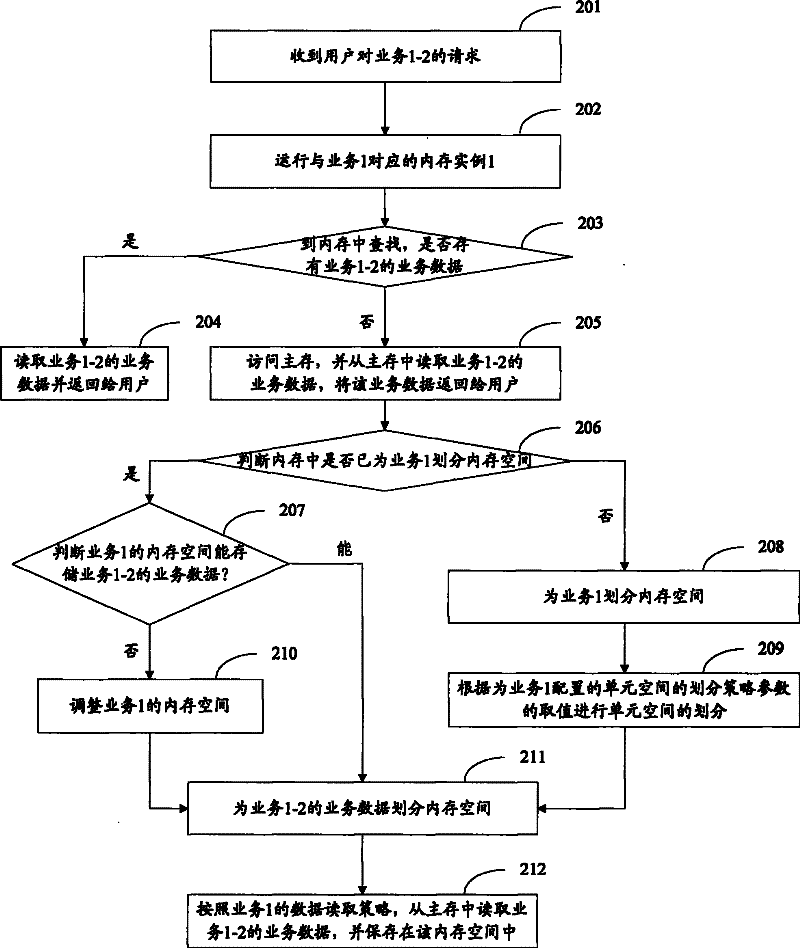

[0027] Referring to Figure 1, the main method flow of memory scheduling in this embodiment is as follows:

[0028] Step 101: Run the Cache instance corresponding to the service type requested by the user.

[0029] Step 102: Using the Cache instance, schedule the Cache according to the value of the scheduling parameter configured in advance for the type of service.
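Steps 101 and 102 can be illustrated with a minimal Python sketch. It is not the patent's implementation: the service names, the `SchedulingParams` fields (`unit_size`, `max_units`), the `cache_for` helper, and the use of least-recently-used eviction as the scheduling policy are all assumptions chosen for illustration.

```python
from collections import OrderedDict
from dataclasses import dataclass


@dataclass(frozen=True)
class SchedulingParams:
    """Hypothetical per-service scheduling parameters (field names are assumptions)."""
    unit_size: int   # maximum bytes stored in one unit space
    max_units: int   # number of unit spaces the instance may hold


# Assumed values, configured in advance for each service type.
PARAMS_BY_SERVICE = {
    "streaming": SchedulingParams(unit_size=64 * 1024, max_units=4),
    "download": SchedulingParams(unit_size=4 * 1024, max_units=2),
}


class CacheInstance:
    """One cache instance per service type; LRU eviction is a stand-in policy."""

    def __init__(self, params):
        self.params = params
        self._units = OrderedDict()  # key -> bytes, least recently used first

    def put(self, key, data):
        if len(data) > self.params.unit_size:
            raise ValueError("data does not fit in one unit space")
        self._units[key] = data
        self._units.move_to_end(key)  # mark as most recently used
        while len(self._units) > self.params.max_units:
            self._units.popitem(last=False)  # evict the least recently used unit

    def get(self, key):
        if key not in self._units:
            return None
        self._units.move_to_end(key)  # a read also counts as a use
        return self._units[key]


_instances = {}


def cache_for(service_type):
    """Step 101: return the instance for the requested service type, creating it if needed."""
    if service_type not in _instances:
        # Step 102: the instance schedules itself with the preconfigured parameters.
        _instances[service_type] = CacheInstance(PARAMS_BY_SERVICE[service_type])
    return _instances[service_type]
```

Because each service type gets its own instance and its own parameters, a service with large sequential reads and a service with small random reads do not compete under a single shared policy.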

[0030] In this embodiment, Cache scheduling includes a unit space division policy, a unit space scheduling policy, a unit space management policy, and a data reading p...
