Method and device for extracting characters

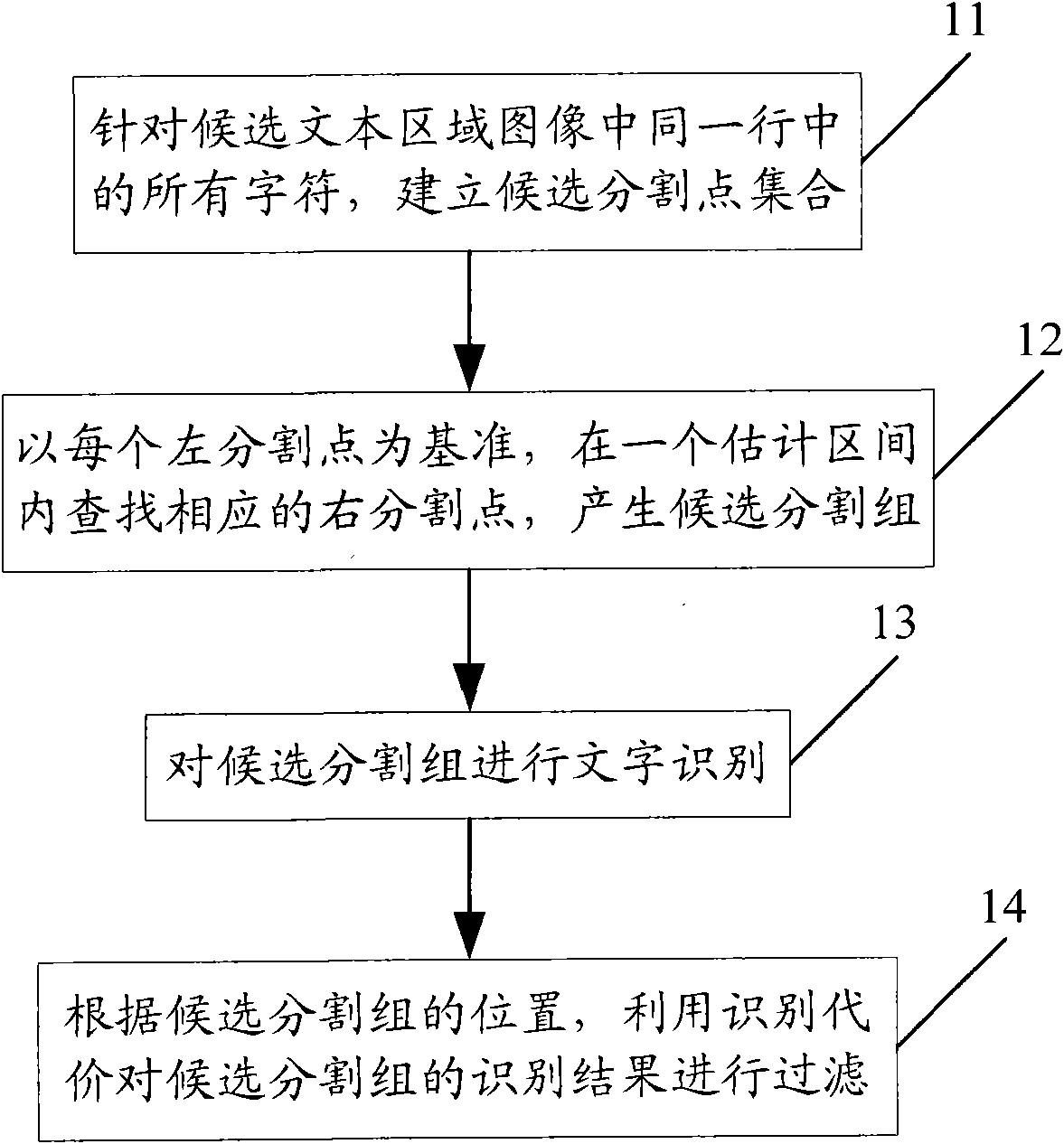

A character extraction method and device based on segmentation points. It addresses problems in existing approaches such as incomplete consideration, incorrect segmentation results, and the lack of candidate segmentation point screening, and it improves the recall rate while reducing the number of branches.

Embodiment Construction

[0022] Before character segmentation and recognition, the image needs to be preprocessed. An optional preprocessing procedure is:

[0023] First, binarize the received image to obtain a binary image. In this way, it is possible to describe the brightness changes near the character strokes without retaining too much background noise.
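The text does not say how the binarization threshold is chosen. As a minimal sketch, the example below assumes an 8-bit grayscale image stored as a list of rows, dark characters on a light background, and a global threshold picked by Otsu's method. The function names `otsu_threshold` and `binarize` are hypothetical and are not taken from the patent.

```python
def otsu_threshold(gray):
    """Return the gray level that maximizes between-class variance (Otsu's method).

    `gray` is a list of rows of integers in 0..255 (an assumed input format).
    """
    hist = [0] * 256
    for row in gray:
        for value in row:
            hist[value] += 1

    total = sum(hist)
    sum_all = sum(level * count for level, count in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels at or below the candidate threshold
    sum0 = 0    # sum of gray levels of those pixels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        if w0 == 0:
            continue
        w1 = total - w0
        if w1 == 0:
            break
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        between_var = w0 * w1 * (mean0 - mean1) ** 2
        if between_var > best_var:
            best_t, best_var = t, between_var
    return best_t


def binarize(gray):
    """Map pixels at or below the Otsu threshold to 1 (stroke) and the rest to 0."""
    t = otsu_threshold(gray)
    return [[1 if value <= t else 0 for value in row] for row in gray]


if __name__ == "__main__":
    sample = [
        [200, 200, 200],
        [200, 30, 200],
        [200, 40, 200],
    ]
    for row in binarize(sample):
        print(row)
```

A local (adaptive) threshold may suit unevenly lit images better; the global threshold is used here only to keep the sketch short.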

[0024] Then, the connected domains in the binary image are labeled. After labeling, information such as the position, size, and pixel count of each connected domain in the binary image can be obtained.
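The labeling step can be sketched as a breadth-first flood fill that records a bounding box and pixel count for each connected domain. This is an illustration under assumptions: foreground pixels are 1, 8-connectivity is used, and the function name `label_components` and the dictionary keys are hypothetical. The patent text does not specify the connectivity or the data layout.

```python
from collections import deque


def label_components(binary):
    """Label 8-connected foreground regions and collect per-region statistics.

    Returns (labels, components): `labels` has the same shape as `binary`,
    with 0 for background and 1..n for regions; `components` is a list of
    dicts holding the bounding box, size, and pixel count of each region.
    """
    height = len(binary)
    width = len(binary[0]) if height else 0
    labels = [[0] * width for _ in range(height)]
    components = []

    for y in range(height):
        for x in range(width):
            if binary[y][x] != 1 or labels[y][x] != 0:
                continue
            label = len(components) + 1
            labels[y][x] = label
            queue = deque([(y, x)])
            top, left, bottom, right = y, x, y, x
            pixels = 0
            while queue:
                cy, cx = queue.popleft()
                pixels += 1
                top, bottom = min(top, cy), max(bottom, cy)
                left, right = min(left, cx), max(right, cx)
                # Visit the 8 neighbours (the centre is already labeled).
                for dy in (-1, 0, 1):
                    for dx in (-1, 0, 1):
                        ny, nx = cy + dy, cx + dx
                        if (0 <= ny < height and 0 <= nx < width
                                and binary[ny][nx] == 1
                                and labels[ny][nx] == 0):
                            labels[ny][nx] = label
                            queue.append((ny, nx))
            components.append({
                "label": label,
                "left": left, "top": top, "right": right, "bottom": bottom,
                "width": right - left + 1,
                "height": bottom - top + 1,
                "pixels": pixels,
            })
    return labels, components


if __name__ == "__main__":
    image = [
        [1, 1, 0, 0],
        [0, 1, 0, 1],
        [0, 0, 0, 1],
    ]
    _, comps = label_components(image)
    for comp in comps:
        print(comp)
```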

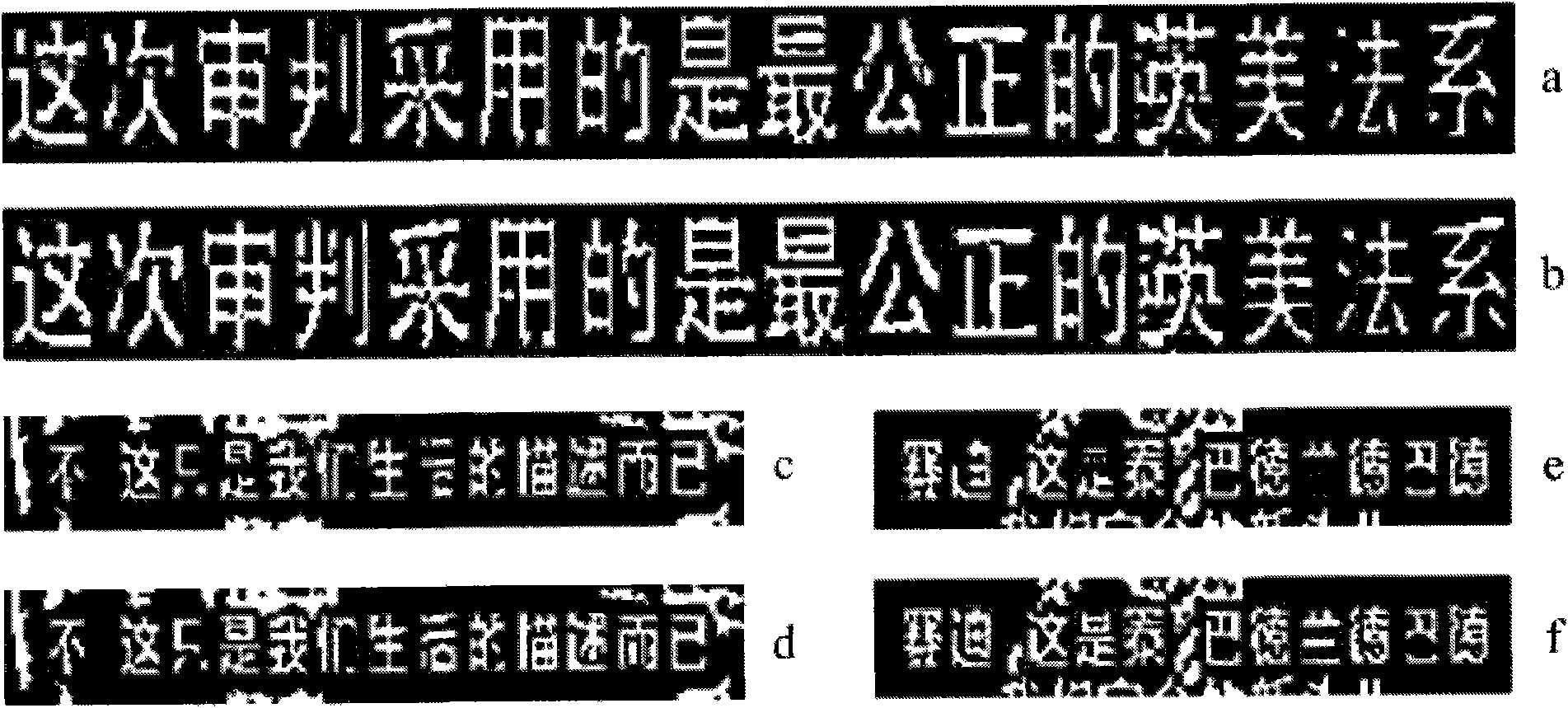

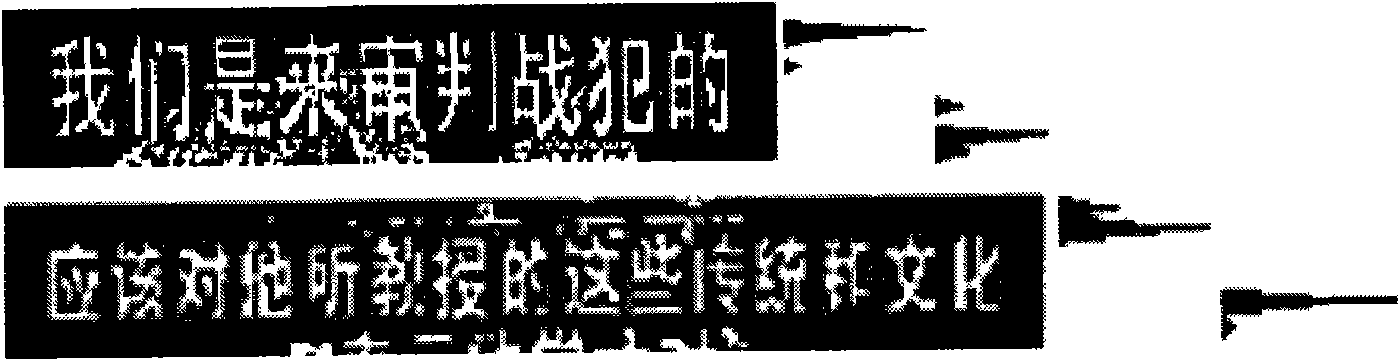

[0025] Then, the connected domains are merged according to their sizes and positional relationships to form complete connected domains that approximate character features, as shown in Figure 1.
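The exact merging rule is not given in the text available here. The sketch below shows one plausible form of it, purely as an assumption: two bounding boxes are merged when the gap between them is at most `max_gap` pixels on both axes and the merged box stays within `max_size` pixels in width and height. The names `should_merge`, `merge_boxes`, `max_gap`, and `max_size` are hypothetical.

```python
def union(a, b):
    """Smallest box covering both a and b; boxes are (left, top, right, bottom), inclusive."""
    return (min(a[0], b[0]), min(a[1], b[1]), max(a[2], b[2]), max(a[3], b[3]))


def should_merge(a, b, max_gap, max_size):
    """Assumed rule: the boxes are near each other and the merged box is not too large."""
    # Number of empty columns/rows between the boxes (negative when they overlap).
    gap_x = max(a[0], b[0]) - min(a[2], b[2]) - 1
    gap_y = max(a[1], b[1]) - min(a[3], b[3]) - 1
    if gap_x > max_gap or gap_y > max_gap:
        return False
    merged = union(a, b)
    merged_width = merged[2] - merged[0] + 1
    merged_height = merged[3] - merged[1] + 1
    return merged_width <= max_size and merged_height <= max_size


def merge_boxes(boxes, max_gap=1, max_size=10):
    """Repeatedly merge qualifying boxes until no further merge is possible."""
    merged = list(boxes)
    changed = True
    while changed:
        changed = False
        result = []
        for box in merged:
            for i, other in enumerate(result):
                if should_merge(box, other, max_gap, max_size):
                    result[i] = union(box, other)
                    changed = True
                    break
            else:
                result.append(box)
        merged = result
    return merged


if __name__ == "__main__":
    strokes = [(0, 0, 2, 5), (4, 0, 6, 5), (20, 0, 23, 5)]
    print(merge_boxes(strokes))
```

In practice `max_gap` and `max_size` would likely be derived from statistics of the connected domains, such as a typical character height, rather than fixed constants.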

[0026] The binary image of a text area contains a large number of characters, so statistical methods are well suited to estimating character characteristics. However, each character is composed of multiple scattered strokes. If the connected domains...
