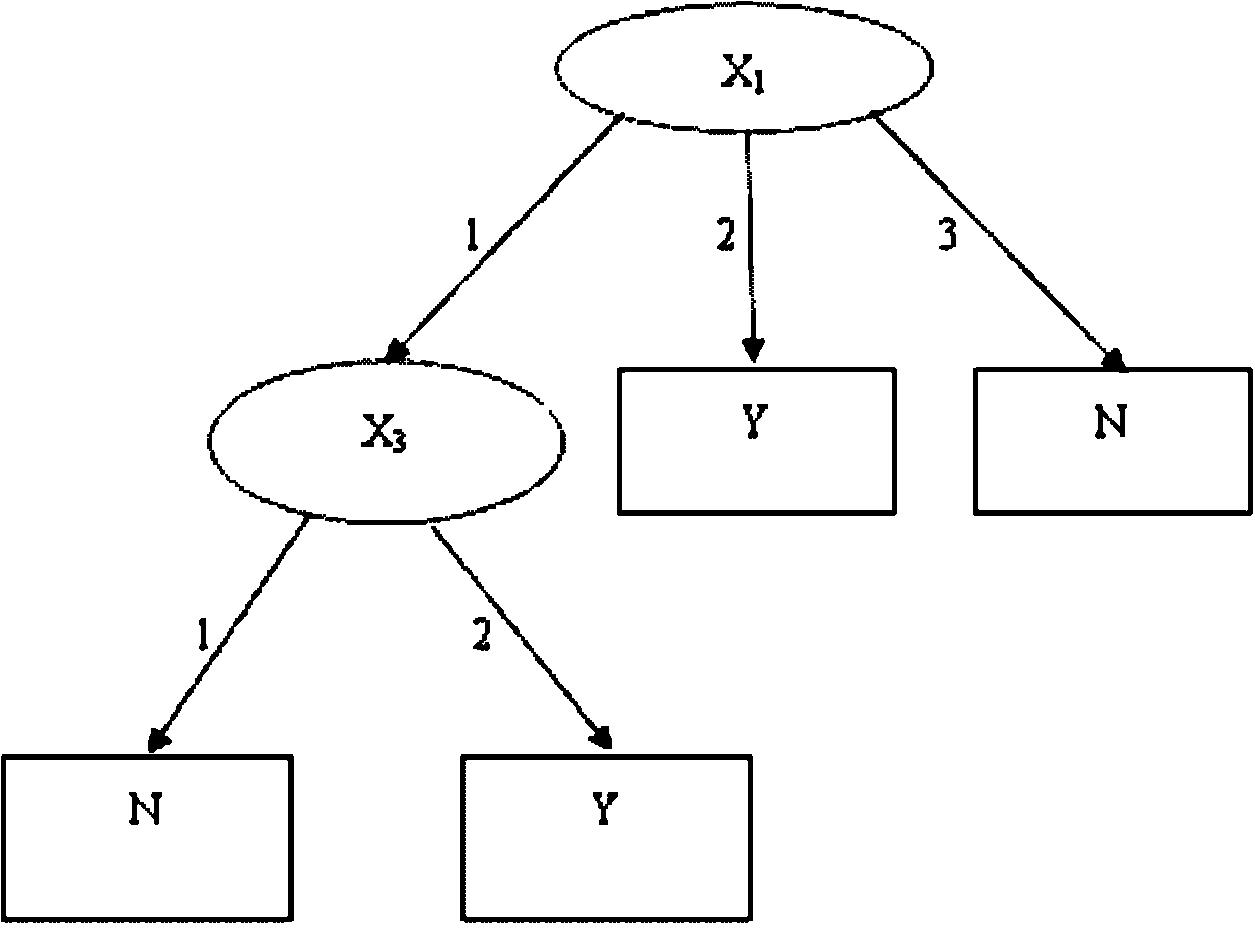

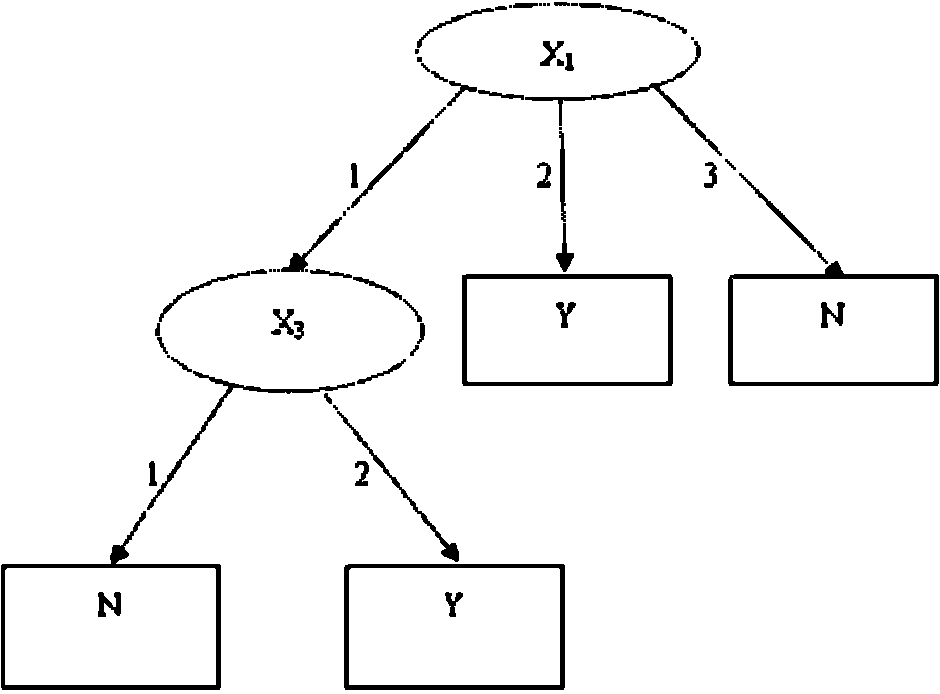

Probability rough set based decision tree generation method

A decision tree generation method based on probabilistic rough sets, applicable to special data processing applications, instruments, electrical digital data processing, and similar fields, intended to address the problem of noise in data.

Examples

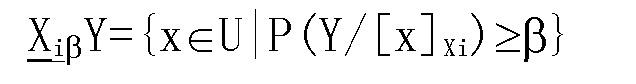

[0126] 1. Calculate the degree of dependence between each condition attribute and the decision attribute, denoted r(X, Y).

[0127] The equivalence classes for each attribute are as follows:

[0128] U/X1 = {{1, 2, 3, 13, 14, 15, 16, 19, 20, 25},

[0129] {4, 5, 11, 12, 21, 22, 23}, {6, 7, 8, 9, 10, 17, 18, 24}}

[0130] U/X2 = {{1, 2, 3, 4, 5, 8, 10, 23, 25},

[0131] {6, 7, 13, 14, 17, 18, 19, 20, 21, 22, 24}, {9, 11, 12, 15, 16}}

[0132] U/X3 =

[0133] {{1, 2, 3, 4, 5, 6, 7, 13, 14, 21, 22, 24, 25}, {8, 9, 10, 11, 12, 15, 16, 17, 18, 19, 20, 23}}

[0134] U/X4 = {{1, 4, 6, 8, 13, 15, 17, 23, 25},

[0135] {3, 5, 7, 9, 12, 14, 16, 18, 19, 22}, {2, 10, 11, 20, 21, 24}}

[0136] U/Y =

[0137] {{1, 2, 3, 6, 7, 8, 9, 10, 13, 14, 17, 18, 24, 25}, {4, 5, 11, 12, 15, 16, 19, 20, 21, 22, 23}}

[0138] r(X1, Y) = 0.6

[0139] r(X2, Y) = 0

[0140] r(X3, Y) = 0

[0141] r(X4, Y) = 0
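The dependence values above can be checked with a short sketch. The formula for r(X, Y) is not reproduced in this text, so the code assumes the classical rough set dependency, r(X, Y) = |POS_X(Y)| / |U|, where the positive region POS_X(Y) is the union of the equivalence classes of X that lie entirely inside a single class of Y. The names `partitions`, `decision`, and `dependency` are illustrative only and are not taken from the patent. Under this assumption, the partitions listed in the example give 0.6 for X1 and 0 for X2, X3, and X4.

```python
# Universe of 25 objects, numbered as in the example.
U = set(range(1, 26))

# Equivalence classes copied from the example (U/X1 ... U/X4).
partitions = {
    "X1": [{1, 2, 3, 13, 14, 15, 16, 19, 20, 25},
           {4, 5, 11, 12, 21, 22, 23},
           {6, 7, 8, 9, 10, 17, 18, 24}],
    "X2": [{1, 2, 3, 4, 5, 8, 10, 23, 25},
           {6, 7, 13, 14, 17, 18, 19, 20, 21, 22, 24},
           {9, 11, 12, 15, 16}],
    "X3": [{1, 2, 3, 4, 5, 6, 7, 13, 14, 21, 22, 24, 25},
           {8, 9, 10, 11, 12, 15, 16, 17, 18, 19, 20, 23}],
    "X4": [{1, 4, 6, 8, 13, 15, 17, 23, 25},
           {3, 5, 7, 9, 12, 14, 16, 18, 19, 22},
           {2, 10, 11, 20, 21, 24}],
}

# Equivalence classes of the decision attribute (U/Y).
decision = [
    {1, 2, 3, 6, 7, 8, 9, 10, 13, 14, 17, 18, 24, 25},
    {4, 5, 11, 12, 15, 16, 19, 20, 21, 22, 23},
]


def dependency(cond_classes, dec_classes, universe):
    """Assumed classical dependency: |POS_X(Y)| / |U|."""
    positive = set()
    for block in cond_classes:
        # A condition class belongs to the positive region only if it
        # fits entirely inside one decision class.
        if any(block <= dec for dec in dec_classes):
            positive |= block
    return len(positive) / len(universe)


for name, classes in partitions.items():
    print(name, dependency(classes, decision, U))
```

For X1, the classes {4, 5, 11, 12, 21, 22, 23} and {6, 7, 8, 9, 10, 17, 18, 24} each fall inside one decision class, so the positive region has 15 of the 25 objects. Every class of the other attributes mixes objects from both decision classes, which is why their dependence is 0. A probabilistic variant would relax the strict subset test to an inclusion threshold; that threshold is not given in this excerpt, so it is not modeled here.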

[0142] 2. Perform relative attribute reduction...
