Video consistent fusion processing method

A video-consistent fusion processing method, applied in the fields of television, color television, and color television components, that achieves simple user interaction and improved efficiency

Embodiment Construction

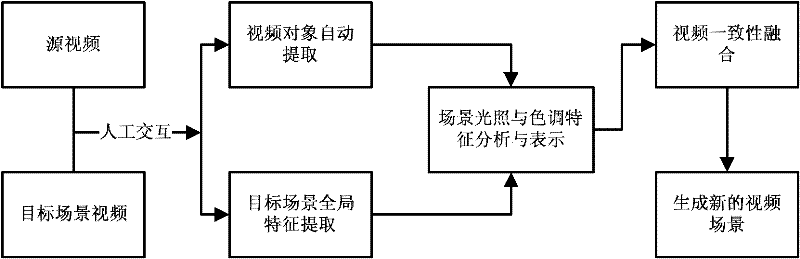

[0030] Each part is described in detail below according to the flowchart of the present invention:

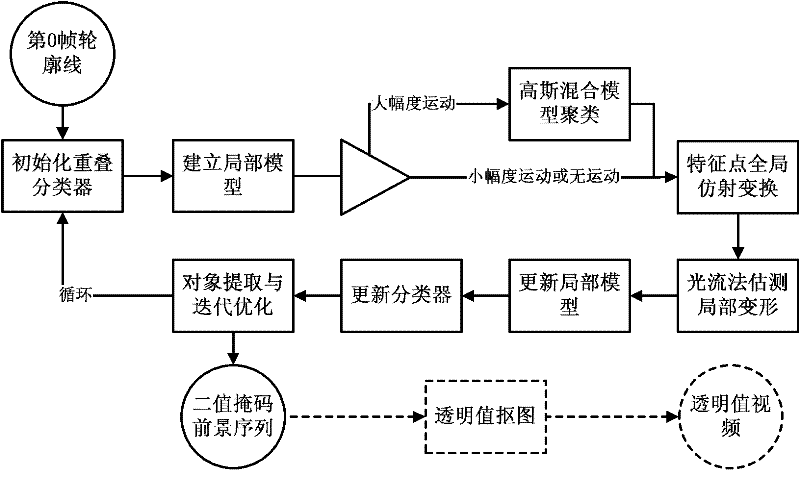

[0031] 1. Interactive selection and automatic extraction of video objects

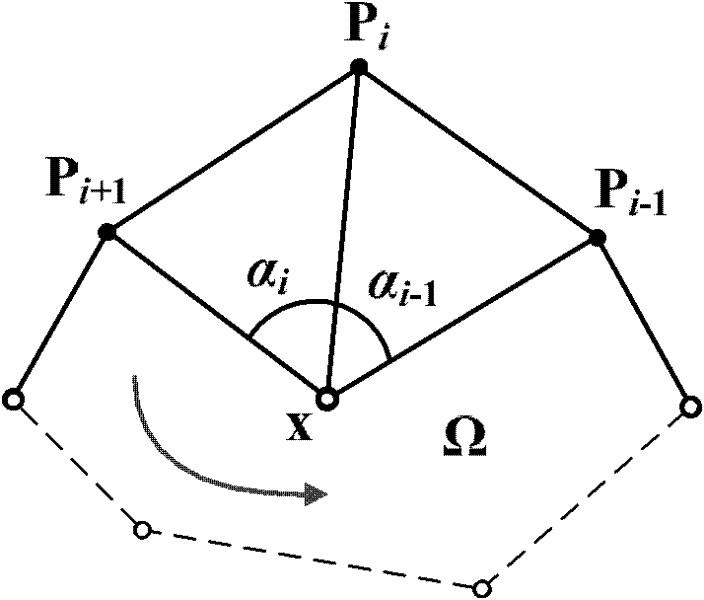

[0032] To improve the accuracy of video object extraction in complex scenes, multiple features must be considered together to guide the extraction: (1) Foreground extraction should combine several features, such as color, texture, shape, and motion. Among these, shape, as an important cue for object recognition, plays a greater role in object extraction. (2) These features should be evaluated both locally and globally to improve extraction accuracy. During video object extraction, the system first extracts the foreground contours of the key frames through manual interaction, and then automatically generates the foreground contours of the remaining frames by forward propagation from the key frames. Th...
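The key-frame scheme described above (contours supplied by the user on key frames, then carried forward to the remaining frames) can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and is not taken from the patent: the functions `propagate_forward` and `shift_step`, the representation of a frame as a one-dimensional list of intensities, and the representation of a contour as a half-open interval. In particular, `shift_step` matches on color only, whereas the text calls for combining color, texture, shape, and motion at both local and global scales.

```python
from typing import Callable, Dict, List, Optional, Tuple

Frame = List[int]            # toy frame: a 1-D row of pixel intensities (assumption)
Contour = Tuple[int, int]    # toy contour: half-open interval [start, end) (assumption)


def shift_step(prev_frame: Frame, prev_contour: Contour,
               cur_frame: Frame, max_shift: int = 2) -> Contour:
    """Hypothetical per-frame step: slide the previous object region over the
    current frame and keep the shift with the smallest color difference."""
    start, end = prev_contour
    patch = prev_frame[start:end]
    best_shift, best_cost = 0, None
    for shift in range(-max_shift, max_shift + 1):
        s, e = start + shift, end + shift
        if s < 0 or e > len(cur_frame):
            continue  # candidate region would fall outside the frame
        cost = sum(abs(a - b) for a, b in zip(patch, cur_frame[s:e]))
        if best_cost is None or cost < best_cost:
            best_shift, best_cost = shift, cost
    return (start + best_shift, end + best_shift)


def propagate_forward(
    frames: List[Frame],
    key_contours: Dict[int, Contour],
    step: Callable[[Frame, Contour, Frame], Contour] = shift_step,
) -> List[Optional[Contour]]:
    """Use the user-drawn contour on key frames; derive every other frame's
    contour from the frame immediately before it."""
    contours: List[Optional[Contour]] = [None] * len(frames)
    for i, frame in enumerate(frames):
        if i in key_contours:
            # Key frame: the interactively drawn contour wins and resets drift.
            contours[i] = key_contours[i]
        elif i > 0 and contours[i - 1] is not None:
            contours[i] = step(frames[i - 1], contours[i - 1], frame)
        # Frames before the first key frame stay None in this forward-only sketch.
    return contours


# A bright two-pixel object moving one pixel to the right per frame.
frames = [
    [0, 9, 9, 0, 0, 0, 0, 0],
    [0, 0, 9, 9, 0, 0, 0, 0],
    [0, 0, 0, 9, 9, 0, 0, 0],
    [0, 0, 0, 0, 9, 9, 0, 0],
    [0, 0, 0, 0, 0, 9, 9, 0],
]
result = propagate_forward(frames, {0: (1, 3), 3: (4, 6)})
print(result)
```

Passing the per-frame step as a parameter keeps the propagation loop independent of the feature model, so a richer matcher (for example one that also scores shape or motion) could be substituted without changing `propagate_forward`.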
