Distributed decision tree training

A decision tree and distributed control technology, applied in the computer field, that addresses problems such as the difficulty, impracticality, or impossibility of obtaining decision trees with improved classification capability.

Embodiment Construction

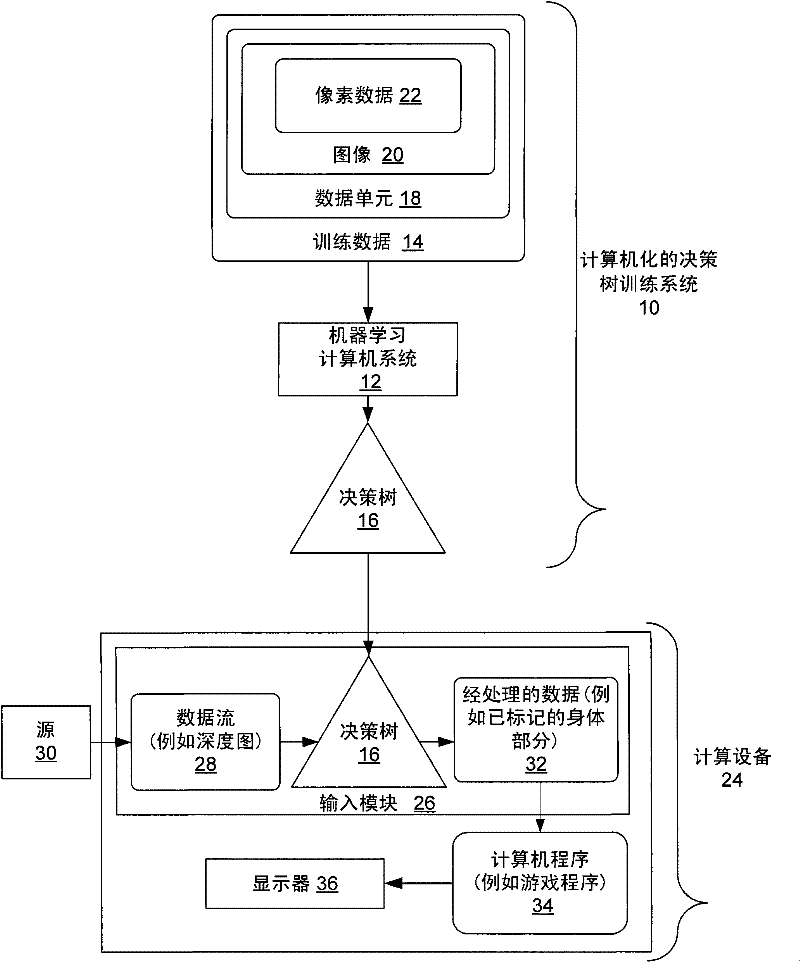

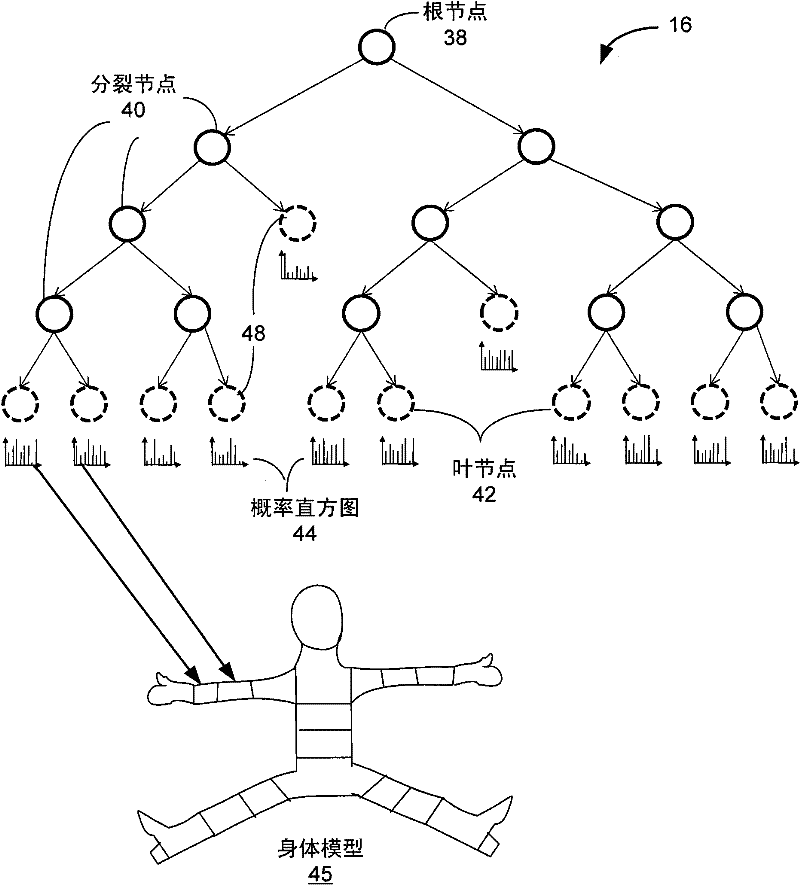

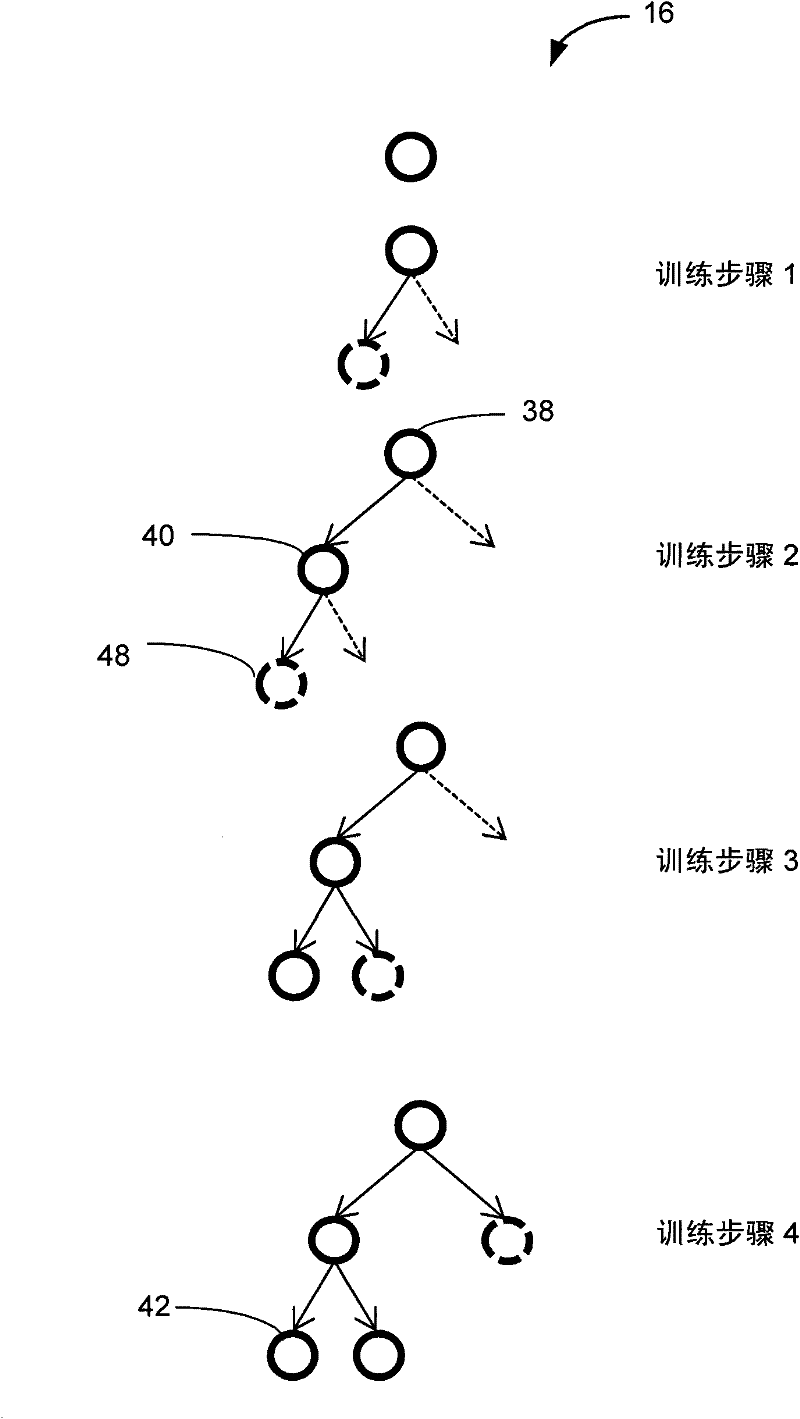

[0013] Referring to FIG. 1, a computerized decision tree training system 10 is shown. Computerized decision tree training system 10 includes a machine learning computer system 12 configured to receive training data 14, process training data 14, and output a trained decision tree 16. Training data 14 includes numerous examples, each of which has been classified into one of a predetermined number of classes. Decision tree 16 may be trained on training data 14 according to the procedure described below.
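The training procedure referred to in [0013] is not included in this excerpt, so the following is only a generic stand-in: a minimal, single-machine sketch of greedy decision tree training on classified examples, using entropy-based information gain over threshold splits (an ID3/CART-style technique). It assumes each example has already been reduced to a numeric feature vector with an integer class label; the names `Node`, `train`, `best_split`, `classify`, and `max_depth` are hypothetical and are not taken from the patent, and nothing here reflects its distributed procedure.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import List, Optional, Sequence, Tuple

# Hypothetical tree node: leaves carry a class prediction, split nodes carry (feature, threshold).
@dataclass
class Node:
    feature: Optional[int] = None
    threshold: Optional[float] = None
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    prediction: Optional[int] = None

def entropy(labels: Sequence[int]) -> float:
    """Shannon entropy (bits) of a non-empty list of class labels."""
    n = len(labels)
    return -sum((c / n) * math.log2(c / n) for c in Counter(labels).values())

def best_split(X: List[List[float]], y: List[int]) -> Optional[Tuple[int, float, float]]:
    """Return (feature, threshold, gain) with the highest information gain, or None."""
    base, n, best = entropy(y), len(y), None
    for f in range(len(X[0])):
        for t in sorted(set(row[f] for row in X)):
            left = [lab for row, lab in zip(X, y) if row[f] < t]
            right = [lab for row, lab in zip(X, y) if row[f] >= t]
            if not left or not right:
                continue  # a split must send examples to both children
            gain = base - (len(left) / n) * entropy(left) - (len(right) / n) * entropy(right)
            if best is None or gain > best[2]:
                best = (f, t, gain)
    return best

def train(X: List[List[float]], y: List[int], depth: int = 0, max_depth: int = 5) -> Node:
    """Greedily grow a tree; stop at max_depth, at pure nodes, or when no split helps."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return Node(prediction=majority)
    split = best_split(X, y)
    if split is None or split[2] <= 0:
        return Node(prediction=majority)
    f, t, _ = split
    li = [i for i, row in enumerate(X) if row[f] < t]
    ri = [i for i, row in enumerate(X) if row[f] >= t]
    return Node(
        feature=f,
        threshold=t,
        left=train([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth),
        right=train([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth),
    )

def classify(node: Node, x: List[float]) -> int:
    """Walk from the root to a leaf and return its class."""
    while node.prediction is None:
        node = node.left if x[node.feature] < node.threshold else node.right
    return node.prediction
```

For example, `train([[0.0], [1.0], [2.0], [3.0]], [0, 0, 1, 1])` yields a single split at threshold 2.0. Greedy splitting of this kind picks the locally best test at each node, which keeps training simple but cannot find splits that only pay off in combination (an XOR-style labeling stops at the root).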

[0014] Training data 14 may include various data types and is generally organized into data units 18. In one particular example, a data unit 18 may contain an image 20 or image region, which in turn includes pixel data 22. Alternatively or additionally, data unit 18 may comprise audio data, video sequences, 3D medical scans, or other data.
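The data organization in [0014] can be sketched as a small data model. This is an illustrative assumption, not the patent's format: the classes `Image` and `DataUnit`, the row-major `pixels` layout, the integer `label`, and the `validate` helper are all hypothetical, and the non-image payloads mentioned above (audio, video, 3D scans) are left as a comment.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Image:
    """Hypothetical stand-in for image 20 (or an image region); pixel data 22 as rows of values."""
    pixels: List[List[float]]

    def pixel(self, x: int, y: int) -> float:
        # Row-major layout assumed: pixels[y][x].
        return self.pixels[y][x]

@dataclass
class DataUnit:
    """Hypothetical stand-in for data unit 18: one classified training example."""
    label: int                     # index into the predetermined set of classes
    image: Optional[Image] = None  # audio, video, or 3D scan payloads could be added as fields

def validate(units: List[DataUnit], num_classes: int) -> None:
    """Raise ValueError if any unit's label falls outside the predetermined classes."""
    for u in units:
        if not 0 <= u.label < num_classes:
            raise ValueError(f"label {u.label} outside 0..{num_classes - 1}")
```

Keeping the class label on the data unit itself, rather than in a parallel list, makes it harder for examples and labels to drift out of alignment when training data is partitioned or shuffled.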

[0015] After decision tree 16 has been trained by computerized decision tree training system 10, decision tree 16 may be inst...
