Single-image-based global depth estimation method

A depth estimation technique for single images, applied in the field of depth estimation. It addresses the problems of heavy computation, complex calculation procedures, and discontinuous depth information, and achieves a low computational load, a simple calculation process, and an accurate global depth map.

Examples

Specific Embodiment 1

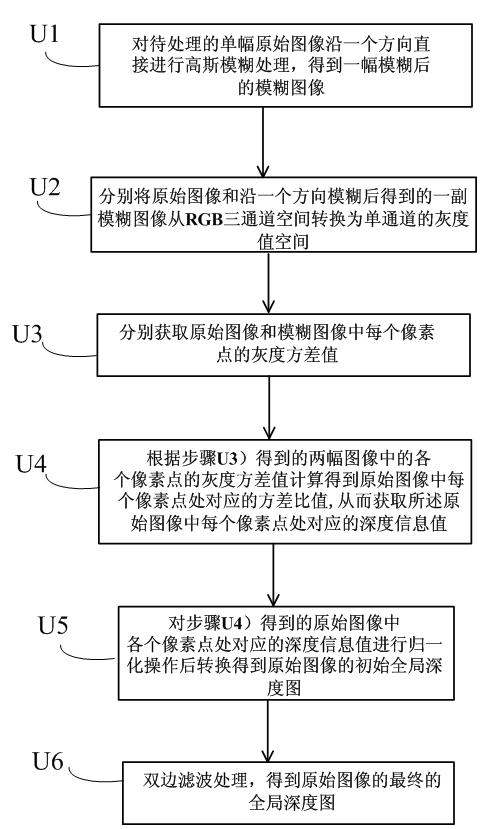

[0012] Figure 1 shows the flow chart of the global depth estimation method in this specific embodiment, which includes the following steps:

[0013] U1) Apply Gaussian blur to the single original image to be processed to obtain a blurred image. As shown in Figure 1, in this embodiment the Gaussian blur is applied directly along a single direction, which the user can set arbitrarily.
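The single-direction blur in step U1 can be illustrated with a minimal sketch. It is not the patent's implementation: the function names `gaussian_kernel` and `blur_1d`, the parameters `sigma` and `radius`, the restriction to the x or y axis, and the edge-replication border handling are all assumptions introduced here, since the text does not specify them. The sketch works on one channel stored as a list of rows; an RGB image would be processed one channel at a time.

```python
import math


def gaussian_kernel(sigma, radius):
    """Return normalized 1-D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(k * k) / (2.0 * sigma * sigma))
               for k in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_1d(image, sigma=1.0, radius=2, axis="x"):
    """Blur a 2-D list of numbers along one axis only.

    axis="x" smooths along each row, axis="y" along each column.
    Pixels outside the image are replaced by the nearest edge pixel
    (an assumption; the source does not state the border rule).
    """
    kernel = gaussian_kernel(sigma, radius)
    height, width = len(image), len(image[0])
    out = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0.0
            for k, w in zip(range(-radius, radius + 1), kernel):
                if axis == "x":
                    xx = min(max(x + k, 0), width - 1)
                    acc += w * image[y][xx]
                else:
                    yy = min(max(y + k, 0), height - 1)
                    acc += w * image[yy][x]
            out[y][x] = acc
    return out
```

For an image containing a vertical edge, `blur_1d(image, axis="x")` softens the edge while `blur_1d(image, axis="y")` leaves it essentially unchanged, which shows why the choice of direction matters.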

[0014] U2) Convert both the original image and the blurred image obtained from the blurring step from the three-channel RGB space to a single-channel grayscale space.

[0015] In this specific embodiment, grayscale conversion uses Formula 5:

[0016] G(x,y)=0.11×R(x,y)+0.59×G’(x,y)+0.3×B(x,y);

[0017] Here, G(x, y) denotes the converted gray value of the pixel at coordinates (x, y) in the image, and R(x, y), G'(x, y), B(x, y) respectively repr...
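As a rough illustration of Formula 5, the sketch below applies the weights exactly as printed in the source (0.11 for R, 0.59 for G', 0.3 for B). The function name `to_gray` and the representation of an image as rows of `(r, g, b)` tuples are assumptions made for this example. Note that the widely used ITU-R BT.601 weighting assigns about 0.3 to red and 0.11 to blue; the sketch keeps the source's assignment rather than guessing at the intent.

```python
def to_gray(rgb_image):
    """Convert rows of (r, g, b) tuples to gray values using Formula 5.

    The coefficients are copied from the source text as printed:
    G = 0.11 * R + 0.59 * G' + 0.3 * B.
    """
    return [[0.11 * r + 0.59 * g + 0.3 * b for (r, g, b) in row]
            for row in rgb_image]
```

Because the three weights sum to 1, a neutral pixel such as `(100, 100, 100)` keeps its value (up to floating-point rounding) after conversion.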

Specific Embodiment 2

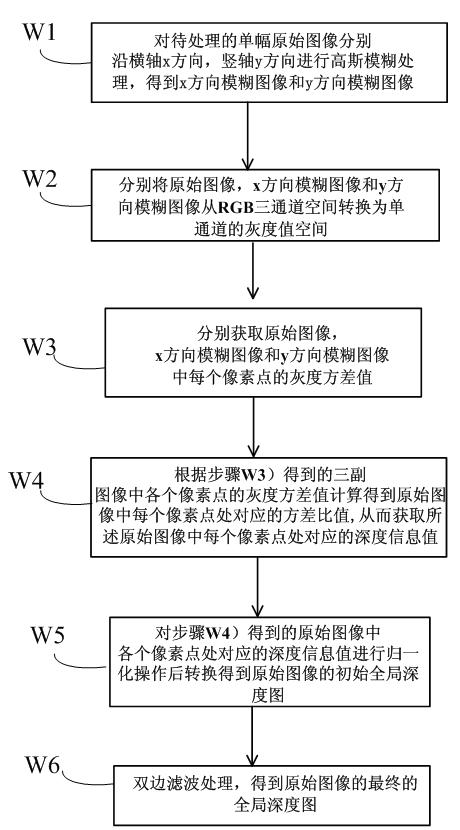

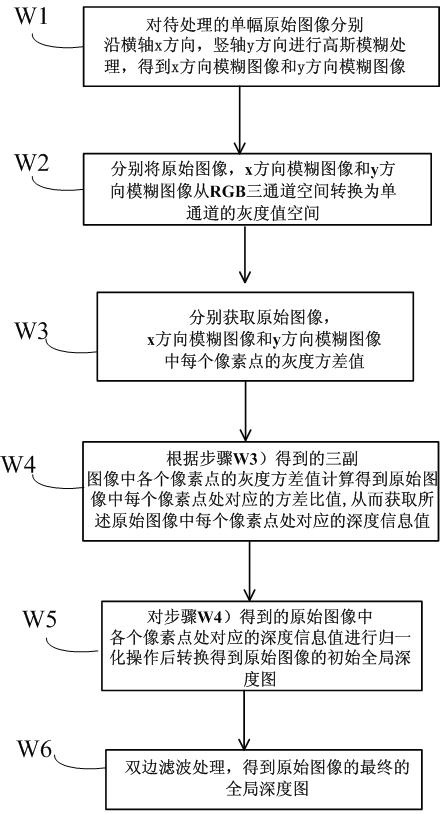

[0039] This specific embodiment differs from Embodiment 1 in that Gaussian blur is applied along both the x direction (horizontal axis) and the y direction (vertical axis). When the variance ratio is calculated, the variance ratio Rx in the x direction and the variance ratio Ry in the y direction are weighted to obtain the variance ratio R at each pixel of the original image.
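The weighting of Rx and Ry can be sketched as a per-pixel weighted sum. This is only one plausible reading: the visible text does not give the weights or the exact combination rule, so the function name `combine_variance_ratios`, the parameters `weight_x` and `weight_y`, and their default value of 0.5 are hypothetical.

```python
def combine_variance_ratios(rx, ry, weight_x=0.5, weight_y=0.5):
    """Combine two same-sized 2-D ratio maps into R = weight_x*Rx + weight_y*Ry.

    The weights are placeholders; the source does not state them.
    """
    if len(rx) != len(ry) or any(len(a) != len(b) for a, b in zip(rx, ry)):
        raise ValueError("Rx and Ry must have the same shape")
    return [[weight_x * a + weight_y * b for a, b in zip(row_x, row_y)]
            for row_x, row_y in zip(rx, ry)]
```

Choosing weights that sum to 1 keeps R on the same scale as Rx and Ry, which is a design convenience rather than something the text requires.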

[0040] Figure 2 shows the flow chart of the global depth estimation method in this specific embodiment, which includes the following steps:

[0041] W1) Apply Gaussian blur to the single original image to be processed to obtain a blurred image. As shown in Figure 2, in this specific embodiment the Gaussian blur is applied along the x direction (horizontal axis) and the y direction (vertical axis), yielding two blurred images, which ...
