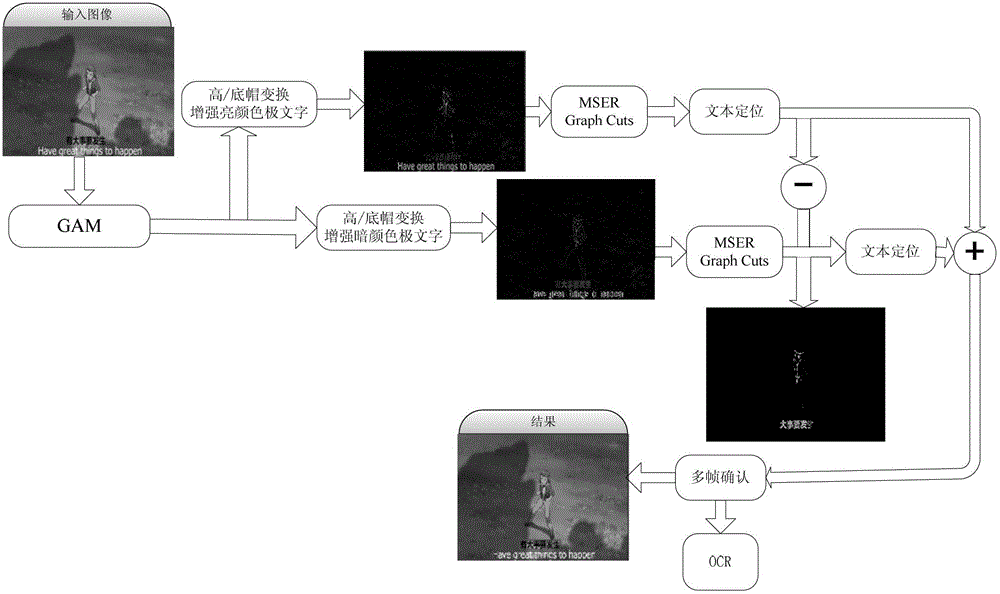

Morphological filtering enhancement-based maximally stable extremal region (MSER) video text detection method

A morphological-filtering and text-detection technique, applied in image enhancement, image data processing, instruments, and related fields. It addresses problems such as blurred text boundaries and low contrast between the video background and the video text.

Embodiment Construction

[0060] Step 1: In the framework of the present invention, subtitles persist on screen across many consecutive frames, so there is no need to process every frame; one frame out of every five is taken for processing. Because the present invention operates at the pixel level, a large frame takes a long time to process, which degrades real-time performance. Therefore, before image processing, each video frame is first resized to 448 × 336 by linear interpolation.
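The frame sampling and resizing in Step 1 can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the patented implementation: the function names `sample_frame_indices` and `resize_bilinear` are hypothetical, frames are assumed to be grayscale images stored as lists of rows, and "linear interpolation" is assumed to mean bilinear interpolation using the align-corners convention (output corner pixels map exactly onto input corner pixels).

```python
from typing import List

Image = List[List[float]]  # assumption: grayscale frame stored as rows of pixel values


def sample_frame_indices(num_frames: int, step: int = 5) -> List[int]:
    """Indices of the frames to process: one frame out of every `step` frames."""
    return list(range(0, num_frames, step))


def resize_bilinear(img: Image, out_w: int = 448, out_h: int = 336) -> Image:
    """Resize a grayscale image with bilinear interpolation (align-corners convention)."""
    in_h, in_w = len(img), len(img[0])
    # Scale factors that map output coordinates back to input coordinates.
    sx = (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
    sy = (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
    out: Image = []
    for y in range(out_h):
        fy = y * sy
        y0 = int(fy)
        y1 = min(y0 + 1, in_h - 1)
        wy = fy - y0  # vertical interpolation weight
        row = []
        for x in range(out_w):
            fx = x * sx
            x0 = int(fx)
            x1 = min(x0 + 1, in_w - 1)
            wx = fx - x0  # horizontal interpolation weight
            # Interpolate horizontally on the two neighbouring rows, then vertically.
            top = img[y0][x0] * (1 - wx) + img[y0][x1] * wx
            bottom = img[y1][x0] * (1 - wx) + img[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


if __name__ == "__main__":
    print(sample_frame_indices(12))  # frames 0, 5 and 10 are processed
    small = resize_bilinear([[0, 100], [100, 200]], out_w=3, out_h=3)
    print(small[1][1])  # centre pixel is the mean of the four corners: 100.0
```

In practice a library routine such as OpenCV's `cv2.resize(frame, (448, 336), interpolation=cv2.INTER_LINEAR)` would normally be used instead; it maps pixel centres rather than pixel corners, so its output can differ slightly from this sketch near the image borders.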

[0061] In the HSI color space, the Euclidean distance between two points is approximately proportional to the color difference perceived by the human eye. The space also has an important property: the brightness (intensity) component and the chrominance components are separated, and the intensity component I is independent of the image's color information. That is, chromaticity and brightness are relatively independent in the HSI color space, so in the...
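The separation of intensity from chrominance described in [0061] can be illustrated with a per-pixel RGB-to-HSI conversion. The passage is truncated, so the exact conversion used by the method is not shown; the sketch below assumes the standard geometric formulation found in image-processing textbooks, with RGB components normalised to [0, 1]. The function name `rgb_to_hsi` is hypothetical.

```python
import math
from typing import Tuple


def rgb_to_hsi(r: float, g: float, b: float) -> Tuple[float, float, float]:
    """Convert one RGB pixel (components in [0, 1]) to (H in degrees, S, I).

    Assumption: standard geometric RGB-to-HSI formulas, not taken from the patent.
    """
    total = r + g + b
    # Intensity is the plain channel average; it carries no hue or saturation information.
    i = total / 3.0
    if total == 0:
        return 0.0, 0.0, 0.0  # black pixel: hue and saturation are undefined
    s = 1.0 - 3.0 * min(r, g, b) / total
    num = 0.5 * ((r - g) + (r - b))
    # Mathematically non-negative; max() guards against tiny negative rounding errors.
    den = math.sqrt(max(0.0, (r - g) ** 2 + (r - b) * (g - b)))
    if den == 0:
        h = 0.0  # grey pixel (r == g == b): hue undefined, set to 0 by convention
    else:
        # Clamp the ratio so rounding cannot push it outside the domain of acos.
        theta = math.degrees(math.acos(max(-1.0, min(1.0, num / den))))
        h = theta if b <= g else 360.0 - theta
    return h, s, i


if __name__ == "__main__":
    print(rgb_to_hsi(1.0, 0.0, 0.0))  # pure red: hue 0, full saturation, intensity 1/3
    print(rgb_to_hsi(0.5, 0.5, 0.5))  # mid grey: (0.0, 0.0, 0.5)
```

Because I depends only on the channel sum, changing a pixel's hue while keeping R + G + B fixed leaves its intensity unchanged, which is the independence property the paragraph relies on.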
