Method and device for quantizing local features of picture into visual vocabularies

A local-feature and visual-vocabulary technology, applied in special data processing applications, instruments, electrical digital data processing, etc. It addresses problems such as large computing overhead, poor robustness, and quantization error, with the effect of reducing computing overhead, improving robustness, and reducing quantization error.

Examples

Embodiment 1

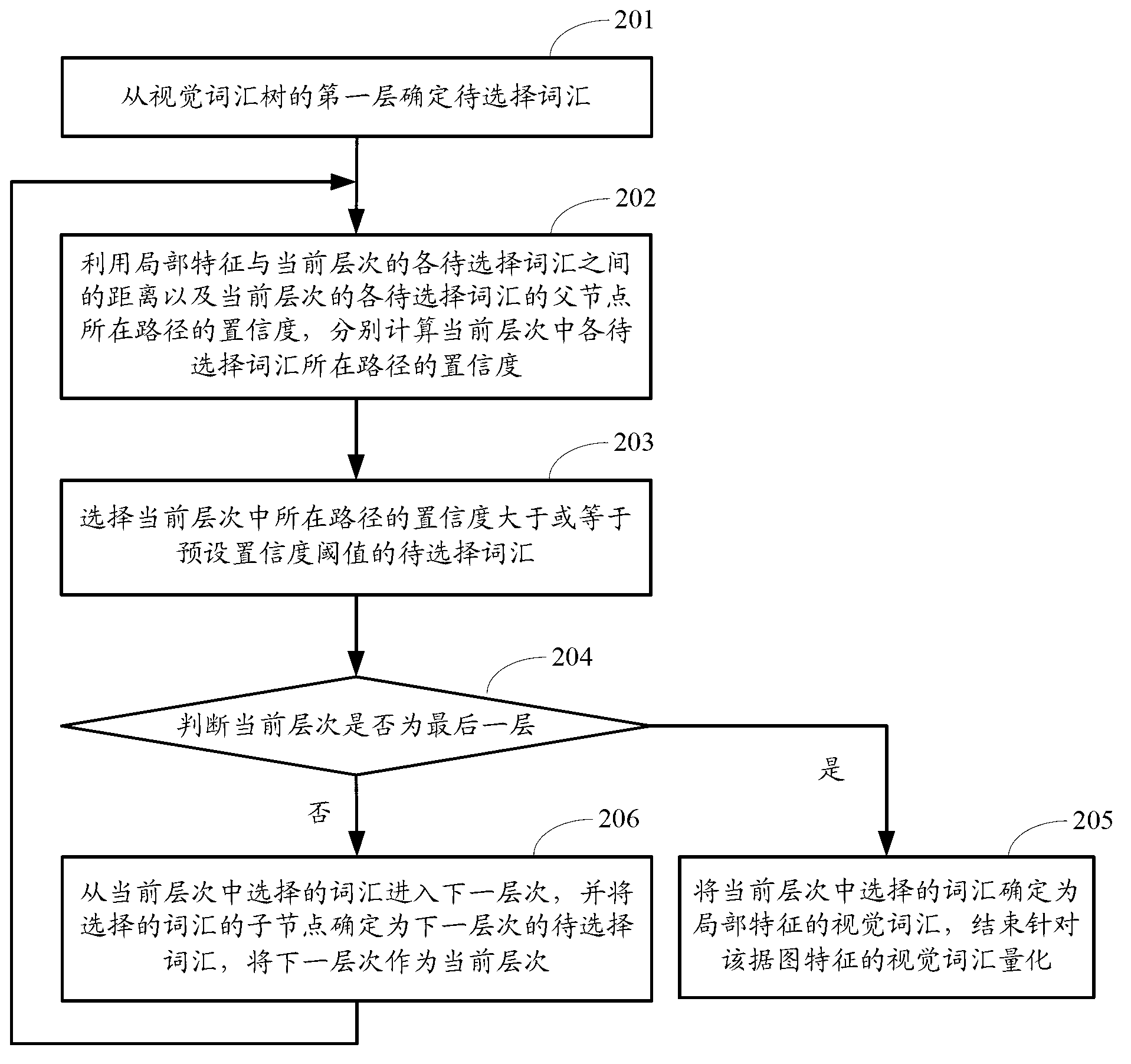

[0037] In this embodiment of the present invention, a fixed number of vocabulary entries is no longer selected at each layer. Instead, the concept of path confidence is introduced, and the path confidence is used to determine whether to select the vocabulary corresponding to a path and enter the next layer of nodes. Specifically, as shown in Figure 2, when the visual vocabulary tree is queried for each local feature of the picture, the following steps are performed, starting from the first layer of the visual vocabulary tree:

[0038] Step 201: Determine the vocabulary to be selected from the first layer of the visual vocabulary tree.

[0039] In this embodiment of the present invention, all the vocabulary in the first layer can be used as the vocabulary to be selected. Alternatively, other selection methods can be used, in which the vocabulary of only one or several nodes serves as the vocabulary to be selected.
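The idea in paragraphs [0037] to [0039] can be illustrated with a minimal Python sketch. It is not the patented implementation: the text above does not define how path confidence is computed, so the sketch assumes a softmax over negative Euclidean distances among sibling nodes, multiplied along the path. The names `Node`, `quantize`, `_sibling_weights`, and the `threshold` parameter are all hypothetical and chosen only for illustration.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Node:
    """One visual word (cluster centre) in a hypothetical vocabulary tree."""
    word_id: int
    center: list
    children: list = field(default_factory=list)


def _distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def _sibling_weights(feature, nodes):
    """ASSUMPTION: relative confidence of each sibling, modelled as a
    softmax over negative distances. The source does not give a formula."""
    scores = [math.exp(-_distance(feature, n.center)) for n in nodes]
    total = sum(scores)
    return [s / total for s in scores]


def quantize(feature, first_layer, threshold=0.3):
    """Return (word_id, path_confidence) for every leaf reached along a
    path whose confidence never drops below the (hypothetical) threshold."""
    # Step 201: take every first-layer word as a candidate.
    weights = _sibling_weights(feature, first_layer)
    frontier = list(zip(first_layer, weights))
    selected = []
    while frontier:
        next_frontier = []
        for node, conf in frontier:
            if conf < threshold:
                continue  # path confidence too low: do not select this word
            if not node.children:
                selected.append((node.word_id, conf))  # leaf word reached
                continue
            # Enter the next layer; confidence accumulates along the path.
            child_weights = _sibling_weights(feature, node.children)
            next_frontier.extend(
                (child, conf * w) for child, w in zip(node.children, child_weights)
            )
        frontier = next_frontier
    return selected
```

Because selection is gated by confidence rather than by a fixed count, the number of words kept per layer varies with the feature: an ambiguous feature near a cluster boundary can follow several paths, while an unambiguous one follows a single path.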

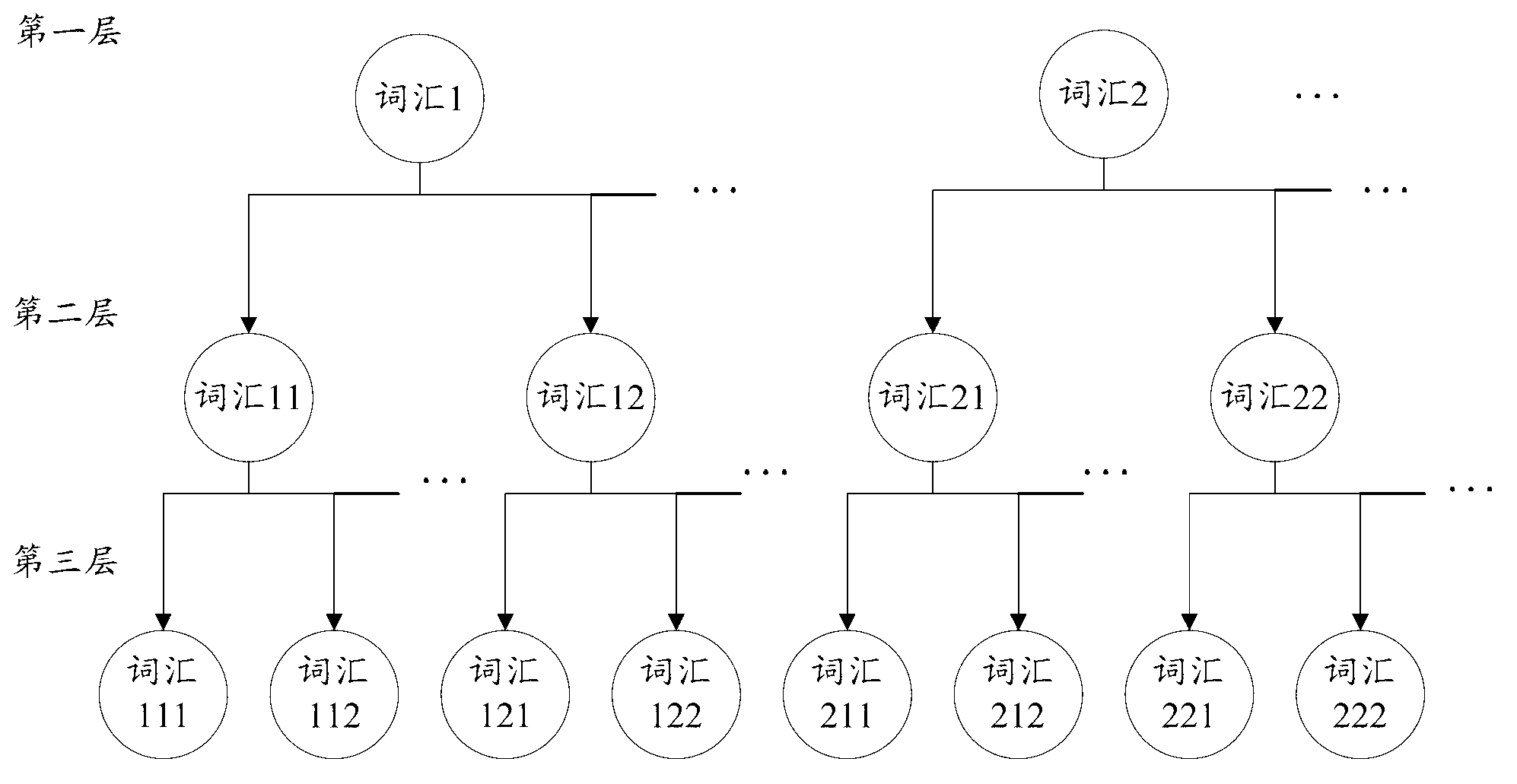

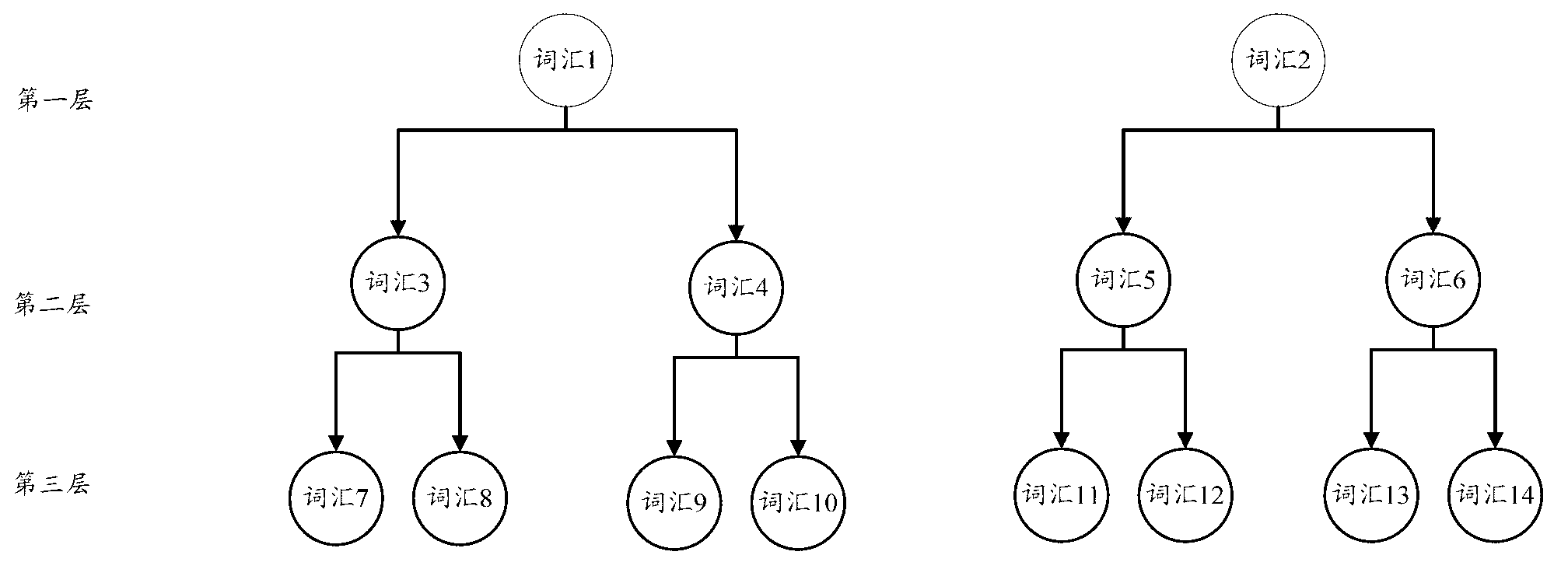

[0040] For the convenience of understanding, a brief introduct...

Embodiment 2

[0062] Figure 4 is a structural diagram of the device provided by the second embodiment of the present invention. As shown in Figure 4, the device may include: an initial query unit 401, a confidence calculation unit 402, a selection judgment unit 403, and a visual vocabulary determination unit 404.

[0063] Quantizing local features with the visual vocabulary tree is in effect the process of querying the visual vocabulary tree for each local feature of the picture. During this query, the initial query unit 401 determines the vocabulary to be selected from the first layer of the visual vocabulary tree, takes the first layer as the current layer, and triggers the confidence calculation unit 402.

[0064] Specifically, the initial query unit 401 may use all the vocabulary in the first layer as the vocabulary to be selected. Besides this method, other sele...
