Method and system for determining cache set replacement order based on temporal set recording

A technique for ordering cache set replacement, applied in the field of data processing

Embodiment Construction

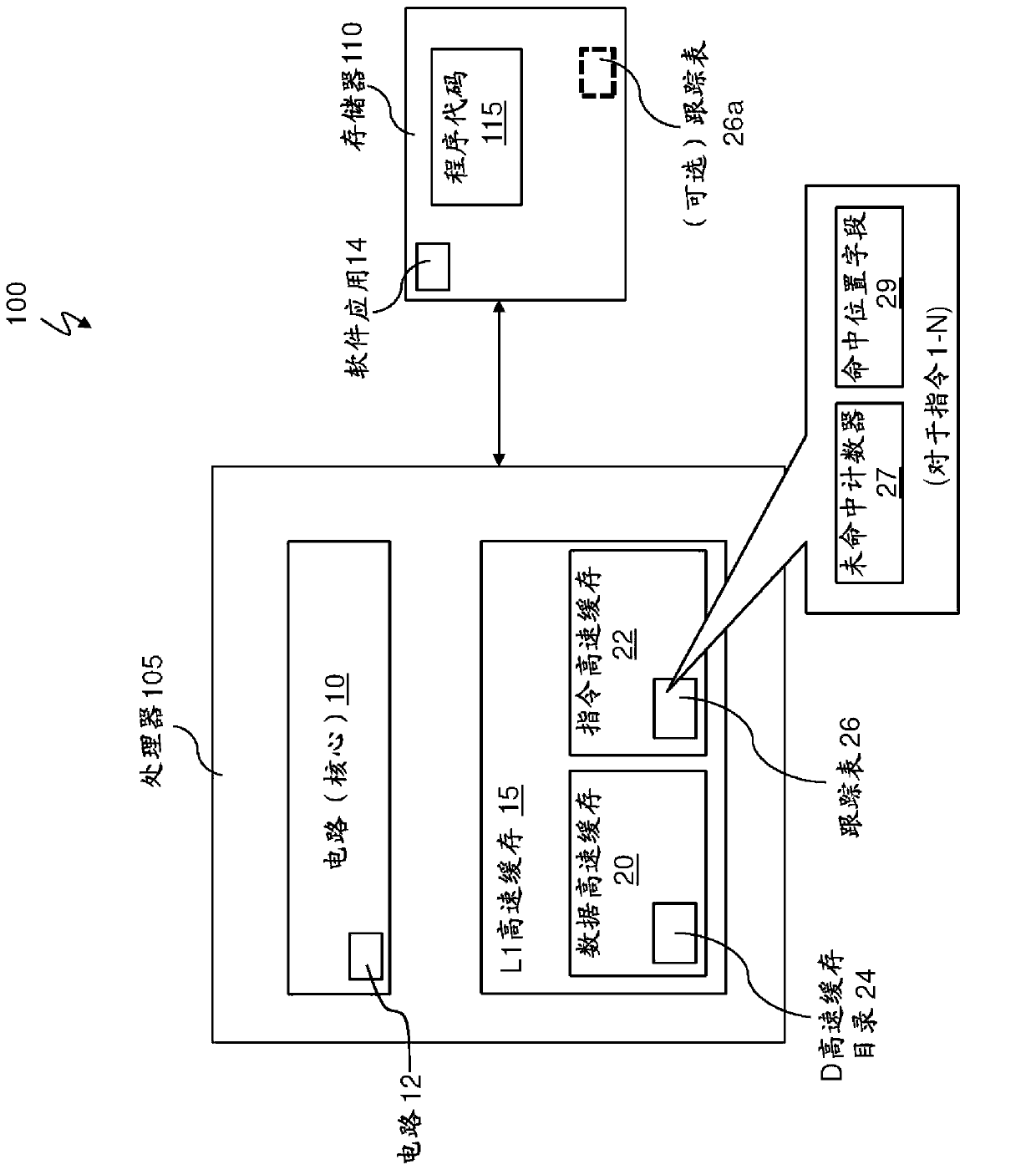

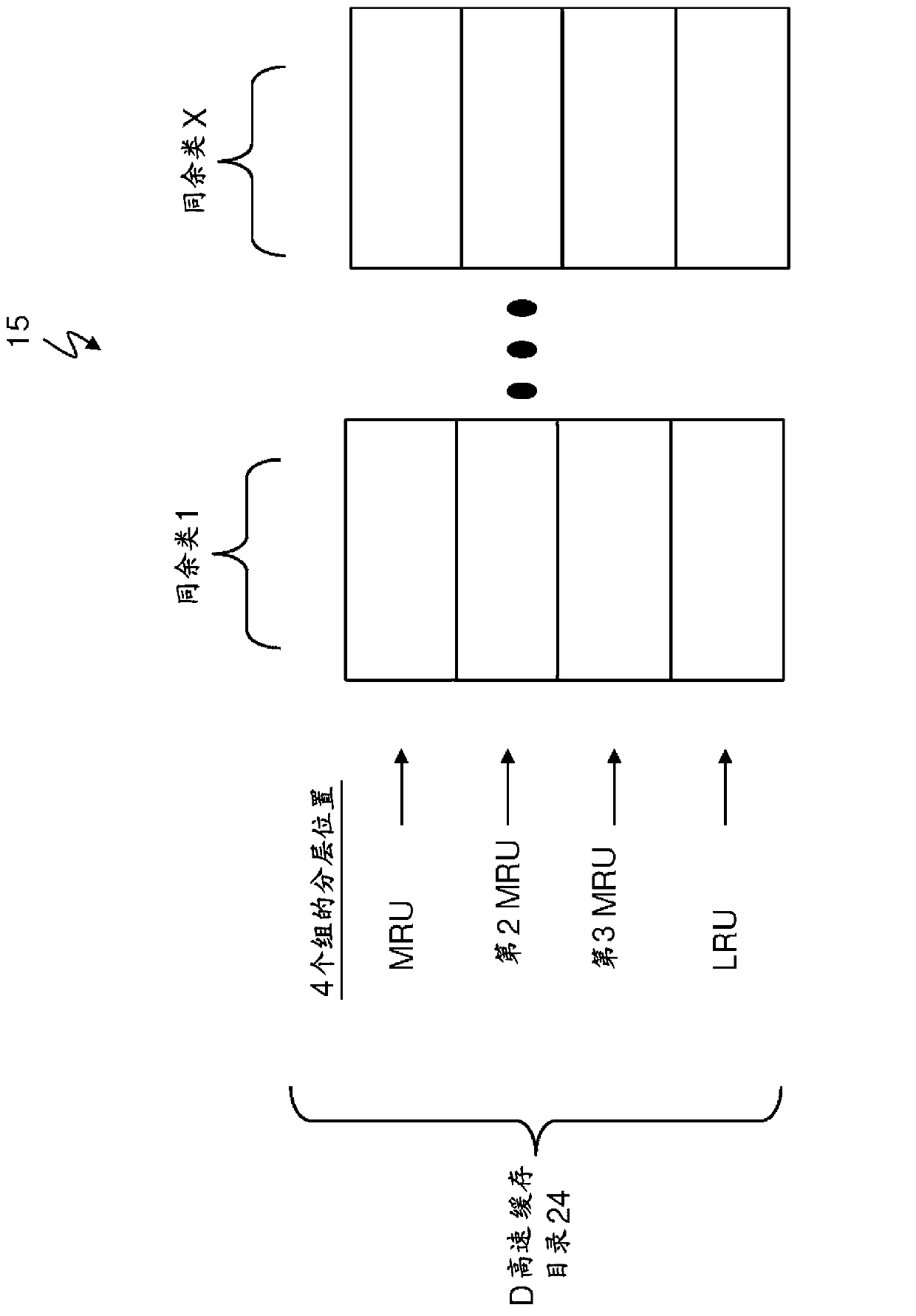

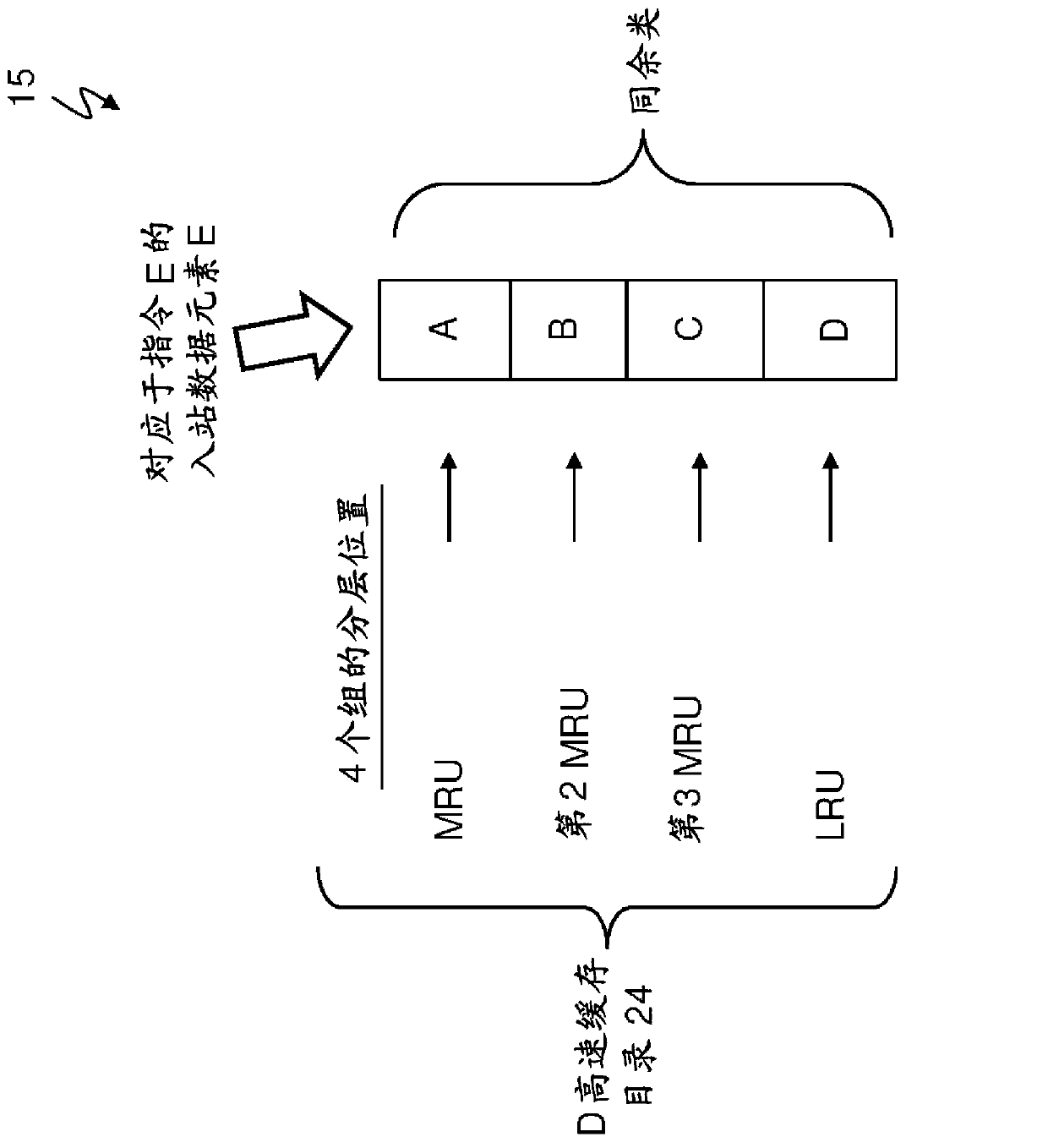

[0014] Microprocessors may contain a level 1 (L1) data cache (D-cache). The L1 data cache holds data elements for a subset of system memory locations so that load and store instructions can be processed as close to the processor core as possible. On a cache miss, the data element corresponding to the requested memory location is installed into the cache. Each entry in the cache represents a cache line that corresponds to a portion of memory. One typical install algorithm for a cache is based on least recently used (LRU) ordering. As an example, the data cache may contain 1024 rows, called congruence classes, and may be 4-way set associative, for a total of 4k entries. For each congruence class, the ordering of the sets from most recently used to least recently used can be tracked as a set of hierarchy positions for replacement. When installing a new entry into the data cache for a given congruence class in one of the 4...
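
The LRU bookkeeping described in this paragraph can be illustrated with a minimal sketch. It models only the conventional baseline (1024 congruence classes, 4 ways, per-class ordering from most to least recently used), not the temporal set recording of the invention. The class name `LruCache`, the `access` method, the 64-byte line size, and the address-to-class mapping are all assumptions made for illustration; none of them come from the text.

```python
NUM_CLASSES = 1024   # congruence classes (rows), as in the example above
NUM_WAYS = 4         # 4-way set associative, as in the example above
LINE_SIZE = 64       # bytes per cache line: an assumption, not stated in the text


class LruCache:
    """Hypothetical model of a set-associative cache with per-class LRU order."""

    def __init__(self):
        # tags[c][w] is the tag held in way w of congruence class c (None if empty).
        self.tags = [[None] * NUM_WAYS for _ in range(NUM_CLASSES)]
        # order[c] lists the ways of class c from most to least recently used.
        self.order = [list(range(NUM_WAYS)) for _ in range(NUM_CLASSES)]

    def access(self, address):
        """Return True on a hit, False on a miss (the line is installed on a miss)."""
        line = address // LINE_SIZE
        cls = line % NUM_CLASSES      # assumed mapping of a line to a congruence class
        tag = line // NUM_CLASSES
        ways = self.tags[cls]
        order = self.order[cls]
        if tag in ways:
            way = ways.index(tag)
            hit = True
        else:
            way = order[-1]           # the least recently used way is replaced
            ways[way] = tag
            hit = False
        order.remove(way)
        order.insert(0, way)          # the touched way becomes most recently used
        return hit


if __name__ == "__main__":
    cache = LruCache()
    stride = LINE_SIZE * NUM_CLASSES  # addresses this far apart share a congruence class
    for i in range(5):
        print(i, cache.access(i * stride))
```

Here the fifth distinct line mapped to the same congruence class displaces whichever of the four resident lines was touched least recently. Real hardware typically encodes the ordering in a few bits per class rather than in a list; the list is used here only for readability.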
