Network violent video identification method

A violent-video identification technology, applied in the field of video classification, that addresses the problems of small data volume and degraded performance and processing speed, and achieves the effect of reducing dimensionality and space complexity.

Embodiment Construction

[0012] In order to make the object, technical solution and advantages of the present invention clearer, the present invention will be described in further detail below in conjunction with specific embodiments and with reference to the accompanying drawings.

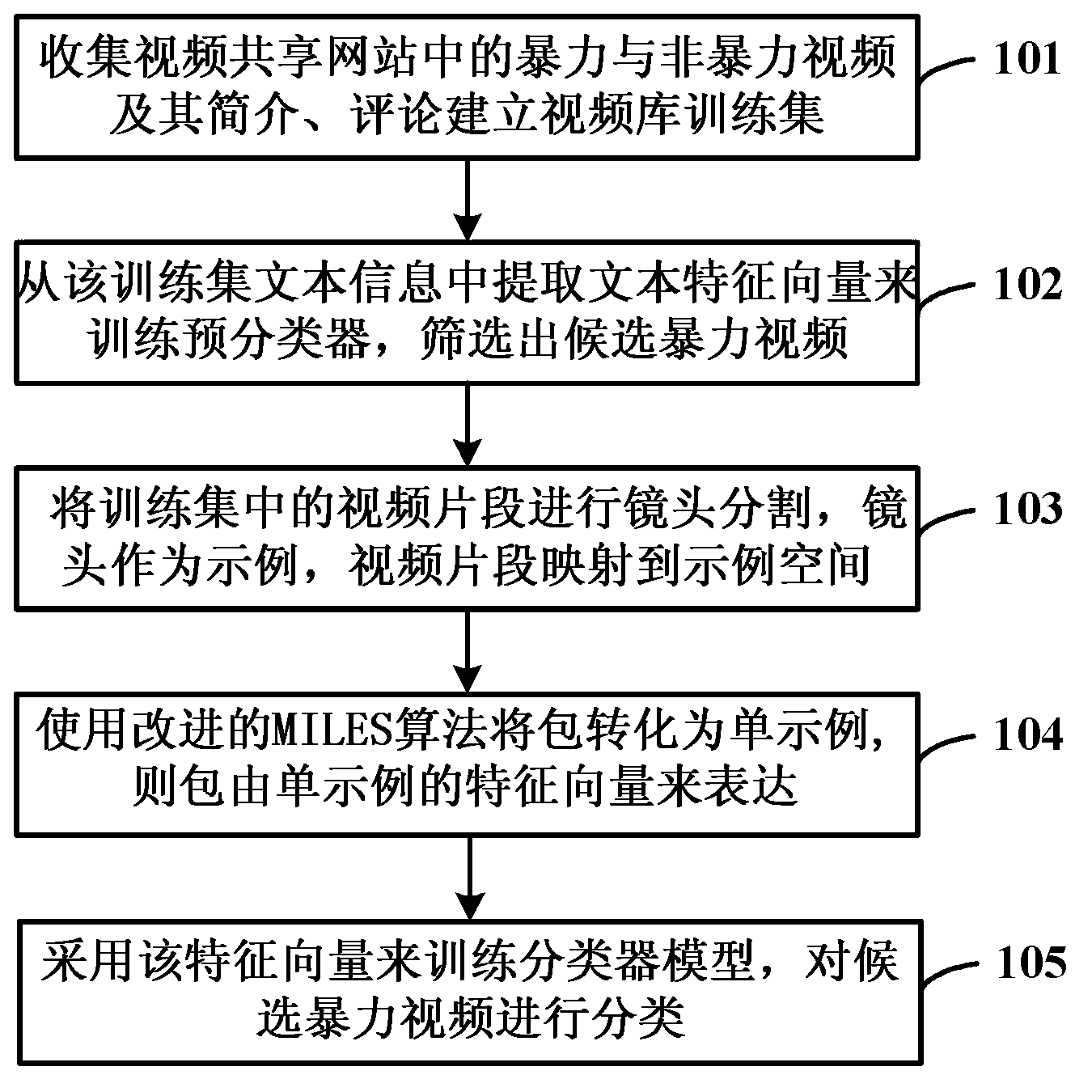

[0013] The invention proposes a method for identifying violent videos on the network. In this method, violent and non-violent videos from video-sharing websites, together with their descriptions and comments, are collected as samples to build a video training set. Text features related to the training-set videos are extracted from the training set's text information, and the resulting text feature vectors are used to train a pre-classifier model. The pre-classifier then classifies new video samples to obtain candidate violent videos. The video clips in the training set are segmented into shots, low-level features such as visual and audio features are extracted from each shot to form a feature vector representing that shot, and the shot is regarde...
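The two-stage pipeline described in [0013] can be sketched as follows. It consists of a text-based pre-classifier that shortlists candidate violent videos, followed by shot segmentation and per-shot feature vectors. This is a minimal illustration under stated assumptions, not the patented implementation. The excerpt names neither the classifier nor the shot-boundary method, so the following are all hypothetical choices made for illustration:

- multinomial naive Bayes as the classifier,
- a colour-histogram-difference cut detector for shot boundaries,
- the helper names `TextPreClassifier`, `candidate_videos`, `segment_shots` and `shot_feature_vector`,
- the `"violent"` label and both thresholds.

Frames are assumed to be already decoded into normalized colour histograms, with one audio-energy value per frame.

```python
import math
from collections import Counter


def tokenize(text):
    """Lowercase whitespace tokenizer (a deliberately simple assumption)."""
    return text.lower().split()


class TextPreClassifier:
    """Multinomial naive Bayes over words from a video's title, description
    and comments. Hypothetical stand-in: the source does not name a classifier."""

    def fit(self, texts, labels):
        self.classes = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {c: Counter() for c in self.classes}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set()
        for counts in self.word_counts.values():
            self.vocab.update(counts)
        self.totals = {c: sum(self.word_counts[c].values()) for c in self.classes}
        return self

    def log_scores(self, text):
        n_docs = sum(self.doc_counts.values())
        v = len(self.vocab)
        scores = {}
        for c in self.classes:
            score = math.log(self.doc_counts[c] / n_docs)  # class prior
            for w in tokenize(text):
                # Laplace smoothing so an unseen word does not zero out a class.
                score += math.log((self.word_counts[c][w] + 1) / (self.totals[c] + v))
            scores[c] = score
        return scores

    def prob(self, text, cls):
        """Posterior probability of `cls`, normalized stably via the max score."""
        scores = self.log_scores(text)
        m = max(scores.values())
        exp = {c: math.exp(s - m) for c, s in scores.items()}
        return exp[cls] / sum(exp.values())


def candidate_videos(clf, videos, threshold=0.5):
    """Keep (video_id, text) pairs whose text makes 'violent' likely enough."""
    return [vid for vid, text in videos if clf.prob(text, "violent") >= threshold]


def segment_shots(frame_histograms, cut_threshold=0.5):
    """Return (start, end) frame ranges, cutting wherever the L1 distance
    between consecutive colour histograms exceeds cut_threshold."""
    shots, start = [], 0
    for i in range(1, len(frame_histograms)):
        prev, cur = frame_histograms[i - 1], frame_histograms[i]
        if sum(abs(a - b) for a, b in zip(prev, cur)) > cut_threshold:
            shots.append((start, i))
            start = i
    if frame_histograms:
        shots.append((start, len(frame_histograms)))
    return shots


def shot_feature_vector(frame_histograms, audio_energy, shot):
    """Mean colour histogram of the shot's frames plus its mean audio energy."""
    start, end = shot
    n = end - start
    dims = len(frame_histograms[start])
    visual = [sum(frame_histograms[f][d] for f in range(start, end)) / n
              for d in range(dims)]
    return visual + [sum(audio_energy[start:end]) / n]


clf = TextPreClassifier().fit(
    ["brutal fight blood", "street fight punch", "cute cat video", "cooking pasta tutorial"],
    ["violent", "violent", "non-violent", "non-violent"],
)
print(candidate_videos(clf, [("v1", "bloody fight"), ("v2", "cat cooking")]))  # → ['v1']
```

Filtering on cheap text features first means the more expensive audio-visual analysis runs only on the shortlisted candidates. The per-shot vectors produced here would then feed whatever shot-level model the method uses, which the excerpt does not specify.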
