A Video Event Summary Graph Construction and Matching Method Based on Detail Description

A video event summary graph construction and matching method, applied in the fields of computer vision and information retrieval, which improves the probability of converging to the globally optimal solution.

Embodiment Construction

[0037] The present invention will be described in detail below in conjunction with the accompanying drawings.

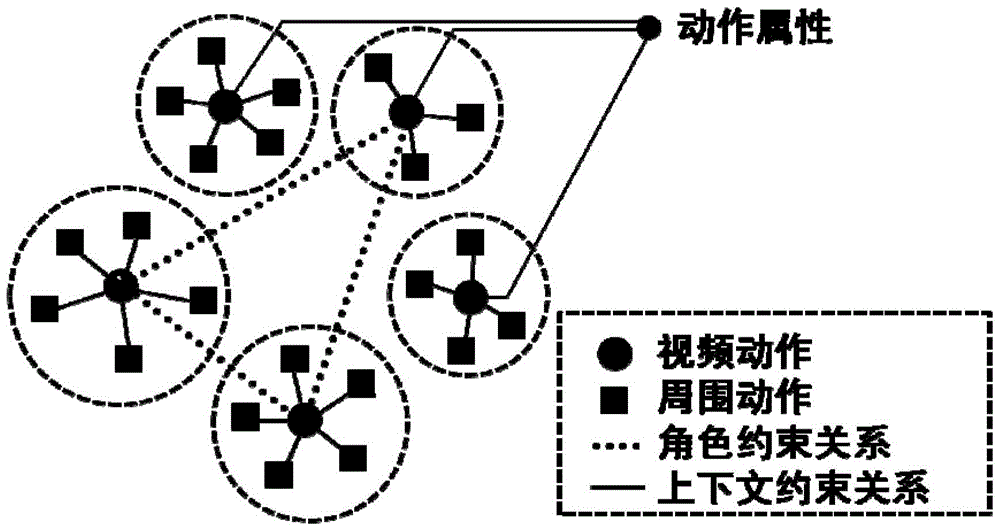

[0038] Refer to Figure 1, a schematic diagram of the event summary graph of the present invention. The event summary graph is an undirected attributed graph. It describes event action information through the semantic attributes of its nodes and event layout information through the relationships between nodes, so as to represent in detail the state of the actions occurring in a given video event. The circular nodes in the figure represent summary actions, and the dotted edges connecting two summary actions represent role constraint relationships. If two actions are performed by the same character, a role constraint relationship exists between the two action nodes. In addition, each summary graph action and its spatially adjacent actions (square nodes in the figure) form a spatial context relationship, which is represented by the solid line in...
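The graph structure described in [0038] can be illustrated with a minimal Python sketch. It is not the patent's implementation: the names `ActionNode`, `EventSummaryGraph`, `link_roles`, and `add_spatial_context`, the `actor` field, and the sample actions are all hypothetical, and spatial adjacency is supplied by the caller because the source does not say how it is computed.

```python
from dataclasses import dataclass, field
from itertools import combinations


@dataclass(frozen=True)
class ActionNode:
    node_id: int
    label: str        # semantic attribute of the action (assumed to be a plain string)
    actor: str        # hypothetical identifier of the character performing the action
    is_summary: bool  # True: summary action (circle); False: adjacent action (square)


@dataclass
class EventSummaryGraph:
    nodes: dict = field(default_factory=dict)
    role_edges: set = field(default_factory=set)     # dotted edges in Figure 1
    spatial_edges: set = field(default_factory=set)  # solid edges in Figure 1

    def add_node(self, node: ActionNode) -> None:
        self.nodes[node.node_id] = node

    def add_spatial_context(self, summary_id: int, neighbor_id: int) -> None:
        # Spatial context links a summary action to a spatially adjacent action.
        if not self.nodes[summary_id].is_summary:
            raise ValueError("spatial context must start from a summary action")
        # frozenset makes the edge undirected: {a, b} equals {b, a}.
        self.spatial_edges.add(frozenset((summary_id, neighbor_id)))

    def link_roles(self) -> None:
        # Two summary actions performed by the same character get a role constraint.
        summary = [n for n in self.nodes.values() if n.is_summary]
        for a, b in combinations(summary, 2):
            if a.actor == b.actor:
                self.role_edges.add(frozenset((a.node_id, b.node_id)))


# Illustrative data only; these actions do not come from the patent.
g = EventSummaryGraph()
g.add_node(ActionNode(1, "open door", "person_A", True))
g.add_node(ActionNode(2, "sit down", "person_A", True))
g.add_node(ActionNode(3, "wave", "person_B", True))
g.add_node(ActionNode(4, "stand nearby", "person_C", False))
g.add_spatial_context(1, 4)
g.link_roles()
```

Storing each edge as a `frozenset` keeps the graph undirected without recording every edge twice, and keeping the two edge sets separate mirrors the dotted and solid edge types that the paragraph distinguishes.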
