Data storage method, data scheduling method, device and system

A data access and data scheduling technology, applied in the field of communication networks, that addresses degraded data access performance, heavy memory occupation on cache nodes, and a complex data access process. It improves data access performance and data processing speed and simplifies the data access process.


Embodiment Construction

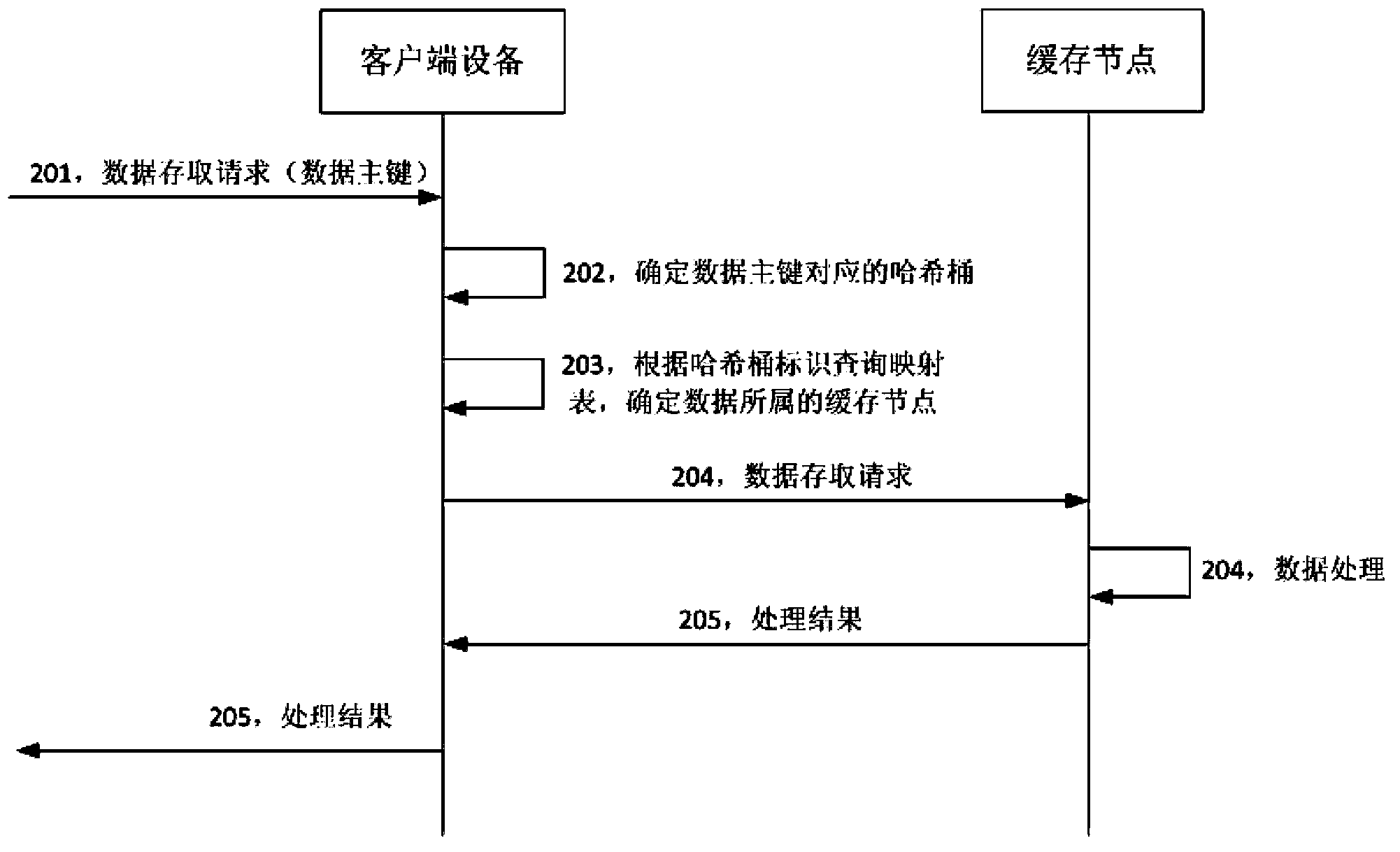

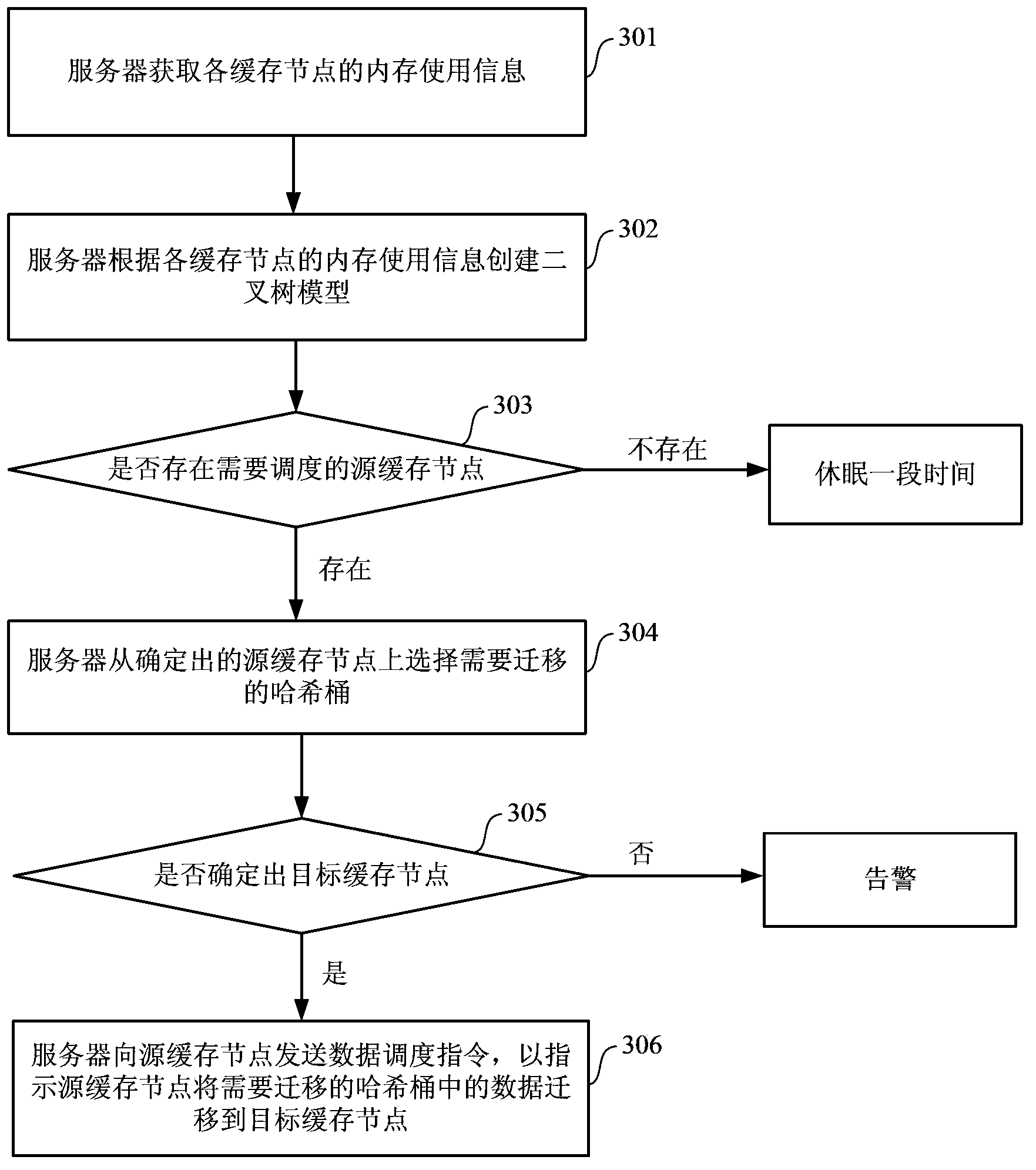

[0040] To address the above problems in the prior art, embodiments of the present invention provide a data access scheme and a data scheduling scheme. The embodiments are described in detail below with reference to the accompanying drawings.

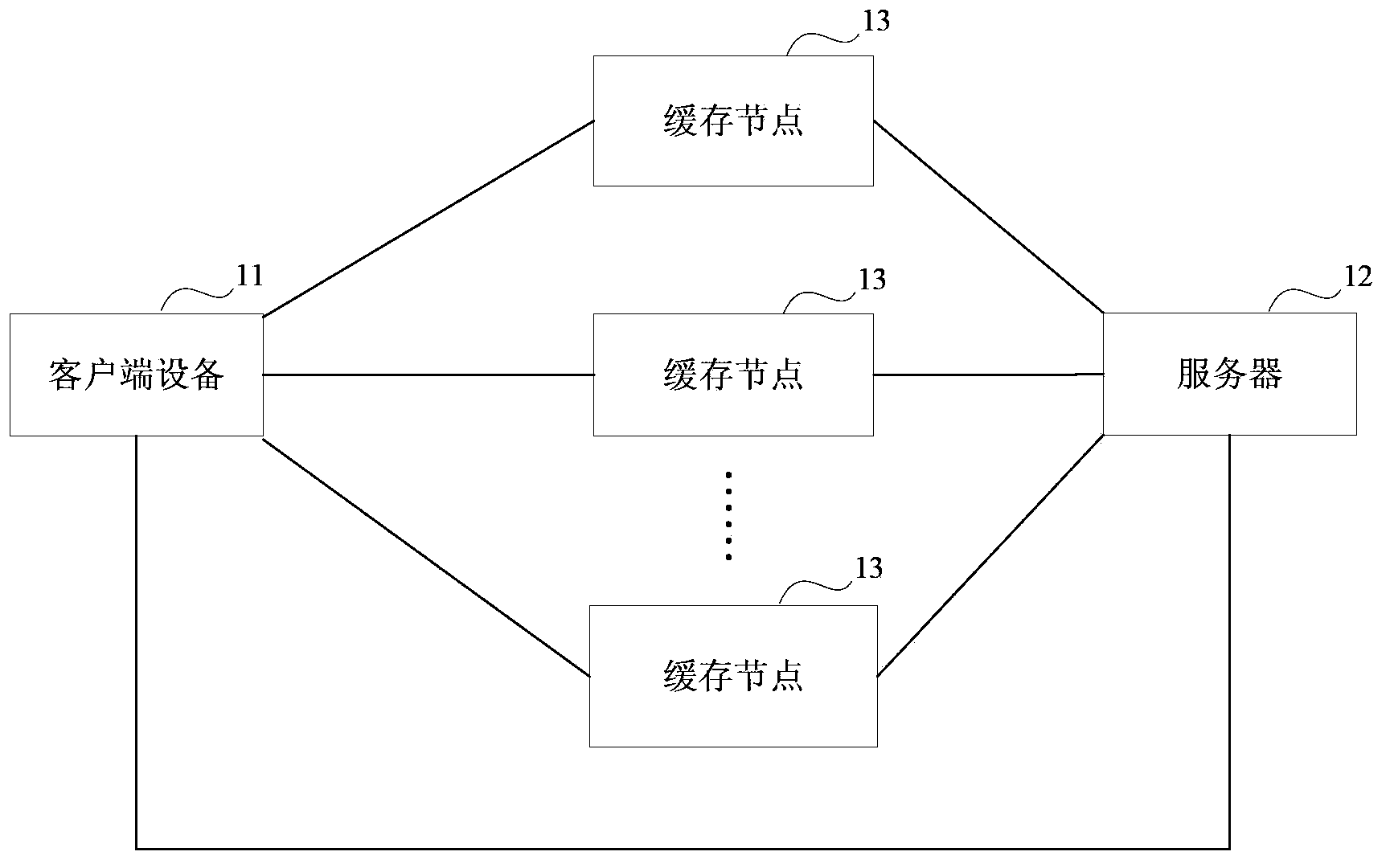

[0041] Figure 1 shows the architecture of the distributed cache system provided by an embodiment of the present invention. The cache system supports data access requests from multiple clients. The system architecture may include a client device 11 (there may be several; only one is shown in the figure), a server 12, and at least two cache nodes 13. The client device 11 issues data access requests; the server 12 monitors the cache nodes 13 and schedules how data is distributed among them; each cache node 13 caches data in memory and responds to data access requests from the client device 11.
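The division of roles in Figure 1 can be illustrated with a minimal in-process Python sketch. All class and method names (`CacheNode`, `SchedulingServer`, `Client`, `assign`, `locate`, `load_report`) are hypothetical. Two behaviors are assumptions not stated in the text above: the client asks the server which cache node holds a key before contacting that node, and the server places each new key on the node currently using the least memory. The sketch shows only the responsibilities of client device 11, server 12, and cache nodes 13; it is not the patented storage or scheduling method.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class CacheNode:
    """Cache node 13: keeps data in memory and answers client requests."""
    node_id: str
    store: Dict[str, bytes] = field(default_factory=dict)

    def put(self, key: str, value: bytes) -> None:
        self.store[key] = value

    def get(self, key: str) -> Optional[bytes]:
        return self.store.get(key)

    def memory_used(self) -> int:
        # Hypothetical load metric: total bytes of cached values.
        return sum(len(v) for v in self.store.values())


class SchedulingServer:
    """Server 12: monitors the cache nodes and decides where each key lives.

    ASSUMPTION: the least-loaded placement rule is illustrative only.
    """

    def __init__(self, nodes: List[CacheNode]):
        if len(nodes) < 2:
            raise ValueError("the architecture assumes at least two cache nodes")
        self.nodes = {n.node_id: n for n in nodes}
        self.placement: Dict[str, str] = {}  # key -> node_id

    def load_report(self) -> Dict[str, int]:
        # Monitoring: collect each node's current memory usage.
        return {nid: n.memory_used() for nid, n in self.nodes.items()}

    def assign(self, key: str) -> CacheNode:
        # Scheduling: keep an existing placement, else choose the least-loaded
        # node (ties go to the node registered first).
        if key not in self.placement:
            report = self.load_report()
            self.placement[key] = min(report, key=report.get)
        return self.nodes[self.placement[key]]

    def locate(self, key: str) -> Optional[CacheNode]:
        nid = self.placement.get(key)
        return self.nodes[nid] if nid is not None else None


class Client:
    """Client device 11: asks the server where a key lives, then uses that node.

    ASSUMPTION: the per-request lookup through the server is hypothetical.
    """

    def __init__(self, server: SchedulingServer):
        self.server = server

    def write(self, key: str, value: bytes) -> str:
        node = self.server.assign(key)
        node.put(key, value)
        return node.node_id

    def read(self, key: str) -> Optional[bytes]:
        node = self.server.locate(key)
        return node.get(key) if node is not None else None


if __name__ == "__main__":
    server = SchedulingServer([CacheNode("node-a"), CacheNode("node-b")])
    client = Client(server)
    print(client.write("user:1", b"alice"))  # node-a
    print(client.write("user:2", b"bob"))    # node-b
    print(client.read("user:1"))             # b'alice'
```

In a real deployment the direct method calls would be network requests, and the least-loaded rule would be replaced by whatever scheduling policy the server actually applies.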

[0042] The distributed cache system of the embodiment of the present i...
