Data cleaning method for data files and data file processing method

The technology concerns data files and methods for processing them, applied in the fields of electrical digital data processing, special data processing applications, instruments, etc. It addresses problems such as source data that no longer meets the needs of statistical analysis, and achieves the effects of reducing processing burden, ensuring integrity, and saving storage space.

Detailed Description of Embodiments

[0037] The present invention will be described in detail below in conjunction with the accompanying drawings.

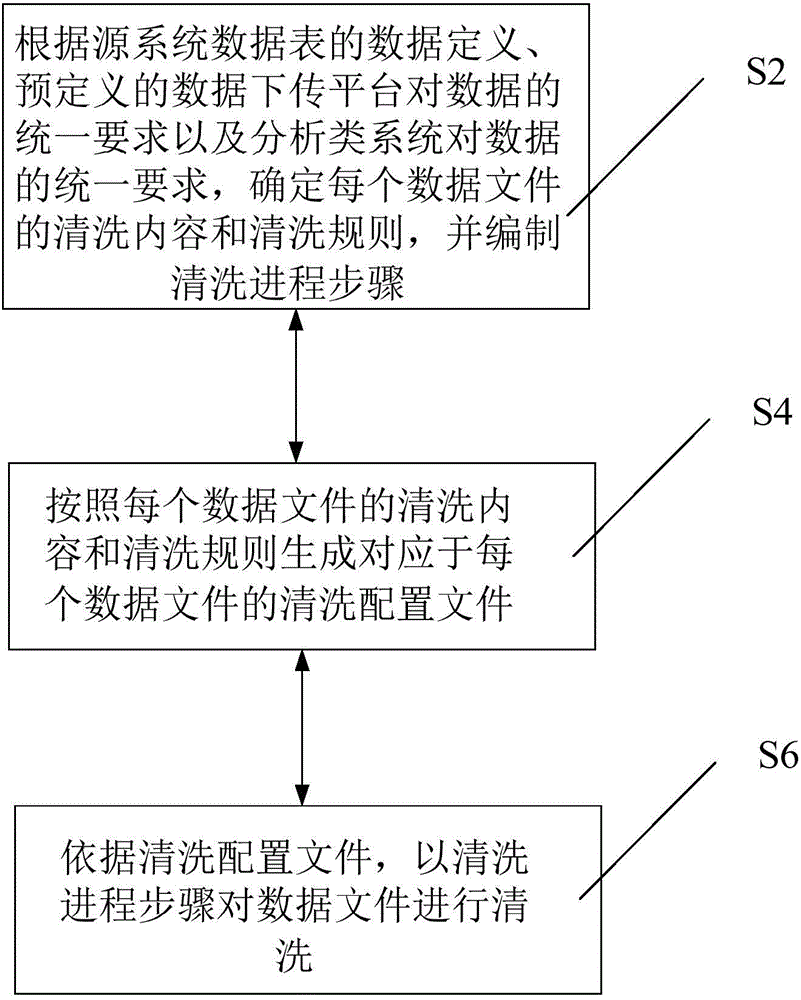

[0038] According to an embodiment of the present invention, a data cleaning method for data files is provided. The data files come from various source systems and may also be called source files, source file data, or data files from source systems. Figure 1 is a flowchart of the data cleaning method for a data file according to an embodiment of the present invention. The data cleaning method includes:

[0039] Step S2: Determine the cleaning content and cleaning rules for each data file according to the data definitions of the source system's data tables, the predefined data download platform's unified requirements for data, and the analysis system's unified requirements for data, and compile the cleaning process steps. Specifically, the data download platform is a download intermediary for downloading data files o...
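As an illustration only, step S2 can be pictured as merging three sets of rules into one ordered list of cleaning steps per data file. The Python sketch below is a minimal example under assumptions: the names `CleaningPlan`, `build_plan`, and `apply_plan`, the per-column rule format, and the sample rules are all hypothetical and are not taken from the patent text.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# Assumption: a cleaning rule maps one raw field value to a cleaned value.
Rule = Callable[[str], str]


@dataclass
class CleaningPlan:
    """Ordered cleaning steps for one data file (hypothetical structure)."""
    file_name: str
    # Each step is (rule source, column name, rule function).
    steps: List[Tuple[str, str, Rule]] = field(default_factory=list)


def build_plan(
    file_name: str,
    table_definition_rules: Dict[str, Rule],
    platform_rules: Dict[str, Rule],
    analysis_rules: Dict[str, Rule],
) -> CleaningPlan:
    """Compile the per-file cleaning steps from the three rule sources."""
    plan = CleaningPlan(file_name)
    sources = (
        ("source_table_definition", table_definition_rules),
        ("download_platform", platform_rules),
        ("analysis_system", analysis_rules),
    )
    for source, rules in sources:
        for column, rule in rules.items():
            plan.steps.append((source, column, rule))
    return plan


def apply_plan(plan: CleaningPlan, record: Dict[str, str]) -> Dict[str, str]:
    """Apply every step in order to a copy of the record."""
    cleaned = dict(record)
    for _source, column, rule in plan.steps:
        if column in cleaned:
            cleaned[column] = rule(cleaned[column])
    return cleaned


# Hypothetical rules: trim padding, remove a delimiter character,
# and normalize the date separator.
plan = build_plan(
    "orders.dat",
    table_definition_rules={"amount": str.strip},
    platform_rules={"name": lambda v: v.replace("|", " ")},
    analysis_rules={"date": lambda v: v.replace("/", "-")},
)
record = {"amount": " 12.50 ", "name": "a|b", "date": "2020/01/02"}
print(apply_plan(plan, record))
```

Keeping the rule source alongside each step is a design choice for traceability: it lets a reviewer see which requirement introduced a given cleaning step.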
