Robot visual processing method based on attention mechanism

A robot vision technology based on an attention mechanism, applied to instruments, computer components, character and pattern recognition, and related fields, which addresses problems such as the immaturity of existing robot visual systems.


Embodiment Construction

[0101] The present invention will be further described in detail below in conjunction with the embodiments and the accompanying drawings, but the embodiments of the present invention are not limited thereto.

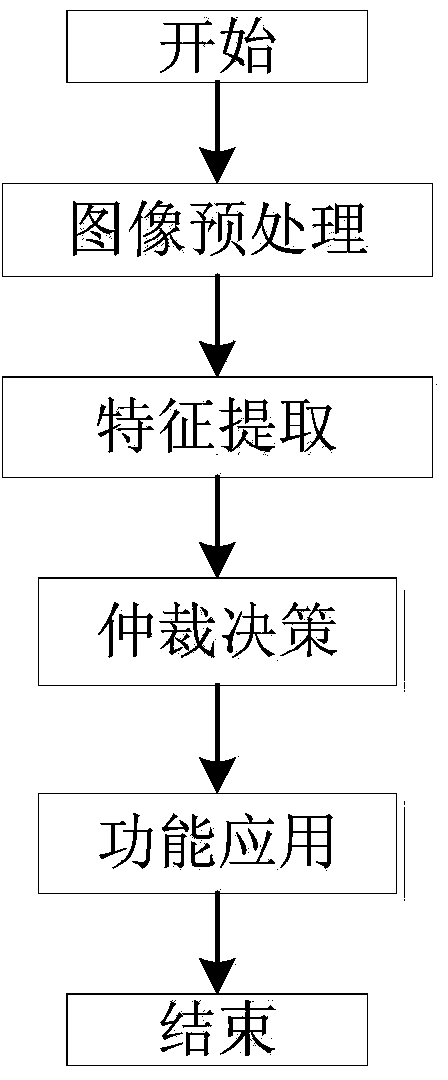

[0102] As shown in Figure 1, a robot visual processing method based on an attention mechanism comprises the following sequential steps:

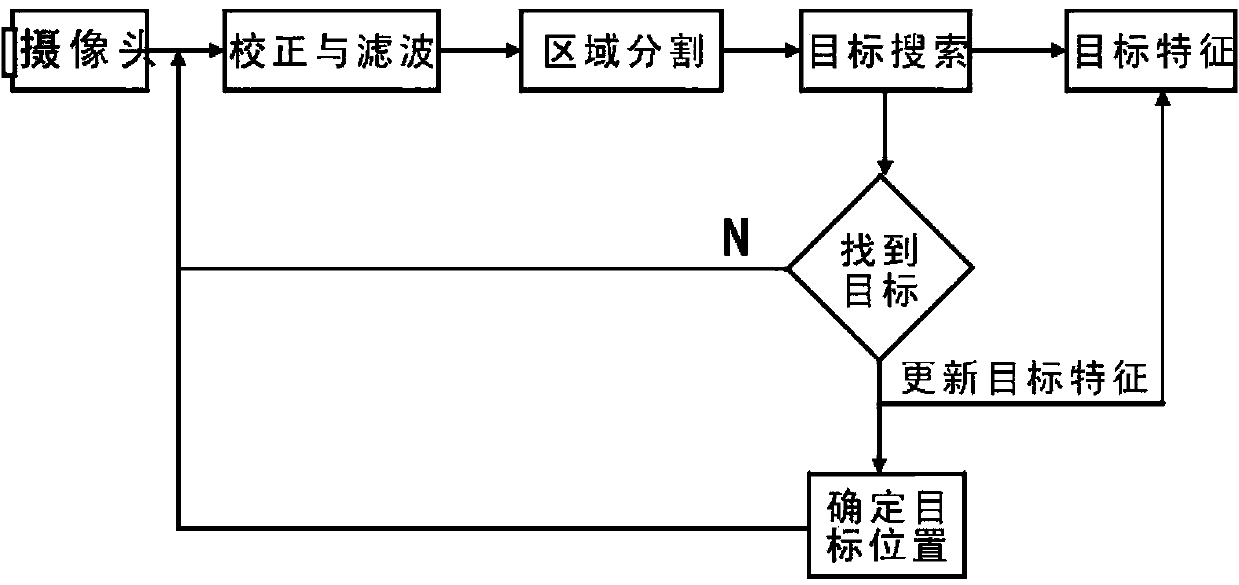

[0103] S1. Image preprocessing: perform basic processing on the image, including color space conversion, edge extraction, image transformation and image thresholding; the image transformation includes basic scaling, rotation, histogram equalization, and affine transformation of the image;

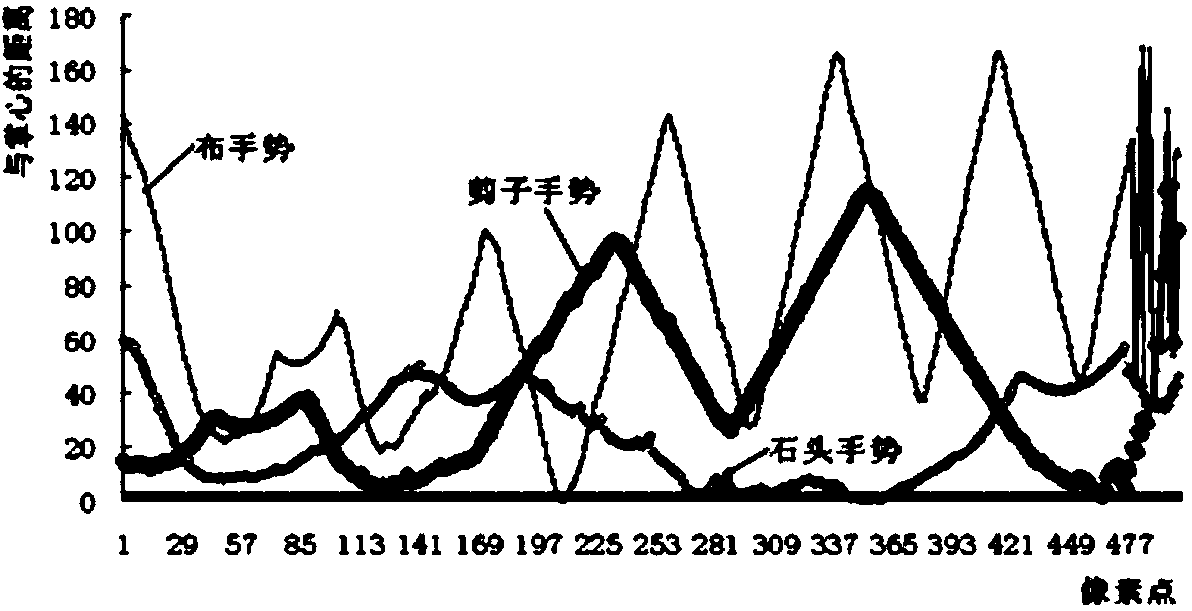

[0104] S2. Feature extraction: extract five types of feature information from the preprocessed image, namely skin color, color, texture, motion, and spatial coordinates;

[0105] S3. Arbitration decision: For the information obtained by the feature extraction layer, according to a certain arbitration decision strategy, it is selectively distributed to t...
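
For illustration only, three of the basic operations named in step S1 (color space conversion, histogram equalization, and image thresholding) might be sketched as follows. This is a minimal sketch and not the implementation of the invention: the function names, the nested-list image representation, the BT.601 luma weights, and the default threshold of 128 are all assumptions chosen for the example, since the description does not specify them.

```python
# Hypothetical sketch of part of step S1; names and parameters are assumptions.
# An RGB image is a list of rows of (r, g, b) tuples with 8-bit channels.
# A grayscale image is a list of rows of integers in the range 0..255.


def rgb_to_gray(img):
    """Color space conversion: RGB to 8-bit luma (ITU-R BT.601 weights, assumed)."""
    return [
        [int(round(0.299 * r + 0.587 * g + 0.114 * b)) for (r, g, b) in row]
        for row in img
    ]


def equalize_histogram(gray):
    """Histogram equalization using the cumulative distribution of intensities."""
    hist = [0] * 256
    for row in gray:
        for v in row:
            hist[v] += 1

    # Build the cumulative histogram.
    cdf = [0] * 256
    running = 0
    for level, count in enumerate(hist):
        running += count
        cdf[level] = running

    total = running
    cdf_min = next(c for c in cdf if c > 0)
    if total == cdf_min:
        # Every pixel has the same intensity; nothing to stretch.
        return [row[:] for row in gray]

    scale = 255 / (total - cdf_min)
    return [[round((cdf[v] - cdf_min) * scale) for v in row] for row in gray]


def threshold(gray, t=128):
    """Image thresholding: pixels at or above t become 255, all others 0."""
    return [[255 if v >= t else 0 for v in row] for row in gray]
```

A typical call chain would be `threshold(equalize_histogram(rgb_to_gray(image)))`. In practice a vision library would normally supply these operations, together with the edge extraction, scaling, rotation, and affine transformation that step S1 also lists; the plain-Python form here only keeps the example self-contained.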
