RANSAC algorithm-based visual localization method

A visual localization technique based on the RANSAC algorithm, applied in fields such as photo interpretation, navigation, and computing tools. It addresses the problems of many iterations, long calculation time, and slow positioning speed by reducing the number of iterations, which improves both calculation speed and positioning speed.


Examples

Specific Embodiment 1

[0024] The visual positioning method based on the RANSAC algorithm of this embodiment is shown in Figure 6.

[0025] The method is implemented through the following steps:

[0026] Step 1. Use the SURF algorithm to compute the feature points and feature point descriptors of the image uploaded by the user who is to be located;

[0027] Step 2. Select from the database a picture with the most matching points, and perform SURF matching between the feature point descriptors of the image obtained in Step 1 and the feature point descriptors of that picture. Each matched image and picture is defined as a pair of matching images, and each pair of matching images yields a set of matching points after matching;

[0028] Step 3. Using the matching-quality-based RANSAC algorithm, remove the wrong matching points from the matching points of each pair of matching images in Step 2, then determine the 4 pairs of matching images that contain the largest...
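The outlier-removal idea in Step 3 can be illustrated with a minimal sketch of textbook RANSAC. This is not the matching-quality variant of the method, whose details are truncated above: sampling here is uniform, the geometric model is assumed to be a homography, and the function names, the tolerance `tol`, the iteration count `n_iters`, and the synthetic data are all hypothetical choices made for illustration.

```python
import numpy as np

def fit_homography(src, dst):
    """Direct linear transform: estimate a 3x3 homography from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])
    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def project(h, pts):
    """Apply homography h to an (N, 2) array of points."""
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

def ransac_homography(src, dst, n_iters=200, tol=2.0, seed=0):
    """Plain RANSAC with uniform sampling (an assumption, not the improved variant).

    Returns a boolean mask marking the matches kept as inliers.
    """
    rng = np.random.default_rng(seed)
    best_mask = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        sample = rng.choice(len(src), size=4, replace=False)
        h = fit_homography(src[sample], dst[sample])
        # Degenerate samples may divide by zero; such models simply get no inliers.
        with np.errstate(divide="ignore", invalid="ignore"):
            err = np.linalg.norm(project(h, src) - dst, axis=1)
        mask = err < tol
        if mask.sum() > best_mask.sum():
            best_mask = mask
    return best_mask

# Synthetic data (hypothetical): 90 matches, of which the first 8 are corrupted.
rng = np.random.default_rng(1)
src = rng.uniform(0, 500, size=(90, 2))
h_true = np.array([[1.0, 0.05, 10.0], [-0.03, 1.0, 20.0], [1e-4, 0.0, 1.0]])
dst = project(h_true, src)
dst[:8] += rng.uniform(50, 100, size=(8, 2))  # deliberately wrong matches
inliers = ransac_homography(src, dst)
print(int(inliers.sum()), "matches kept,", int((~inliers).sum()), "removed")
```

A variant that draws samples preferentially from high-quality matches, rather than uniformly, would be expected to reach an all-inlier sample in fewer iterations, which is the kind of saving the method claims.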

Specific Embodiment 2

[0030] This embodiment differs from Specific Embodiment 1 in that it details the improved RANSAC algorithm. The improved, matching-quality-based RANSAC algorithm eliminates the wrong matching points from the matching points of each pair of matching images in Step 2 through the following process:

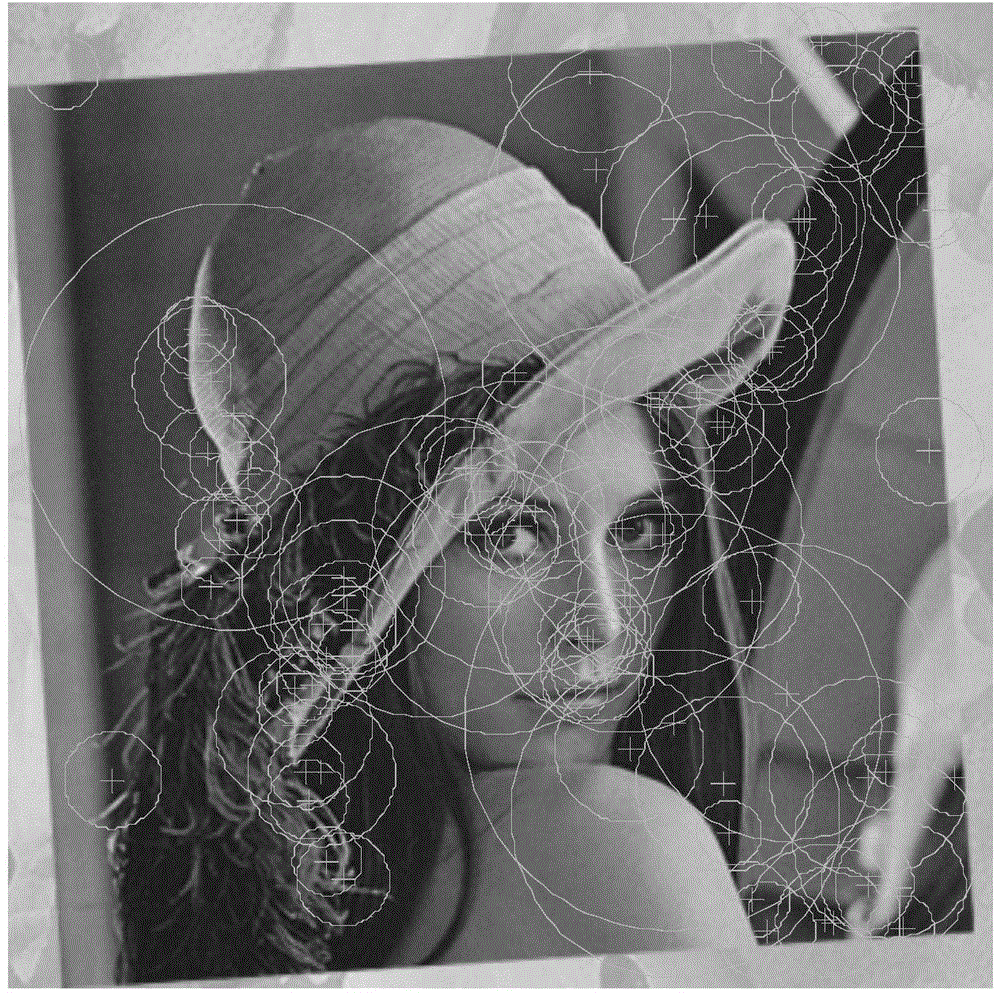

[0031] Step 31. Let the image uploaded by the user, shown in Figure 1, have n_1 feature points, and let the picture in the database, shown in Figure 2, have n_2 feature points. Select one feature point from the n_1 feature points of the image and compute its Euclidean distance to each of the n_2 feature points of the picture, using the Euclidean distance formula for i = 1, 2, ..., n_1. This yields n_2 Euclidean distances, as many as there are feature points in the picture. Then, from these n_2 Euclidean distances, extract the minimum Euclidean distance d and the second-smallest Euclidean distance, calculate the rati...
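The distance computation in Step 31 can be sketched with NumPy as follows. The sketch assumes that each feature point is represented by a fixed-length descriptor vector (64 dimensions here, as in standard SURF) and that the truncated text goes on to take the ratio of the smallest to the second-smallest distance; the function name and the random test descriptors are hypothetical.

```python
import numpy as np

def nearest_distance_ratio(query_desc, db_desc):
    """For each query descriptor, find the two closest database descriptors.

    query_desc: (n_1, k) descriptors of the uploaded image.
    db_desc:    (n_2, k) descriptors of the database picture, with n_2 >= 2.
    Returns the index of the nearest database descriptor, the smallest
    distance d, the second-smallest distance, and their ratio.
    """
    # Pairwise Euclidean distances, shape (n_1, n_2).
    diff = query_desc[:, None, :] - db_desc[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=2))
    order = np.argsort(dist, axis=1)
    rows = np.arange(dist.shape[0])
    nearest = order[:, 0]
    d_min = dist[rows, nearest]
    d_second = dist[rows, order[:, 1]]
    # Guard against division by zero when two database descriptors coincide.
    ratio = d_min / np.maximum(d_second, 1e-12)
    return nearest, d_min, d_second, ratio

# Hypothetical data: 5 database descriptors, 3 noisy copies used as queries.
rng = np.random.default_rng(0)
db_desc = rng.normal(size=(5, 64))
query_desc = db_desc[[2, 0, 4]] + 0.01 * rng.normal(size=(3, 64))
nearest, d_min, d_second, ratio = nearest_distance_ratio(query_desc, db_desc)
print(nearest, ratio)
```

A small ratio means the best candidate is much closer than the runner-up, so the ratio can serve as a per-match quality score. How the method uses it beyond this point is not recoverable from the truncated text.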

Embodiment 1

[0040] An implementation was carried out according to Specific Embodiment 2. Figure 1 is a schematic diagram of the feature points of the image uploaded by the user, which has 130 feature points. Figure 2 is a schematic diagram of the feature points of the database image, which has 109 feature points. There are 90 pairs of matching points between the two. Figure 4 is a schematic diagram of the matching points obtained with the improved RANSAC algorithm of the method of the present invention: 82 pairs of correct matching points are obtained, 8 pairs of wrong matching points are removed, and 1 iteration is needed. The improved RANSAC algorithm takes 0.203 seconds, whereas the original RANSAC algorithm takes 0.313 seconds and requires 2 iterations.

[0041] The schematic diagram of the matching points obtained without the improved RANSAC algorithm is shown in Figure 3.
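The figures reported in paragraph [0040] can be checked with a few lines of arithmetic. The variable names below are illustrative; the numbers are taken directly from that paragraph.

```python
# Values reported in paragraph [0040].
matches = 90          # pairs of matching points before outlier removal
correct = 82          # pairs kept as correct matches
removed = 8           # pairs removed as wrong matches
t_improved = 0.203    # seconds, improved RANSAC (1 iteration)
t_original = 0.313    # seconds, original RANSAC (2 iterations)

# The kept and removed pairs account for every initial match.
assert correct + removed == matches

inlier_fraction = correct / matches
speedup = t_original / t_improved
time_saved = 1 - t_improved / t_original

print(f"inlier fraction: {inlier_fraction:.3f}")
print(f"speedup: {speedup:.2f}x")
print(f"time saved: {time_saved:.1%}")
```

In this single example, about 91% of the initial matches are kept, and the improved algorithm runs roughly 1.54 times as fast as the original, a reduction in running time of about 35%.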
