Buffer oriented to data packet processing and method of using it

A data packet processing technique, applied in the computer field, that addresses problems such as the complexity of the data packet processing procedure. It reduces the number of memory copies, lowers overhead, and improves system performance.

Examples

Embodiment 1

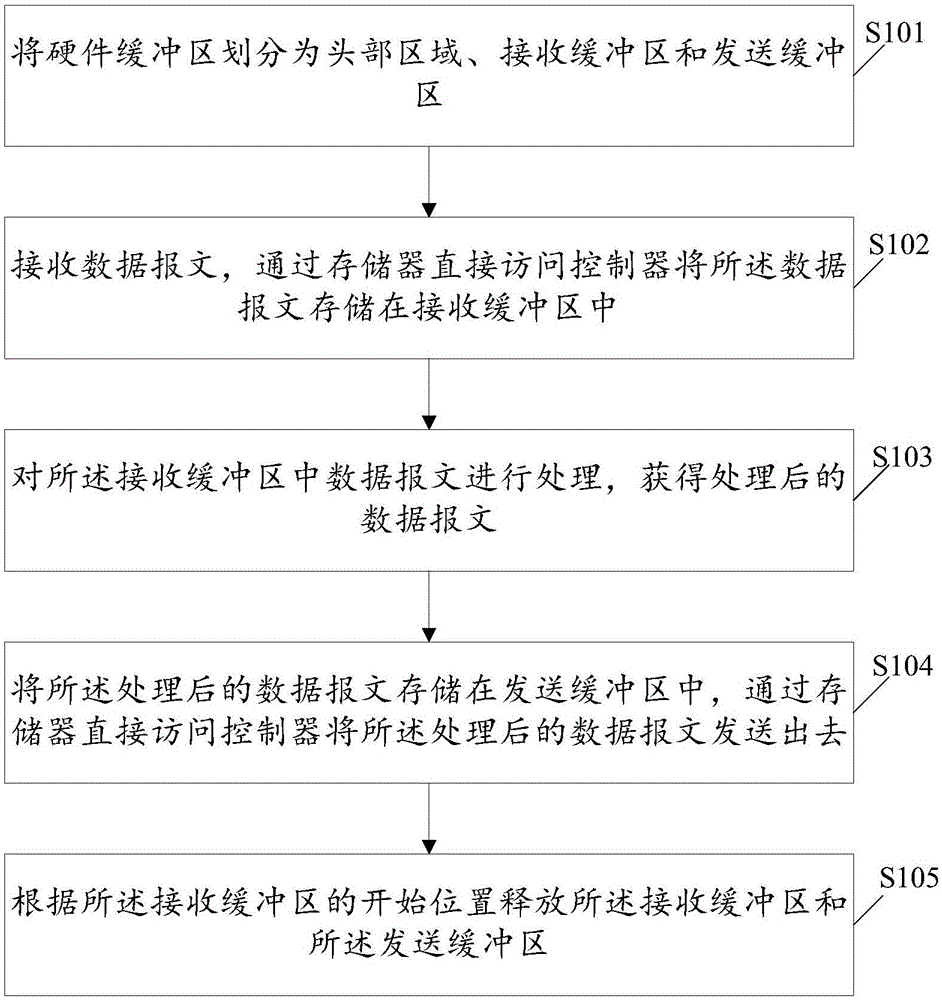

[0031] As shown in Figure 1, an embodiment of the present invention provides a method of using a buffer oriented to data packet processing, including:

[0032] S101. Divide the hardware buffer into a header area, a receive buffer, and a send buffer; send the start position of the header area, the start position of the receive buffer, and the start position of the send buffer to the direct memory access (DMA) controller. The header area is a reserved area: it is set aside when the buffer is allocated and is not used for receiving or sending, only for recording parameters or identifying the characteristics of the buffer. The receive buffer stores received data packets. The send buffer stores processed data packets.
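The division described in S101 can be sketched in C as follows. This is only an illustration under stated assumptions: the area sizes, the order of the three areas within the block, and the names `buf_layout`, `buf_layout_init`, and `buf_layout_free` are hypothetical and do not come from the patent text. `malloc` stands in for whatever DMA-capable allocator a real driver would use, and the hand-off of the start positions to the DMA controller is represented only by collecting them in a struct.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

/* Hypothetical sizes; the source does not specify any. */
#define HDR_SIZE 64u
#define RX_SIZE  2048u
#define TX_SIZE  2048u

/* The three start positions that S101 says are sent to the DMA controller. */
struct buf_layout {
    uint8_t *base;      /* start of the whole allocation */
    uint8_t *hdr_start; /* header area: reserved, holds parameters only */
    uint8_t *rx_start;  /* receive buffer: holds received packets */
    uint8_t *tx_start;  /* send buffer: holds processed packets */
};

/* Allocate one contiguous block and divide it into the three areas.
 * Assumed order: header, receive, send. Returns 0 on success, -1 on failure. */
int buf_layout_init(struct buf_layout *l)
{
    assert(l != NULL);
    uint8_t *base = malloc((size_t)HDR_SIZE + RX_SIZE + TX_SIZE);
    if (base == NULL) {
        return -1;
    }
    l->base = base;
    l->hdr_start = base;
    l->rx_start = l->hdr_start + HDR_SIZE;
    l->tx_start = l->rx_start + RX_SIZE;
    return 0;
}

/* Release the whole block in one call, mirroring the single allocation. */
void buf_layout_free(struct buf_layout *l)
{
    assert(l != NULL);
    free(l->base);
    l->base = l->hdr_start = l->rx_start = l->tx_start = NULL;
}
```

A driver following this sketch would call `buf_layout_init` once at start-up and then pass `hdr_start`, `rx_start`, and `tx_start` to the hardware through whatever register or descriptor interface that hardware defines.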

[0033] Sending the start position of the header area, the start position of the receive buffer and the start position of the send buffer to the network coprocessor of t...

Embodiment 2

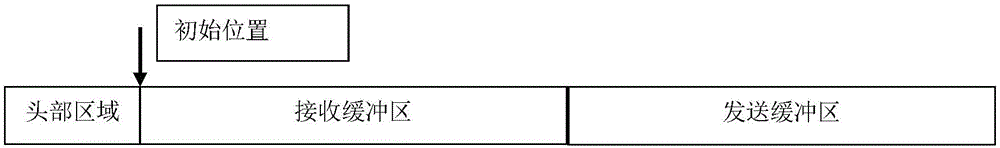

[0041] As shown in Figure 2, an embodiment of the present invention provides a buffer for data packet processing, including a header area, a receive buffer, and a send buffer.

[0042] The header area, send buffer, and receive buffer are placed together and allocated in a single operation during initialization. The arrow in the figure points to the start address of the receive buffer as seen by the hardware, that is, its initial position. Data packets are received at this address, and the buffer is also reclaimed at this address. The header area is a reserved area that is not used for receiving or sending; it is used only to record parameters or to identify the characteristics of the buffer.
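One way to picture a single address serving for both receiving and reclaiming is the sketch below. It assumes, purely for illustration, that the header area sits immediately before the receive buffer, so software can step back from the hardware-visible address to find the header. The struct fields, the sizes, the magic value, and all function names are hypothetical. `malloc` again stands in for a DMA-capable allocator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>

#define PKT_RX_SIZE 2048u       /* hypothetical receive buffer size */
#define PKT_TX_SIZE 2048u       /* hypothetical send buffer size */
#define PKT_MAGIC   0x42554631u /* hypothetical tag identifying the buffer */

/* Hypothetical header record: parameters and buffer characteristics only. */
struct pkt_hdr {
    uint32_t magic;
    uint32_t rx_size;
    uint32_t tx_size;
    uint32_t reserved;
};

/* Allocate header, receive buffer, and send buffer in one operation.
 * Returns the hardware-visible start address of the receive buffer,
 * or NULL if the allocation fails. */
uint8_t *pkt_buf_alloc(void)
{
    uint8_t *base = malloc(sizeof(struct pkt_hdr) + PKT_RX_SIZE + PKT_TX_SIZE);
    if (base == NULL) {
        return NULL;
    }
    struct pkt_hdr *h = (struct pkt_hdr *)base;
    h->magic = PKT_MAGIC;
    h->rx_size = PKT_RX_SIZE;
    h->tx_size = PKT_TX_SIZE;
    h->reserved = 0;
    return base + sizeof(struct pkt_hdr);
}

/* Step back from the receive address to the header that precedes it. */
struct pkt_hdr *pkt_buf_hdr(uint8_t *rx_start)
{
    return (struct pkt_hdr *)(rx_start - sizeof(struct pkt_hdr));
}

/* Start of the send buffer, assumed to follow the receive buffer. */
uint8_t *pkt_buf_tx(uint8_t *rx_start)
{
    return rx_start + pkt_buf_hdr(rx_start)->rx_size;
}

/* Reclaim the whole block using the same address the hardware received on. */
void pkt_buf_free(uint8_t *rx_start)
{
    struct pkt_hdr *h = pkt_buf_hdr(rx_start);
    assert(h->magic == PKT_MAGIC); /* catch addresses that are not ours */
    free(h);
}
```

With this layout the caller only ever tracks one pointer per buffer: the header is found by subtraction, so no separate lookup table is needed when the buffer is reclaimed.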

[0043] The receive buffer stores received data packets at contiguous physical addresses, and its start position is sent to the network coprocessor of the central processor; specifically, the network coprocessor of the central processor ...
